GenAI, the most talked-about manifestation of AI these days, imposes two tough constraints on a hardware platform. First, it demands massive memory to serve large language models with billions of parameters. That is feasible in principle with a processor plus big off-chip DRAM, and perhaps acceptable for some inference applications, but it is too slow and power-hungry for fast datacenter training. Second, GenAI cores are physically big, already running up against reticle limits. Control, memory management, IO, and other logic must often go somewhere else while still being tightly connected for low latency. The solution, of course, is an implementation based on chiplets connected through an interposer in a single package: one or more chiplets for the AI core, HBM memory stacks, and control and other logic on perhaps one or more additional chiplets. All nice in principle, but how do even hyperscalers with deep pockets make this work in practice? Alphawave Semi has already proven a very practical solution, as I learned from a presentation by Mohit Gupta (SVP and GM of Custom Silicon and IP at Alphawave Semi), delivered at the recent MemCon event in Silicon Valley.

Start with connectivity

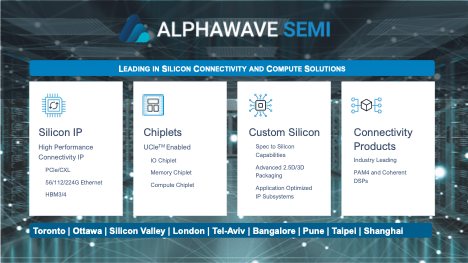

This and the next section are intimately related, but I have to start somewhere. Silicon connectivity (and compute) is what Alphawave Semi does: PCIe, CXL, UCIe, Ethernet, HBM; complete IP subsystems with controllers and PHYs integrated into chiplets and custom silicon.

Memory performance is critical. Training first requires memory for model state (weights, activations, etc.), but the platform must also provide pre-allocated working memory to handle transformer calculations. If you once took (and remember) a linear algebra course, a big chunk of these calculations is devoted to lots and lots of matrix/vector multiplications. Big matrices and vectors. The working space needed for intermediate storage is significant; I have seen estimates running over 100GB (the latest version of Nvidia Grace Hopper reportedly includes over 140GB). This data must also move very quickly between HBM memory and/or IOs and the AI engine. Alphawave Semi supports better than a terabyte/second of aggregate (HBM/PCIe/Ethernet) bandwidth. For the HBM interface they provide a memory management subsystem with an HBM controller and PHY in the SoC, communicating with the HBM controller sitting at the base of each HBM memory stack. This ensures not only protocol compliance but also interoperability between the memory subsystem and memory stack controllers.
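
To get a feel for where numbers like "over 100GB" come from, here is a back-of-envelope sketch. It is my own illustration, not something from the presentation. It assumes the commonly quoted rule of thumb of roughly 16 bytes of training state per parameter for mixed-precision training with an Adam-style optimizer (weights, gradients, and optimizer state), ignores activations entirely, and uses a hypothetical 7-billion-parameter model. The 1 TB/s figure stands in for the aggregate bandwidth mentioned above.

```python
GB = 10**9   # decimal gigabyte
TB = 10**12  # decimal terabyte


def training_state_bytes(num_params: int, bytes_per_param: int = 16) -> int:
    """Rough training state size: weights + gradients + optimizer state.

    bytes_per_param=16 is an assumed rule of thumb for mixed-precision
    Adam-style training; activations and working buffers are NOT included.
    """
    return num_params * bytes_per_param


def transfer_seconds(num_bytes: int, bandwidth_bytes_per_s: int) -> float:
    """Ideal time to move num_bytes once at a given sustained bandwidth."""
    return num_bytes / bandwidth_bytes_per_s


# Hypothetical 7-billion-parameter model (an assumption for illustration).
state = training_state_bytes(7 * 10**9)
print(f"training state: {state / GB:.0f} GB")
print(f"one full pass at 1 TB/s: {transfer_seconds(state, 1 * TB) * 1000:.0f} ms")
```

Even under these simplified assumptions a mid-sized model lands above 100GB before any working memory is counted, and touching all of that state once takes on the order of a hundred milliseconds at a terabyte per second. That is why both capacity and bandwidth matter.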

Connectivity between chiplets is managed through Alphawave UCIe IP (protocol and PHY), delivering 24Gbps per data lane. This IP has already been proven in 3nm silicon. A major application for this connectivity might well be connecting the AI accelerator to an Arm Neoverse compute subsystem (CSS) charged with managing the interface between the AI world (networks, ONNX and the like) and the datacenter world (PyTorch, containers, Kubernetes and so on). Which conveniently segues into the next topic, Alphawave Semi’s partnership with Arm in the Total Design program and how to build these chiplet-based systems in practice.
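
A quick sketch of what 24Gbps per lane adds up to. Only the lane rate comes from the presentation. The lane counts per module (16 and 64 data lanes per direction, which I understand to be the standard-package and advanced-package module widths in UCIe) and the 1000 GB/s target are my assumptions for illustration, and the arithmetic is raw bandwidth with no protocol overhead.

```python
import math

LANE_RATE_GBPS = 24  # per-lane data rate quoted in the presentation


def module_bandwidth_gbytes(lanes: int, lane_rate_gbps: float = LANE_RATE_GBPS) -> float:
    """Raw one-direction bandwidth of a module in GB/s (no protocol overhead)."""
    return lanes * lane_rate_gbps / 8  # 8 bits per byte


def modules_needed(target_gbytes: float, lanes: int) -> int:
    """Modules required to reach a target raw bandwidth in one direction."""
    return math.ceil(target_gbytes / module_bandwidth_gbytes(lanes))


# Assumed lane counts per module; check the UCIe spec for your configuration.
for lanes in (16, 64):
    bw = module_bandwidth_gbytes(lanes)
    print(f"x{lanes} module: {bw:.0f} GB/s raw, "
          f"{modules_needed(1000, lanes)} modules for 1000 GB/s")
```

Under these assumptions a single wide module carries a few hundred gigabytes per second in each direction, so terabyte-class die-to-die links need only a handful of modules along the die edge.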

The Arm partnership and building chiplet-based devices

We already know that custom many-core servers are taking off among hyperscalers. It shouldn’t be too surprising then that in the fast-moving world of AI, custom AI accelerators are also taking off. If you want to differentiate on a world-beating AI core you need to surround it with compute, communications, and memory infrastructure to squeeze maximum advantage out of that core. This seems to be exactly what is happening at Google (Axion and the TPU series), Microsoft (Maia), AWS (Trainium), and others. Since I don’t know of any other technology that can serve this class of devices, I assume these are all chiplet-based.

By design these custom systems use the very latest packaging technologies. Some aspects of design look rather like SoC design based on proven reusable elements, except that now those elements are chiplets rather than IPs. We’ve already seen the beginnings of chiplet activity around Arm Neoverse CSS subsystems as a compute front-end to an AI accelerator. Alphawave Semi can also serve this option, together with memory and IO subsystem chiplets and HBM chiplets. All the hyperscaler must supply is the AI engine (and software stack including ONNX or similar runtime).

What about the UCIe interoperability problem I raised in an earlier blog? One way to mitigate this problem is to use the same UCIe IP throughout the system. Alphawave Semi can do this because they offer custom silicon implementation capabilities to build these monsters, from design through fab and OSAT to delivery of tested, packaged parts. They also have established relationships with EDA and IP vendors and with foundry and OSAT partners, for example with TSMC on CoWoS and InFO_oS packaging.

The cherry on this cake is that Alphawave Semi is also a founding member with Arm of the Total Design program and can already boast multiple Arm-based SoCs in production. As proof, they can already claim a 5nm AI accelerator system with 4 accelerator die and 8 HBM3e stacks, a 3nm Neoverse-based system with 2 compute die and 4 HBM3e stacks, and a big AI accelerator chip with one reticle-size accelerator plus an HBM3e/112G/PCIe subsystem and 6 HBM3e stacks. Alphawave Semi also offers custom silicon implementation for conventional (no-chiplet) SoCs.

Looks like Alphawave Semi is at the forefront of a new generation of semiconductor enterprises, serving high-performance AI infrastructure for systems teams who demand the very latest in IP, connectivity, and packaging technology (and are willing to spend whatever it takes). I have noticed a few other semis also taking this path. Very interesting! If you want to learn more click HERE.
