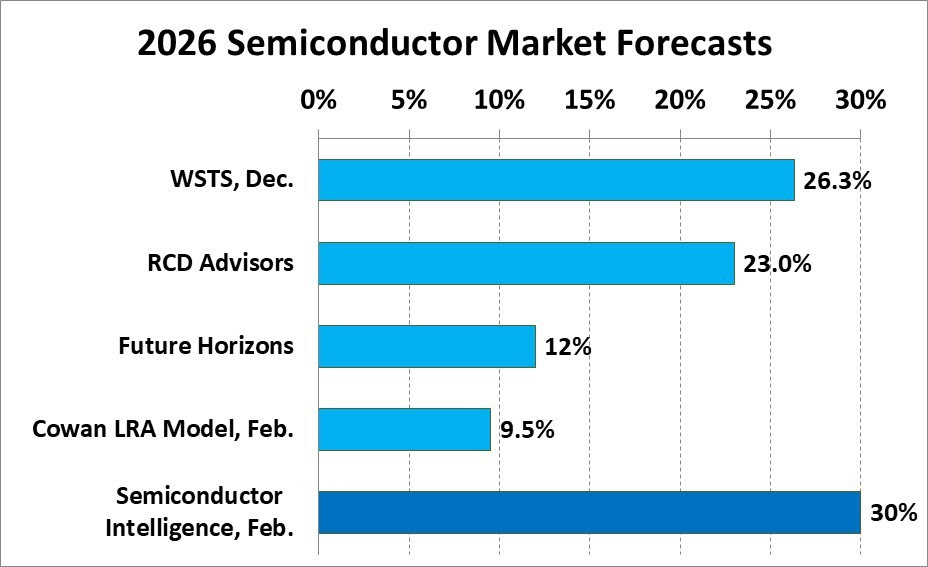

The semiconductor industry is in the midst of a structural supply challenge that’s tightly coupled to exploding demand for advanced chips, especially those used in AI, HPC, and next-generation mobile and consumer devices. At the center of this vortex is the 2nm class of manufacturing technology, representing one of the most …

Podcast EP334: The Unique Benefits of LightSolver’s Laser Processing Unit Technology with Dr. Chene Tradonsky

Daniel is joined by Dr. Chene Tradonsky, a physicist and the CTO and co-founder of LightSolver, where he leads the development of a proprietary physics-based computing system built on coupled laser dynamics to accelerate compute-heavy simulations and other computationally demanding workloads. Before moving into physics,…

An AI-Native Architecture That Eliminates GPU Inefficiencies