Since the fall of the Roman Empire, France has played a defining role in shaping Western civilization. In the 9th century, Charlemagne—a Frank—united much of Europe under one rule, leaving behind a legacy so profound he is still remembered as the “Father of Europe.” While Italy ignited the Renaissance, it was 16th-century France… Read More

CEO Interview with Dr. Maksym Plakhotnyuk of ATLANT 3DDr. Maksym Plakhotnyuk, is the CEO and Founder…Read More

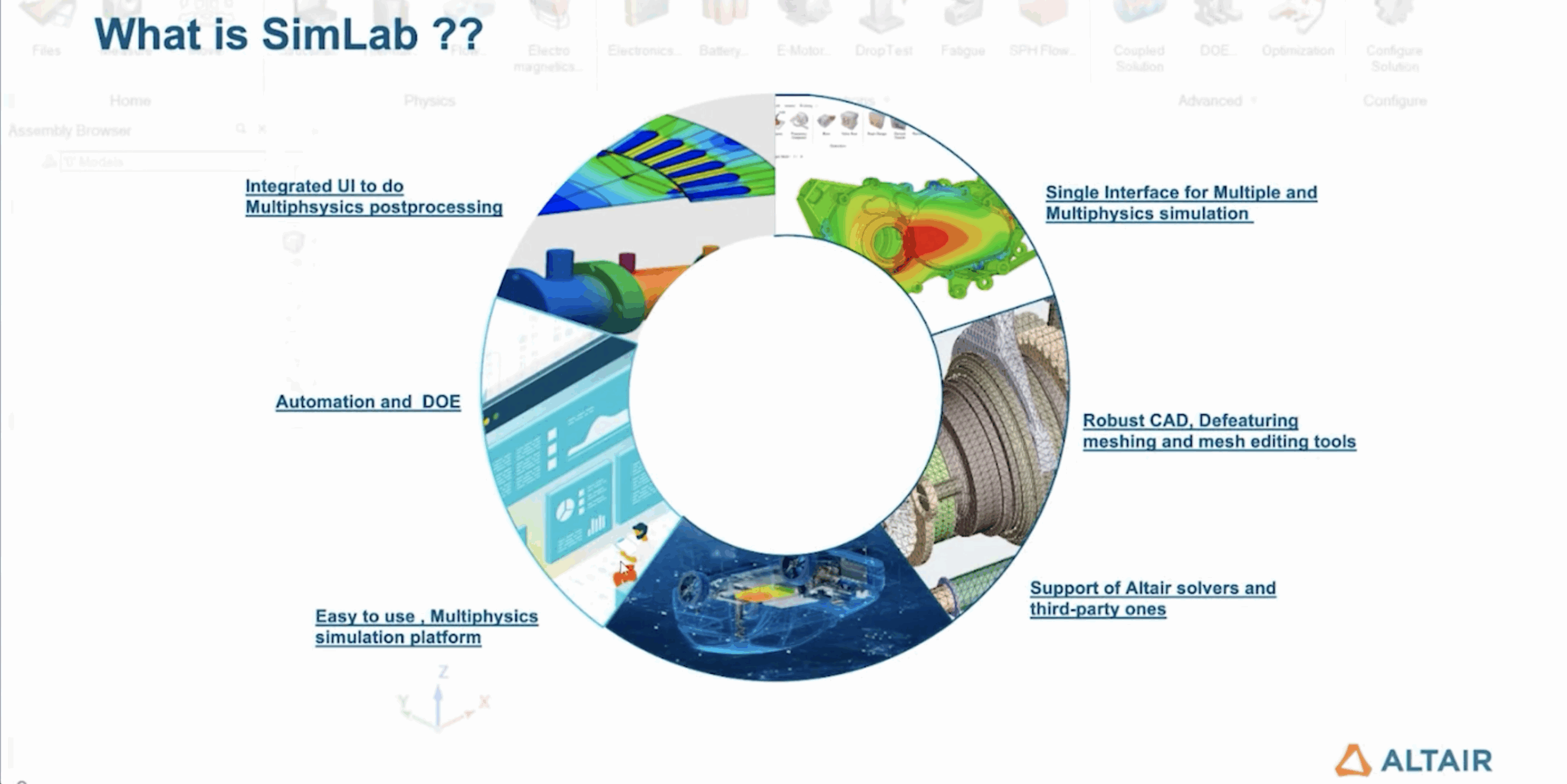

CEO Interview with Dr. Maksym Plakhotnyuk of ATLANT 3DDr. Maksym Plakhotnyuk, is the CEO and Founder…Read More Altair SimLab: Tackling 3D IC Multiphysics Challenges for Scalable ECAD ModelingThe semiconductor industry is rapidly moving beyond traditional…Read More

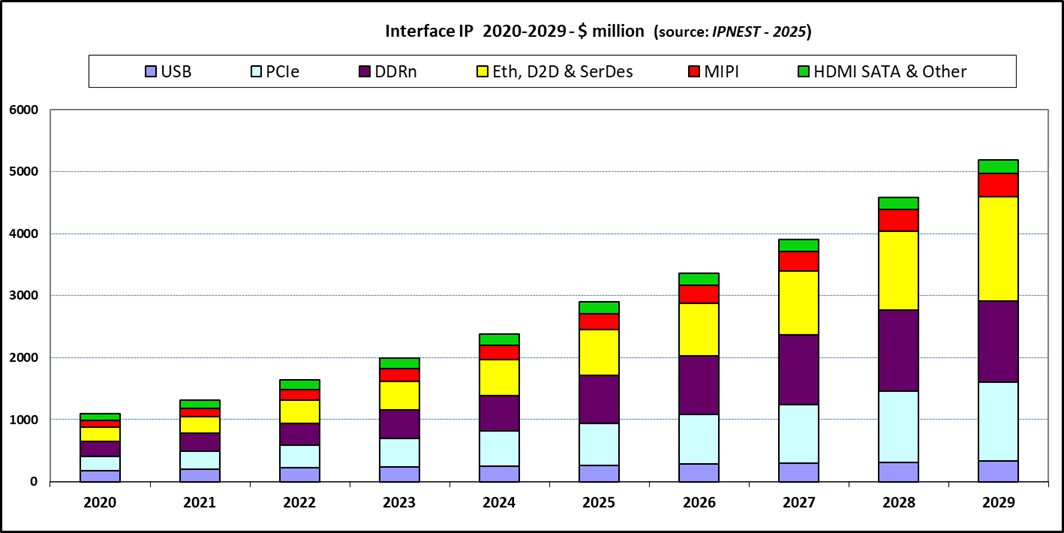

Altair SimLab: Tackling 3D IC Multiphysics Challenges for Scalable ECAD ModelingThe semiconductor industry is rapidly moving beyond traditional…Read More AI Booming is Fueling Interface IP 23.5% YoY GrowthAI explosion is clearly driving semi-industry since 2020.…Read More

AI Booming is Fueling Interface IP 23.5% YoY GrowthAI explosion is clearly driving semi-industry since 2020.…Read MoreCEO Interview with Dr. Maksym Plakhotnyuk of ATLANT 3D

Dr. Maksym Plakhotnyuk is the CEO and Founder of ATLANT 3D, a pioneering deep-tech company at the forefront of innovation, developing the world’s most advanced atomic-scale manufacturing platform. Maksym is the inventor of the first-ever atomic layer advanced manufacturing technology, enabling atomic-precision development… Read More

CEO Interview with Carlos Pardo of KD

Carlos Pardo has a distinguished career as a manager in the microelectronics industry, excelling in leading R&D teams. He possesses extensive expertise in the high-tech silicon sector, encompassing both hardware and software development. Previously, he served as the Technical Director at SIDSA, where he managed R&D… Read More

Podcast EP297: An Overview of sureCore’s New Silicon Services with Paul Wells

Dan is joined by sureCore CEO Paul Wells. Paul has worked in the semiconductor industry for over 25 years, including two years as director of engineering for Pace Networks, where he led a multidisciplinary, 70-strong product development team creating a broadcast-quality video & data mini-headend. Before that, he worked for… Read More

CEO Interview with Darin Davis of SILICET

With over 30 years of diverse industry experience, Darin leads SILICET, a semiconductor IP licensing firm. He spearheaded a strategic pivot to focus on a seamless LDMOS innovation that delivers unmatched cost, performance and reliability advantages – backed by a robust global patent portfolio. Prior to co-founding SILICET,… Read More

Altair SimLab: Tackling 3D IC Multiphysics Challenges for Scalable ECAD Modeling

The semiconductor industry is rapidly moving beyond traditional 2D packaging, embracing technologies such as 3D integrated circuits (3D ICs) and 2.5D advanced packaging. These approaches combine heterogeneous chiplets, silicon interposers, and complex multi-layer routing to achieve higher performance and integration.… Read More

AI Booming is Fueling Interface IP 23.5% YoY Growth

The AI explosion has clearly been driving the semiconductor industry since 2020. AI processing, based on GPUs, needs to be as powerful as possible, but a system will reach its optimum only if it can rely on top-tier interconnects. The various subsystems need to be interconnected with ever more bandwidth and lower latency, creating the need for ever more advanced protocol… Read More

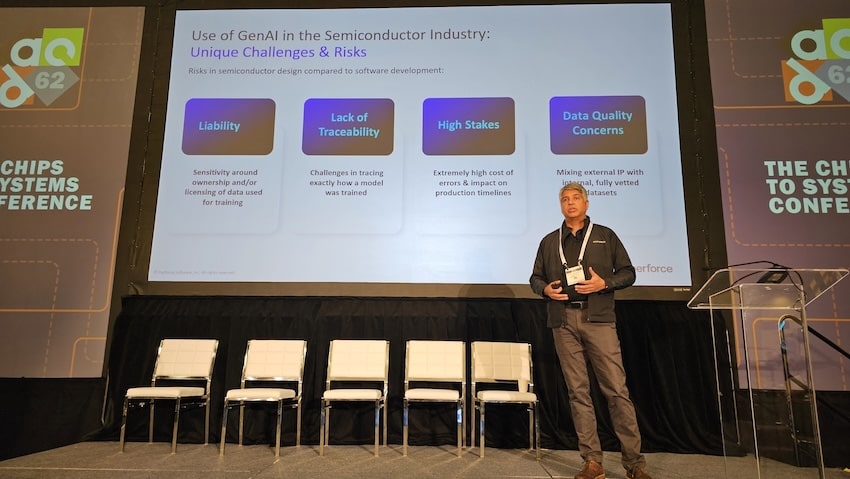

Building Trust in Generative AI

AI technology was prevalent at DAC 2025, but can we really trust what Generative AI (GenAI) is producing? Vishal Moondhra, VP of Solutions Engineering at Perforce, talked about this topic in the Exhibitor Forum on Monday, so I got a front-row seat to learn more.

Vishal started out by introducing the four challenges and risks of using… Read More

Podcast EP296: How Agentic and Autonomous Systems Make Scientists More Productive with SandboxAQ’s Tiffany Callahan

Dan is joined by Dr. Tiffany Callahan from SandboxAQ. As one of the early movers in the evolving sciences of computational biology, machine learning and artificial intelligence, Tiffany serves as the technical lead for agentic and autonomous systems at SandboxAQ. She has authored over 50 peer-reviewed publications, launched… Read More

Insider Opinions on AI in EDA. Accellera Panel at DAC

In AI, it is easy to be distracted by hype and miss the real advances in technology and adoption that are making a difference today. Accellera hosted a panel at DAC on just this topic, moderated by Dan Nenni (Mr. SemiWiki). The panelists were Chuck Alpert, Cadence’s AI Fellow driving cross-functional Agentic AI solutions throughout… Read More

TSMC N3 Process Technology Wiki