For a long time, memories were the primary technology driver for process development. If you built memories, you got access to cutting-edge process information. If you also built other products, that access could give you a competitive edge. In many cases, FPGAs are replacing memories as the driver for advanced processes. The technology access benefits still apply and at least one company, Flex Logix, is reaping those benefits.

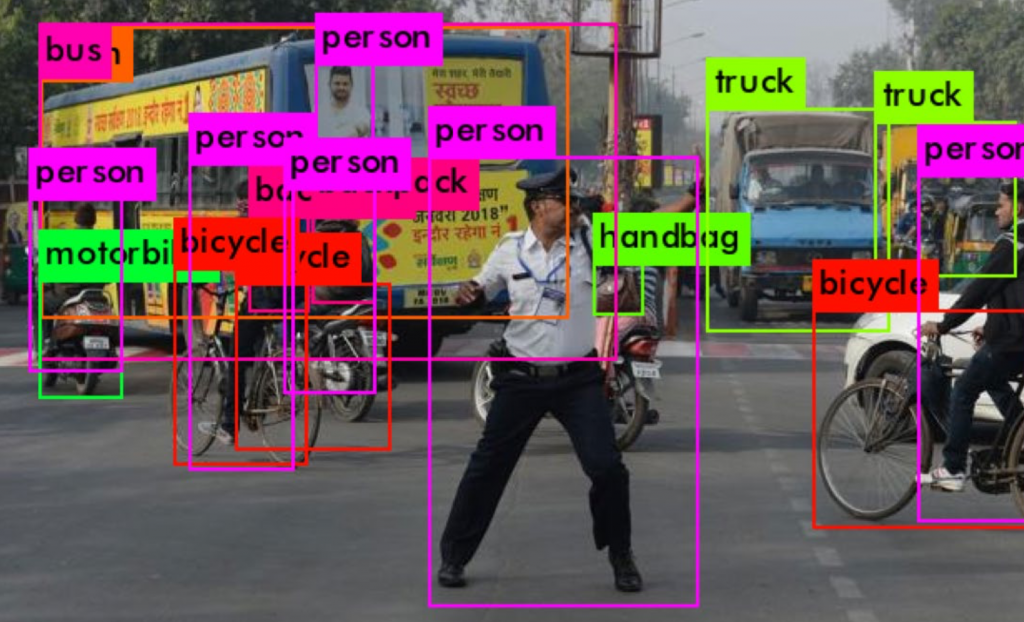

Flex Logix has been known for its embedded FPGA technology, providing the best of both custom logic and programmable logic in one chip. On April 9, the company disclosed real-world AI inference benchmarks for its InferX X1 product. This new product contains both custom logic and embedded programmable logic.

An eye-popping overview of the benchmark results was presented at the Spring Linley Processor Conference on the same day. This popular conference was conducted as a virtual event. I got a chance to attend many of the sessions and I can say that The Linley Group did a great job capturing their live event in a virtual setting, delivering both high-quality presentations and providing informal access to the presenters. Expect to see more events like this.

The presentation was given by Vinay Mehta, AI inference technical marketing manager at Flex Logix. Prior to joining Flex Logix, Vinay spent two years at Lyft designing next-generation hardware for Lyft’s self-driving systems. His activities included demonstrating quantization and hardware acceleration of neural networks and evaluating edge and data center inference accelerator hardware and software. Vinay is a very credible speaker on AI topics.

Vinay’s presentation covered an overview of edge computing, customer requirements, characterizing workloads, a discussion of throughput vs. latency for streaming, benchmark details and convolution memory access pattern strategies. Here are some of the highlights of Vinay’s talk…

The InferX X1 is completing final design checks and will tape out soon (TSMC 16FFC). It contains 4,000 MACs interconnected with Flex Logix’s EFLX eFPGA technology. This flexible interconnect helps the product achieve high utilization. Total power is 13.5 watts max, with typical power consumption substantially lower. Chip samples and a PCIe evaluation card are expected in Q3 2020. The part has flexibility built-in to support low-latency operation for both complex and simpler models at the edge.

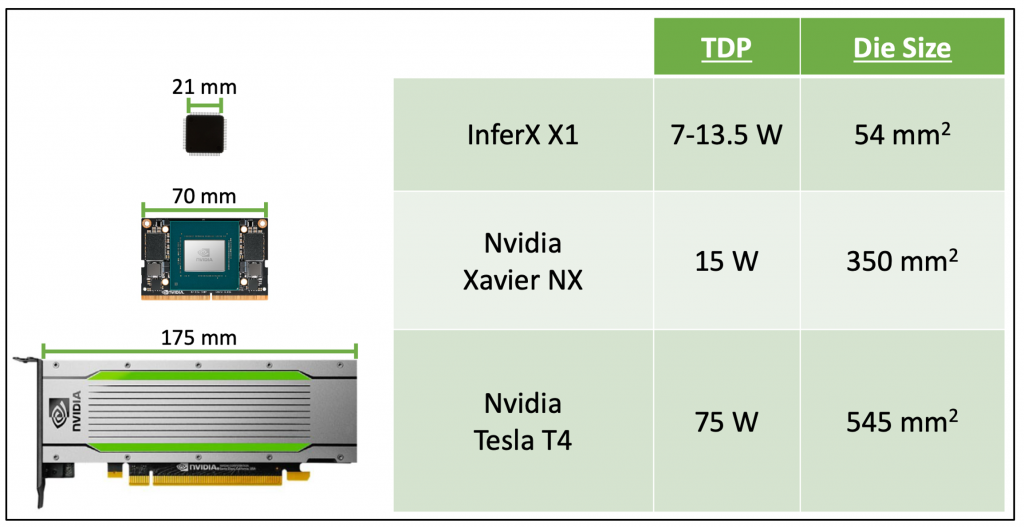

Vinay covered many aspects of customer benchmarks for the InferX X1. To begin with, power and size stack up as shown in the figure below. The Flex Logix part appears to be lower power and less expensive (thanks to the small die size).

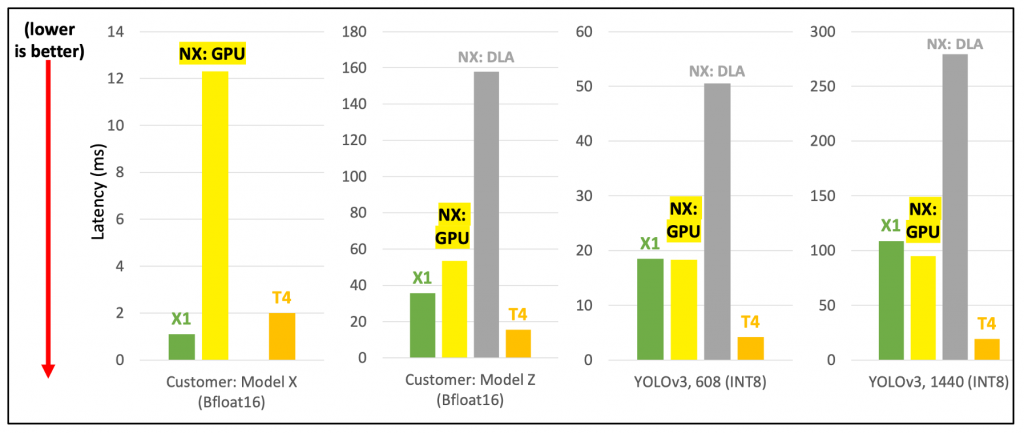

Regarding performance benchmarking, Vinay spent some time reviewing the various benchmarks (e.g., MobileNet, ResNet-50, Inception v4, YOLOv3). He also explained that many benchmarks assume perfectly ordered data, which often is not the case in real-world workloads. Putting this all together to examine benchmark performance for latency-sensitive edge applications yields the figure below. Note these results focus on latency without regard to power and cost.

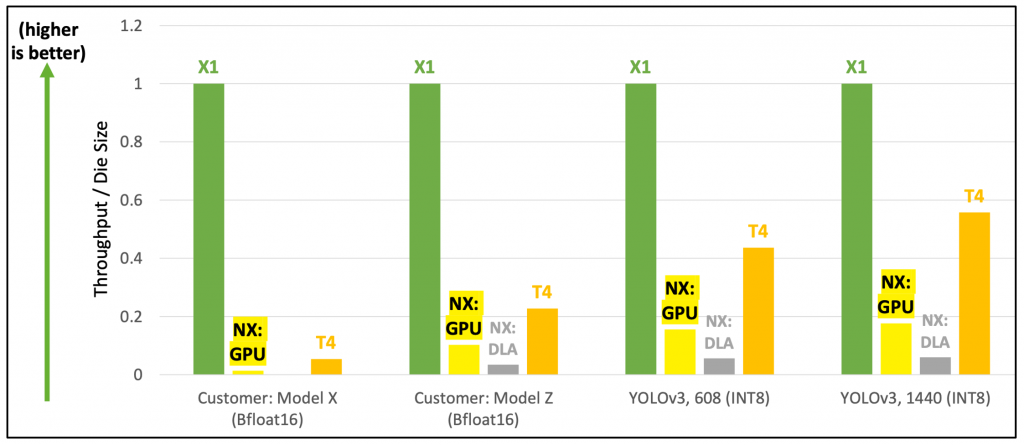

Vinay pointed out that the view above isn’t holistic in the sense that customers will be interested in the combination of performance, power and cost. Looking at the benchmark data through the lens of throughput relative to die size, which is a proxy for cost, you get the figure below. The InferX X1 has a clear advantage thanks to its small size and efficient utilization of resources.
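To make the "throughput relative to die size" metric concrete, here is a minimal Python sketch of how such a ranking could be computed. The class name, field names, and all numbers are hypothetical placeholders chosen for illustration; they are not figures from the presentation or measurements of any real part.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical record for one inference part (all fields are assumptions)."""
    name: str
    inferences_per_second: float  # measured throughput on some benchmark
    die_area_mm2: float           # die size, used here as a rough proxy for cost

    def throughput_per_mm2(self) -> float:
        # Area efficiency: how much throughput each square millimeter delivers.
        return self.inferences_per_second / self.die_area_mm2


def rank_by_area_efficiency(parts):
    """Return parts sorted from most to least throughput per mm^2."""
    return sorted(parts, key=lambda p: p.throughput_per_mm2(), reverse=True)


# Placeholder values only; NOT real benchmark data.
parts = [
    Accelerator("part_a", inferences_per_second=100.0, die_area_mm2=50.0),
    Accelerator("part_b", inferences_per_second=300.0, die_area_mm2=300.0),
]
ranked = rank_by_area_efficiency(parts)
for p in ranked:
    print(f"{p.name}: {p.throughput_per_mm2():.2f} inferences/s per mm^2")
```

In this made-up example, the smaller part wins on area efficiency even though the larger part has three times the raw throughput, which is the kind of reversal a cost-normalized view can expose.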

Vinay then spent some time explaining how various convolutional neural network (CNN) algorithms are mapped to the InferX X1 architecture. The ability to “re-wire” the part based on the memory access patterns of the particular convolutional kernel or series of convolutional kernels being implemented is a key reason for the results portrayed in the figure above. Flex Logix’s embedded FPGA technology provides this critical level of differentiation, as it allows for more complicated operations (such as 3D convolutions) to map efficiently to its unique 1D systolic architecture.
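As a rough illustration of why memory access patterns matter here, the sketch below shows a generic way to express a 2D convolution as a series of 1D dot products, where each output value is a chain of multiply-accumulate (MAC) operations. This is a textbook-style lowering written for this article, not Flex Logix's actual mapping; the function names are hypothetical, and the sketch assumes stride 1, no padding, and no kernel flip.

```python
def conv2d_direct(image, kernel):
    """Reference 2D convolution (stride 1, no padding, no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def conv2d_as_1d_dot_products(image, kernel):
    """Same result, computed as one 1D dot product per output value.

    The order in which each window is gathered from `image` is the
    memory access pattern; a different kernel shape implies a
    different gather order feeding the same chain of MACs.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    flat_kernel = [w for row in kernel for w in row]  # weights as a 1D vector
    output = []
    for r in range(out_h):
        out_row = []
        for c in range(out_w):
            # Gather the 2D window into a 1D vector (row-major order).
            window = [image[r + i][c + j] for i in range(kh) for j in range(kw)]
            acc = 0
            for x, w in zip(window, flat_kernel):
                acc += x * w  # one multiply-accumulate (MAC) step
            out_row.append(acc)
        output.append(out_row)
    return output


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, 1]]
result = conv2d_as_1d_dot_products(image, kernel)
print(result)
```

The arithmetic is identical in both functions; only the way data is fetched and lined up differs. Hardware that can change that data routing per kernel, rather than fixing it in silicon, has more room to keep its MACs busy across different layer shapes.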

Vinay’s talk covered many more aspects of real-time inference requirements at the edge. There was also a very useful Q&A session. If you weren’t able to attend his presentation at the Linley Conference, there is a replay available. I highly recommend you catch that. You can register to access the replay here. The presentation is on day four, April 9, 2020 at 11:10 AM.
