In sports, we all know that even a team with the best individual players in every role must be coordinated to win a championship. In healthcare, a patient is better served when a well-trained primary physician coordinates with the various medical specialists. The field of semiconductors involves a series…

Tag: ai at the edge

Build a Sophisticated Edge Processing ASIC FAST and EASY with Sondrel

Building a custom chip for edge computing applications can be quite daunting. For starters, there is very little power available at the edge, so energy efficiency will be top of mind. The whole point of edge processing is to off-load the time-consuming and costly process of sending data to the cloud, so substantial processing capability…

Flex Logix Brings AI to the Masses with InferX X1

In April, I covered a new AI inference chip from Flex Logix. Called InferX X1, this part had some very promising performance metrics. Rather than the data center, the chip focused on accelerating AI inference at the edge, where power and form factor are key metrics for success. The initial information on the chip was presented at …

Accelerating Edge Inference with Flex Logix’s InferX X1

For a long time, memories were the primary technology driver for process development. If you built memories, you got access to cutting-edge process information. If you built other products, this could give you a competitive edge. In many cases, FPGAs are replacing memories as the driver for advanced processes. The technology…

Wave Computing and MIPS Wave Goodbye

Word on the virtual street is that Wave Computing is closing down. The company has reportedly let all employees go and will file for Chapter 11. As one of the many promising new companies in the field of AI, Wave Computing was founded in 2008 with the mission “to revolutionize deep learning with real-time AI solutions that scale from…

Artificial Intelligence in Micro-Watts: How to Make TinyML a Reality

TinyML is kind of a whimsical term. It turns out to be a label for a very serious and large segment of AI and machine learning – the deployment of machine learning on actual end user devices (the extreme edge) at very low power. There’s even an industry group focused on the topic. I had the opportunity to preview a compelling webinar about…

Linley Spring Processor Conference Kicks Off – Virtually

The popular Linley Processor Conference kicked off its spring event at 9AM Pacific on Monday, April 6, 2020. The event began with a keynote from Linley Gwennap, principal analyst and president at The Linley Group. Linley’s presentation provided a great overview of the application of AI across several markets. Almost all of the…

Edge Computing – The Critical Middle Ground

Ron Lowman, product marketing manager at Synopsys, recently posted an interesting technical bulletin on the Synopsys website entitled How AI in Edge Computing Drives 5G and the IoT. There’s been a lot of discussion recently about the emerging processing hierarchy of edge devices (think cell phone or self-driving car), cloud…

Efficiency – Flex Logix’s Update on InferX™ X1 Edge Inference Co-Processor

Last week I attended the Linley Fall Processor Conference held in Santa Clara, CA. This is the first of three blogs I will write based on what I saw and heard at the event.

In April, Flex Logix announced its InferX X1 edge inference co-processor. At that time, Flex Logix announced that the IP would be available and that a chip,…

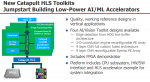

AI Hardware Summit, Report #2: Lowering Power at the Edge with HLS

I previously wrote a blog about a session from Day 1 of the AI Hardware Summit at the Computer History Museum in Mountain View, CA, held just last week. From Day 2, I want to delve into this presentation by Bryan Bowyer, Director of Engineering, Digital Design & Implementation Solutions Division at Mentor, a Siemens Business.…