Last week I attended the Linley Fall Processor Conference held in Santa Clara, CA. This is the first of three blogs I will write based on what I saw and heard at the event.

In April, Flex Logix announced its InferX X1 edge inference co-processor. At that time, the company said that the IP would be available and that a chip, InferX X1, would tape out in Q3 2019. Speaking at the fall conference, Cheng Wang, Co-founder and Senior VP of Engineering, confirmed that the chip did indeed tape out in Q3. Cheng also said that first silicon and boards would be available sometime in March 2020, that there would be a public demo in April 2020 (perhaps at the next Linley Conference?), and that mass production would begin in 2H 2020. While this means that Flex Logix is delivering on the announced schedule, Cheng’s presentation had a specific focus beyond that message. In a word: efficiency.

In engineering fields, we often use benchmarks to compare different efforts or approaches to the same problem. For finished products, these benchmarks can be straightforward. For example, we review miles per gallon, acceleration, stopping distance, and other factors when analyzing the performance of a car. For processors, benchmarking has always been a bit more difficult. I remember working with BDTi nearly 20 years ago when trying to compare the video processing performance of various processors with widely different architectures. It took an organization like BDTi to give an unbiased analysis, though it was still challenging to see how the results related to your real-world needs.

There is an increasing number of processing options now being developed and deployed for neural network inference at the edge. More and more, we see attempts to standardize the benchmarks for these solutions. One example is Stanford University’s DAWNBench, a benchmark suite for end-to-end deep learning training and inference. But reading through this information, you will still come to realize that it is your specific application that truly matters. Why look at benchmark results for “93% accuracy” if you must meet “97% accuracy”? Does ResNet-50 v1 accurately represent the model you will be running? In particular, DAWNBench ranked results based on either cost or time. As engineers, though, we typically face criteria in a different form: hard constraints and efficiency.

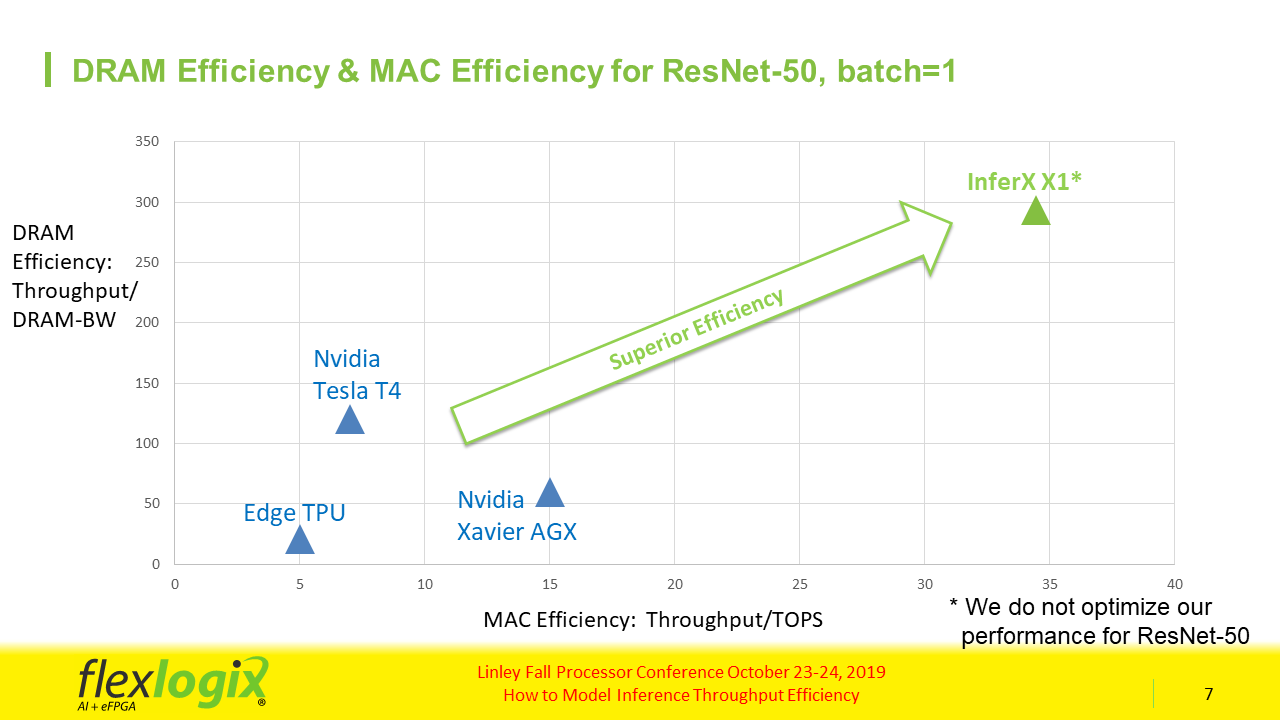

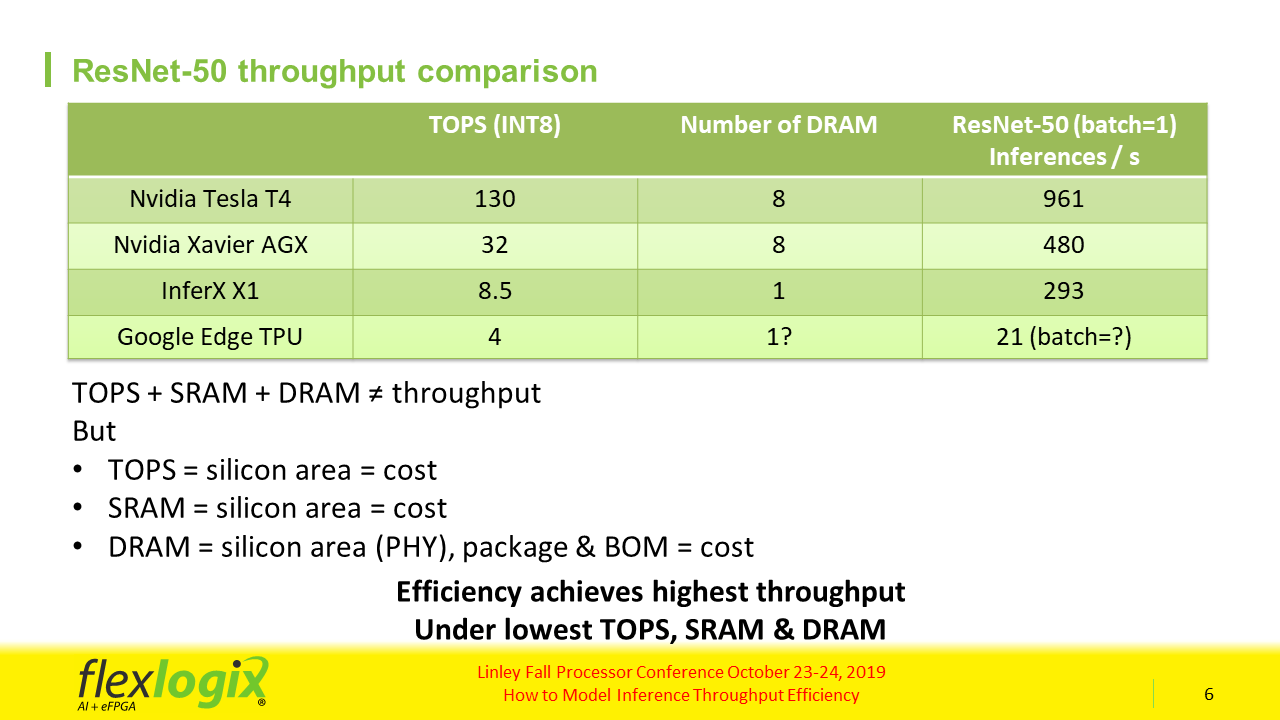

Hard constraints are easy to understand when looking at these benchmarks, as there will be simple limits for area, power, and performance. Multiple architectures may be able to meet all or most of these constraints, though perhaps not simultaneously. But to understand which approach meets them best, you need to consider efficiency: inferences per $ and inferences per watt. This way of showing performance is where Flex Logix’s InferX X1 approach seems to separate itself from the competition, at least for the devices shown. From the Flex Logix presentation at the Fall Conference:

DRAM costs money, so it is important to be efficient in your use of DRAM. If you are not considering DRAM efficiency in making your selection of IP, then you are not measuring your true costs. The DRAM requirements to hit a certain performance level are not equal between the various processors.
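To make the "hard constraints first, then efficiency" idea concrete, here is a minimal Python sketch of how such a comparison might be set up. Everything in it is an assumption for illustration: the `Candidate` fields, the `rank_candidates` helper, the constraint limits, and all of the numbers are hypothetical placeholders, not figures from the Flex Logix presentation or any vendor. The one point it tries to capture from the discussion above is that DRAM cost is counted as part of the total cost when computing inferences per $.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """One hypothetical inference solution; all fields are illustrative."""
    name: str
    inferences_per_sec: float  # measured on YOUR model, at YOUR accuracy target
    power_watts: float
    chip_cost_usd: float
    dram_cost_usd: float       # DRAM needed to reach the throughput above

    @property
    def total_cost_usd(self) -> float:
        # Count the DRAM along with the chip, since it is part of the true cost.
        return self.chip_cost_usd + self.dram_cost_usd

    @property
    def inferences_per_dollar(self) -> float:
        return self.inferences_per_sec / self.total_cost_usd

    @property
    def inferences_per_watt(self) -> float:
        return self.inferences_per_sec / self.power_watts


def rank_candidates(candidates, min_throughput, max_power_watts, max_cost_usd):
    """Drop candidates that miss a hard constraint, then rank by efficiency."""
    feasible = [
        c for c in candidates
        if c.inferences_per_sec >= min_throughput
        and c.power_watts <= max_power_watts
        and c.total_cost_usd <= max_cost_usd
    ]
    # Primary key: inferences per $; tie-breaker: inferences per watt.
    return sorted(
        feasible,
        key=lambda c: (c.inferences_per_dollar, c.inferences_per_watt),
        reverse=True,
    )


# Placeholder numbers only; they do not describe any real device.
CANDIDATES = [
    Candidate("chip_a", inferences_per_sec=100.0, power_watts=10.0,
              chip_cost_usd=50.0, dram_cost_usd=40.0),
    Candidate("chip_b", inferences_per_sec=80.0, power_watts=5.0,
              chip_cost_usd=40.0, dram_cost_usd=10.0),
    Candidate("chip_c", inferences_per_sec=200.0, power_watts=30.0,
              chip_cost_usd=100.0, dram_cost_usd=80.0),
]

ranked = rank_candidates(CANDIDATES, min_throughput=50.0,
                         max_power_watts=15.0, max_cost_usd=100.0)

for c in ranked:
    print(f"{c.name}: {c.inferences_per_dollar:.2f} inf/s per $, "
          f"{c.inferences_per_watt:.2f} inf/s per W")
```

In this made-up data set, the candidate with the highest raw throughput is eliminated by the power and cost limits, and the remaining two are ordered by efficiency rather than by peak numbers. With real parts, the throughput figure would need to come from your own model rather than from a published benchmark.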

The one thing that has been clear to me this year, especially having attended both the AI Hardware Summit and the Linley Fall Processor Conference, is that simply measuring TOPS is a waste of time. See below the information presented by Flex Logix on TOPS across a few well-known solutions. In this example, InferX X1 would seem to be a minimum of 2x more efficient than the Nvidia solutions.

The entire Linley Fall Processor Conference presentation from Flex Logix is available on their website here. It is not possible to share all the details in a blog, but I encourage you to view the entire presentation. It includes more information about how this efficiency is achieved and how to accurately predict inference performance (how Flex Logix confirmed their performance pre-silicon).
