DSP and AI are generally considered separate disciplines with different application solutions. In their early stages (before programmable processors), DSP implementations were discrete, built around a digital multiplier-accumulator (MAC). AI inference implementations also build on a MAC as their primitive. If the interconnect were programmable, could the MAC-based hardware be the same for both and still be efficient? Flex Logix says yes with its next-generation InferX reconfigurable DSP and AI IP.
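To make the shared-primitive argument concrete, here is a minimal Python sketch (not Flex Logix code) in which a direct-form FIR filter and a fully connected neural-network layer are both built from the same multiply-accumulate step. The names `mac`, `fir`, and `dense` are hypothetical, chosen only for illustration.

```python
from __future__ import annotations
from typing import Sequence


def mac(acc: int, a: int, b: int) -> int:
    """One multiply-accumulate step: returns acc + a * b."""
    return acc + a * b


def fir(samples: Sequence[int], taps: Sequence[int]) -> list[int]:
    """Direct-form FIR filter, y[n] = sum_k taps[k] * x[n - k], zero initial state."""
    out = []
    for n in range(len(samples)):
        acc = 0
        for k, h in enumerate(taps):
            if n - k >= 0:  # samples before x[0] are treated as zero
                acc = mac(acc, h, samples[n - k])
        out.append(acc)
    return out


def dense(inputs: Sequence[int], weights: Sequence[Sequence[int]]) -> list[int]:
    """Fully connected layer (no bias or activation), y[j] = sum_i W[j][i] * x[i]."""
    out = []
    for row in weights:
        acc = 0
        for w, x in zip(row, inputs):
            acc = mac(acc, w, x)
        out.append(acc)
    return out


print(fir([1, 2, 3, 4], taps=[1, 1]))           # → [1, 3, 5, 7]
print(dense([1, 2, 3], [[1, 0, 1], [2, 2, 2]]))  # → [4, 12]
```

The inner loops are identical; only the way operands are indexed differs. That is the part a programmable interconnect would have to route.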

Blocking-up tensors with a less complex interconnect

If your first thought reading that intro was, “FPGAs already do that,” you’re not alone. When tearing into something like an AMD Versal, one sees AI engines, DSP engines, and a programmable network on chip. But there’s also a lot of other stuff, making it a big, expensive, power-hungry chip that fits in only a limited number of designs able to support its needs.

And, particularly in DSP applications, the full reconfigurability of an FPGA isn’t needed. Having large numbers of routable MACs sounds like a good idea, but configuring them together dumps massive overhead into the interconnect structure. A traditional FPGA looks like 80% interconnect and 20% logic, a point most simplified block diagrams gloss over.

Flex Logix CEO Geoff Tate credits his co-founder and CTO Cheng Wang with taking a fresh look at the problem. On one side are these powerful but massive FPGAs. On the other side sit DSP IP blocks from competitors that don’t pivot from their optimized MAC pipeline to sporadic AI workloads with vastly wider and often deeper MAC fields organized in layers.

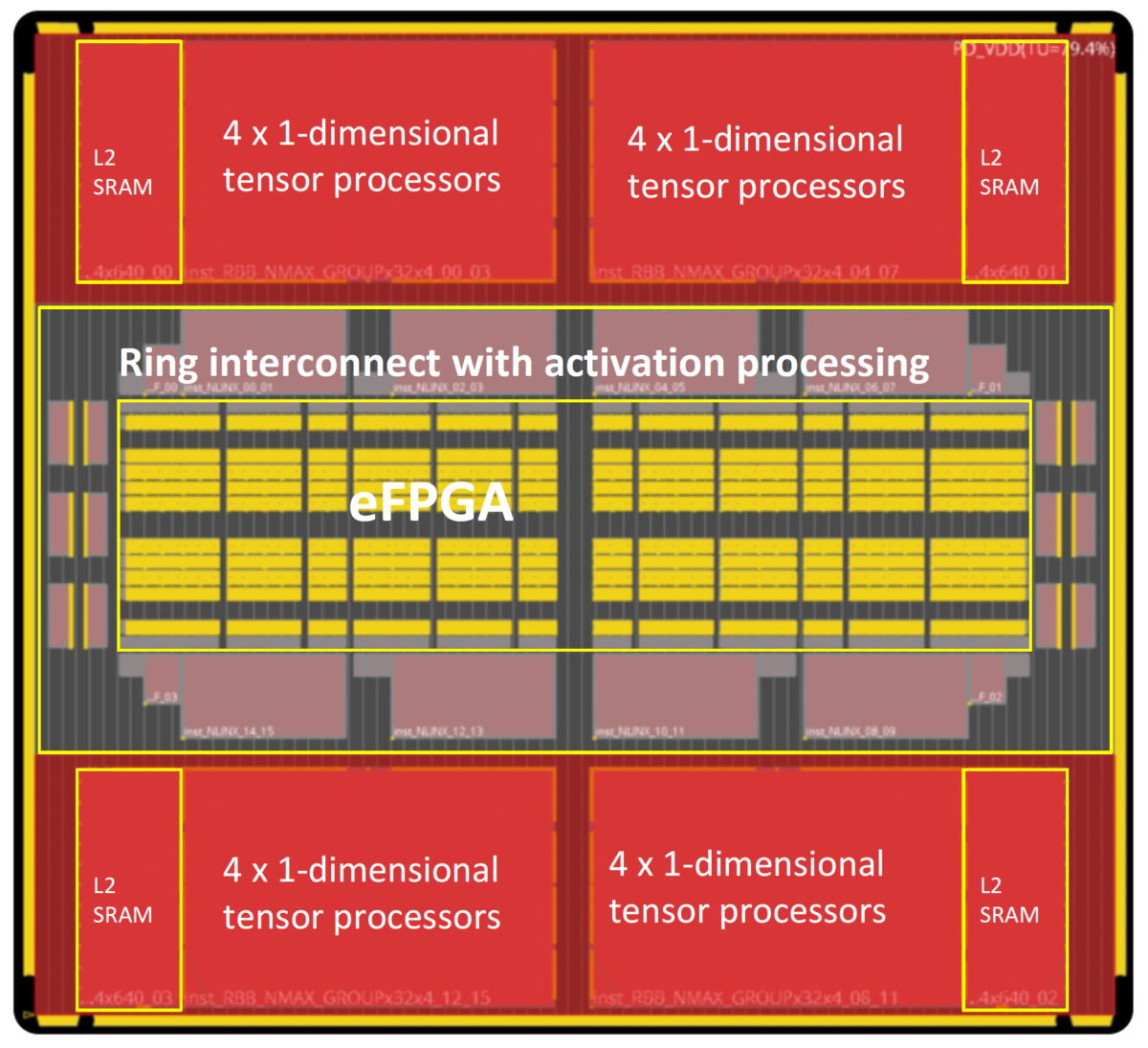

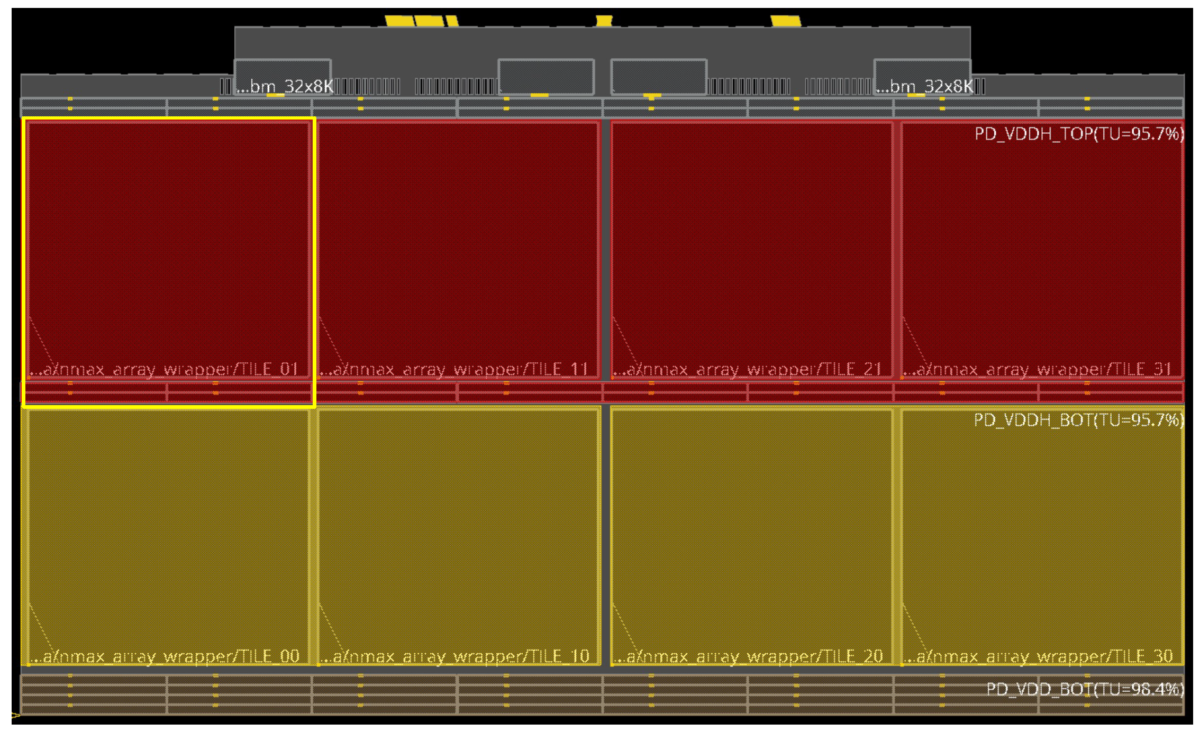

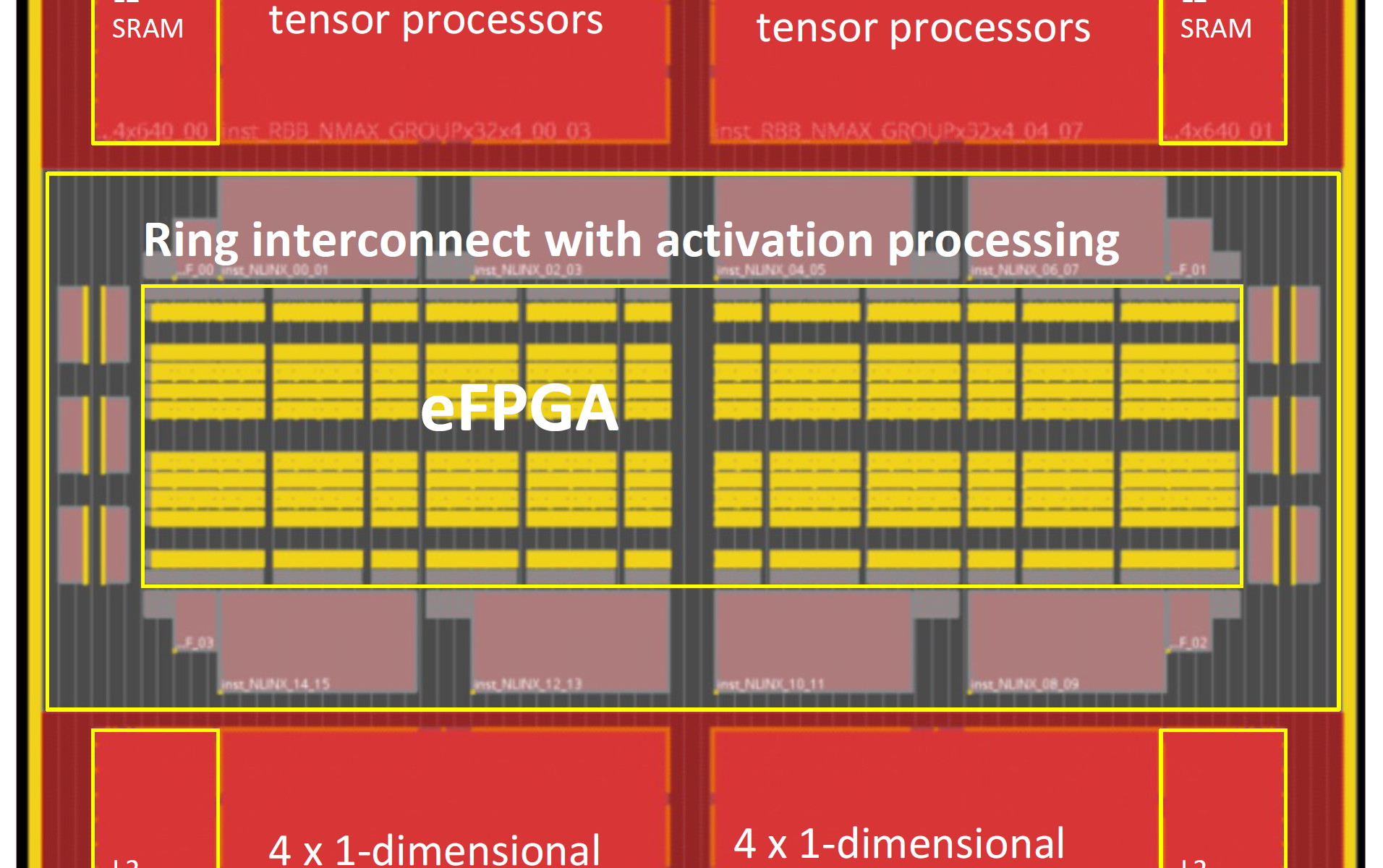

Wang’s idea: a next-generation InferX 2.5 tile built around tensor processor units (TPUs), each with eight blocks of 64 MACs (INT8 x INT8) tied to memory, plus a more efficient eFPGA-based interconnect. With 512 MACs per TPU and 8192 MACs (16 TPUs) per tile, each tile delivers 16 TOPS peak at 1 GHz. That flips the percentages: 80% of an InferX 2.5 tile is hardwired, yet it retains 100% reconfigurability. One tile in TSMC 5nm occupies a bit more than 5 mm², a 3x to 5x area improvement over competing DSP cores at equivalent DSP throughput.
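The tile figures hang together arithmetically. The sketch below recomputes them from the quoted block and MAC counts, assuming the usual convention that one MAC counts as two operations (a multiply and an add) and that every MAC is busy every cycle, which is what "peak" means here.

```python
MACS_PER_BLOCK = 64    # INT8 x INT8 MACs per block (quoted)
BLOCKS_PER_TPU = 8     # blocks per tensor processor unit (quoted)
MACS_PER_TILE = 8192   # MACs per tile (quoted)
CLOCK_HZ = 1e9         # 1 GHz


def peak_tops(macs: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak tera-operations per second with all `macs` MACs busy every cycle."""
    return macs * ops_per_mac * clock_hz / 1e12


macs_per_tpu = MACS_PER_BLOCK * BLOCKS_PER_TPU   # 512
tpus_per_tile = MACS_PER_TILE // macs_per_tpu    # 16

print(f"MACs per TPU: {macs_per_tpu}")                                      # → MACs per TPU: 512
print(f"TPUs per tile: {tpus_per_tile}")                                    # → TPUs per tile: 16
print(f"Peak INT8 TOPS per tile: {peak_tops(MACS_PER_TILE, CLOCK_HZ):.2f}")  # → Peak INT8 TOPS per tile: 16.38
```

The 16.38 result is what the article rounds to "16 TOPS." Sustained throughput on a real workload will be lower, depending on how well the mapping keeps the MACs fed.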

Software makes reconfigurable DSP and AI IP work

The above tile is the same for either DSP or AI applications – configuration happens in software.

The DSP operations a project needs are usually close to locked down before the team commits to hardware. With its software, InferX 2.5 can handle any function: FFT, FIR, IIR, Kalman filtering, matrix math, and more, at INT16×16 or INT16×8 precision. One tile delivers 4 TOPS (INT16 x INT16), or in DSP lingo 2 TeraMACs/sec, at 1 GHz. Flex Logix provides a library that handles softlogic and function APIs, simplifying application development. Another footprint-saving feature: an InferX 2.5 tile can be reconfigured in less than 3 µs, enabling a quick function change for the next pipeline step.
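Why would INT16 throughput be a quarter of INT8 throughput? Flex Logix does not say how INT16 maps onto its INT8 MACs, so the following is only a sketch of one textbook decomposition, offered as an assumption. A signed 16-bit multiply splits into four byte-wide partial products that are shifted and summed. The low bytes are unsigned, so real hardware would need multipliers that handle mixed signedness; the sketch ignores that detail. The helper names are hypothetical.

```python
from __future__ import annotations


def split16(v: int) -> tuple[int, int]:
    """Split a signed 16-bit value into (signed high byte, unsigned low byte)."""
    if not -32768 <= v <= 32767:
        raise ValueError("not a signed 16-bit value")
    return v >> 8, v & 0xFF  # arithmetic shift keeps the sign in the high byte


def mul16_via_8bit(a: int, b: int) -> int:
    """Signed 16x16 multiply built from four byte-wide partial products."""
    a_hi, a_lo = split16(a)
    b_hi, b_lo = split16(b)
    return ((a_hi * b_hi) << 16) + ((a_hi * b_lo + a_lo * b_hi) << 8) + a_lo * b_lo


# Throughput if each INT16 MAC consumes four of the tile's 8192 byte-wide MACs (assumption).
INT8_MACS_PER_TILE = 8192
PARTIALS_PER_INT16_MAC = 4
CLOCK_HZ = 1e9

int16_macs = INT8_MACS_PER_TILE // PARTIALS_PER_INT16_MAC  # 2048 per cycle
tera_macs = int16_macs * CLOCK_HZ / 1e12                    # about 2.05 TMAC/s
tops = 2 * tera_macs                                        # about 4.1 TOPS

print(mul16_via_8bit(-1234, 5678) == -1234 * 5678)  # → True
print(f"{tera_macs:.2f} TMAC/s, {tops:.2f} TOPS")   # → 2.05 TMAC/s, 4.10 TOPS
```

Under this assumption the result lines up with the quoted 2 TeraMACs/sec and 4 TOPS per tile at 1 GHz.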

AI configurations use the same tile with different Flex Logix software. INT8 precision is usually enough for edge AI inference, so a single tile and its 16 tensor units push 16 TOPS at 1 GHz. Reconfiguration in under 3 µs lets a tile switch between layers, or even entire models, almost instantly. Flex Logix AI quantization, compilers, and softlogic handle the mapping for models in PyTorch, TensorFlow Lite, or ONNX, so application developers don’t need to know hardware details to get up and running. And with reconfigurability, teams don’t need to commit to an inference model until they are ready, and they can change models as often as required during a project.
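Quantization is the step that lets a floating-point model run on INT8 MACs. The sketch below uses generic per-tensor symmetric quantization (zero-point 0) with a wide integer accumulator. It is a common textbook scheme chosen for illustration, not Flex Logix’s quantizer, whose method the article does not describe. The function names and example values are hypothetical.

```python
from __future__ import annotations


def quantize_symmetric(values: list[float], bits: int = 8) -> tuple[list[int], float]:
    """Per-tensor symmetric quantization: q = clamp(round(v / scale)), zero-point 0."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int8_dot(qa: list[int], qb: list[int]) -> int:
    """Sum of INT8 x INT8 products in a wide accumulator (INT32 in typical hardware)."""
    acc = 0
    for a, b in zip(qa, qb):
        acc += a * b
    if not -(2**31) <= acc < 2**31:
        raise OverflowError("result would not fit an INT32 accumulator")
    return acc


x = [0.5, -1.0, 0.25, 0.75]  # example activations
w = [0.2, 0.4, -0.6, 0.1]    # example weights
qx, sx = quantize_symmetric(x)
qw, sw = quantize_symmetric(w)

approx = int8_dot(qx, qw) * sx * sw  # rescale the integer result back to real units
exact = sum(a * b for a, b in zip(x, w))
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

The integer dot product differs from the floating-point one by well under one percent of full scale here, which is why INT8 is often good enough for edge inference. Production toolchains add refinements such as per-channel scales and calibration data.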

Scalability comes with multiple tiles. N tiles provide N times the performance in DSP or AI applications, and tiles can run functions independently for more flexibility. Tate says that so far no customer has needed more than eight tiles, and he points out that larger arrays are possible. Tiles can also be power managed: below, an InferX 2.5 configuration has four powered tiles and four managed tiles that can be powered down to save energy.
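The N-times scaling and the four-plus-four power-managed arrangement can be modeled with simple bookkeeping. `TileArray` below is a hypothetical class, not a Flex Logix API. It reports peak numbers only and assumes the workload partitions cleanly across tiles, ignoring memory bandwidth and inter-tile traffic.

```python
from __future__ import annotations
from dataclasses import dataclass, field

PEAK_TOPS_PER_TILE = {"int8": 16.0, "int16": 4.0}  # quoted per-tile peaks at 1 GHz


@dataclass
class TileArray:
    """Hypothetical bookkeeping for an array of InferX-style tiles."""
    total_tiles: int
    powered: set[int] = field(default_factory=set)  # indices of tiles currently powered

    def power_on(self, tile: int) -> None:
        if not 0 <= tile < self.total_tiles:
            raise IndexError(f"no tile {tile}")
        self.powered.add(tile)

    def power_off(self, tile: int) -> None:
        self.powered.discard(tile)

    def peak_tops(self, precision: str = "int8") -> float:
        """Linear scaling: N powered tiles give N times the single-tile peak."""
        return len(self.powered) * PEAK_TOPS_PER_TILE[precision]


array = TileArray(total_tiles=8)
for t in range(8):
    array.power_on(t)
print(array.peak_tops())         # → 128.0
for t in range(4, 8):            # power down the four managed tiles
    array.power_off(t)
print(array.peak_tops())         # → 64.0
print(array.peak_tops("int16"))  # → 16.0
```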

Ready to deliver more performance within SoC power and area limits

Stacking InferX 2.5 up against today’s NVIDIA baseline provides more insight. Two InferX 2.5 tiles in an SoC occupy about 10 mm² and draw less than 5 W, yet deliver the same YOLOv5 performance as a much larger, external 60 W Orin AGX. To put this in perspective, below is super-resolution YOLOv5L6 running on an SoC with InferX 2.5.
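At equal throughput, energy per inference scales directly with power, so the quoted figures imply at least a 12x energy advantage per frame. The sketch below shows that arithmetic. The 30 frames-per-second rate is a hypothetical placeholder that cancels out of the ratio. The calculation also assumes both parts run at their quoted power while inferring, and it ignores the rest of the system.

```python
def energy_per_frame_j(power_w: float, frames_per_s: float) -> float:
    """Energy spent per inference frame, in joules."""
    return power_w / frames_per_s


FPS = 30.0  # hypothetical shared frame rate; it cancels out of the ratio
inferx = energy_per_frame_j(5.0, FPS)  # upper bound, since the text says "less than 5 W"
orin = energy_per_frame_j(60.0, FPS)

print(f"energy ratio >= {orin / inferx:.0f}x")  # → energy ratio >= 12x
```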

Tate says he hears in customer discussions that transformer models are coming, possibly displacing convolutional and recurrent neural networks (CNNs and RNNs). At the same time, AI inference is moving into SoCs alongside other integrated capabilities. Uncertainty around models is high, while area and power budgets for edge AI are finite. Flex Logix says InferX 2.5 can run any model efficiently, including transformer models.

Whether the need is DSP or AI, InferX is ready for the performance, power, and area challenge. For more on the InferX 2.5 reconfigurable DSP and AI IP story, please see the following:

Press release: Flex Logix Announces InferX™ High Performance IP for DSP and AI Inference

Product pages: InferX DSP and InferX AI

Also Read:

eFPGA goes back to basics for low-power programmable logic

eFPGAs handling crypto-agility for SoCs with PQC

WEBINAR: Taking eFPGA Security to the Next Level
