Machine learning (ML) hardware architectures are evolving rapidly. The trend was first visible in datacenters, where many hyperscalers have built their own AI chips, and it is now red-hot in inference engines for the edge, each introducing new methods of its own. Markets demand these advances to handle larger image and voice recognition problems in real time, to support some level of local training, to keep processing local for privacy and security, and to reduce the power and latency cost of communicating with the fog or cloud. Product OEMs depend on those advances for differentiation in power, latency, privacy, cost and more, but they are far from expert in the underlying hardware. Without that understanding, how can they fully exploit the hardware's advantages? That’s why software rules AI success at the edge: the software that maps between open AI solutions trained in the cloud and these highly optimized hardware platforms.

FlexLogix at Linley

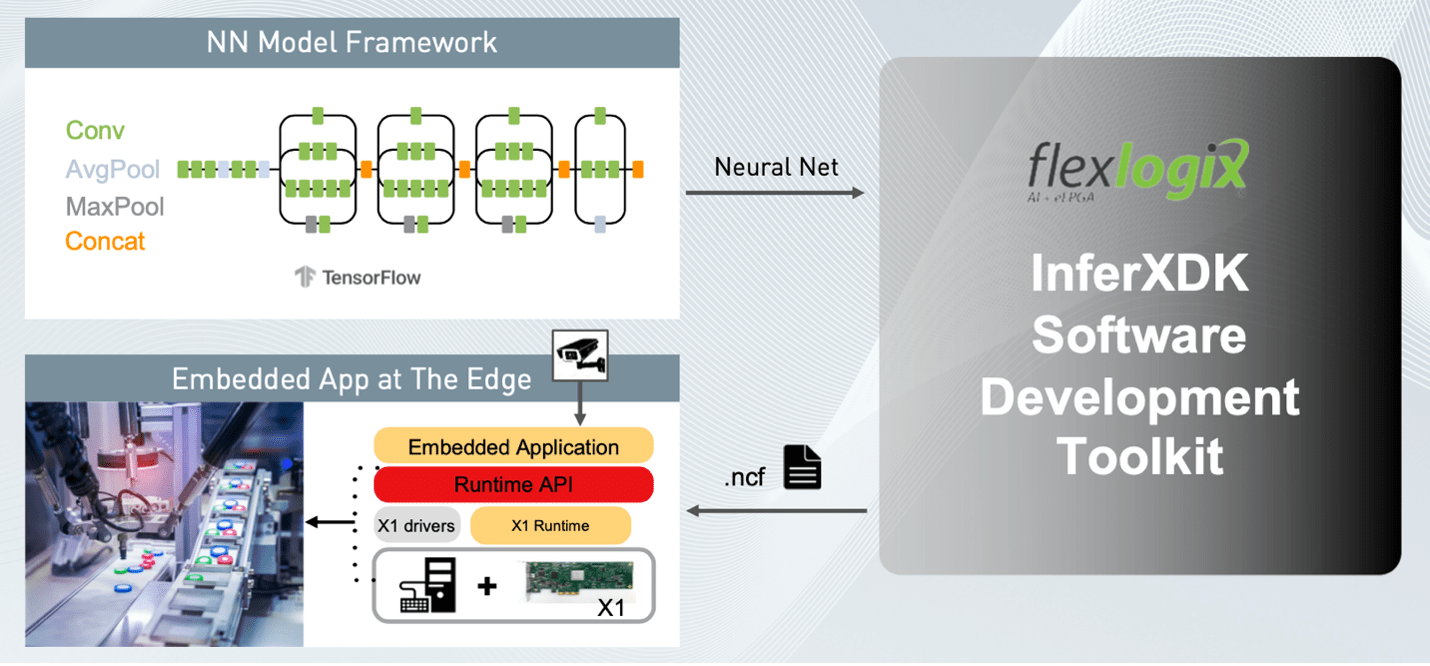

Randy Allen, VP of software at FlexLogix, addressed this question at the Linley Spring conference, using the FlexLogix InferX X1 product line as an illustration. Briefly, the heart of the X1 is a dynamically reconfigurable tensor processor, in which the hardware datapath can be rapidly reconfigured for each layer of ML processing. As one layer completes processing, the datapath is reconfigured for the next layer in microseconds. X1 offers the software flexibility of a CPU solution with the performance and power advantages of a full ASIC solution.
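To see why microsecond reconfiguration matters, here is a back-of-the-envelope sketch in Python. The layer names, the compute times and the 4 microsecond reconfiguration time are made-up numbers for illustration, not X1 measurements. The only point is that when each layer runs for hundreds of microseconds, a few microseconds of reconfiguration between layers adds very little.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    compute_us: float  # hypothetical time to run this layer once configured


def total_time_us(layers, reconfig_us):
    """Total run time when the datapath is reconfigured once before each layer."""
    return sum(reconfig_us + layer.compute_us for layer in layers)


def reconfig_fraction(layers, reconfig_us):
    """Share of the total run time spent reconfiguring rather than computing."""
    return (reconfig_us * len(layers)) / total_time_us(layers, reconfig_us)


# Hypothetical three-layer model; the numbers are assumptions, not vendor data.
layers = [Layer("conv1", 400.0), Layer("conv2", 300.0), Layer("fc", 100.0)]
total_us = total_time_us(layers, reconfig_us=4.0)
overhead = reconfig_fraction(layers, reconfig_us=4.0)

print(f"total: {total_us} us, reconfiguration share: {overhead:.1%}")
```

With these assumed numbers, reconfiguration accounts for about 1.5% of the run time. If reconfiguration took milliseconds instead, it would dominate.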

InferX is a good example of a highly optimized edge architecture: capable of amazing performance at very low power, but only if used correctly. Meeting that goal requires a compiler that knows how to map optimally from a network built in one of the standard open-source frameworks (TensorFlow, PyTorch, etc.) into the underlying hardware architecture, and how to connect back to the OEM application. From an OEM developer's point of view, the process should be completely hands-free.

You can understand then why software (the compiler) can make or break a great edge AI solution: for edge devices, fantastic hardware is useless if only experts can use it.

More detail on the FlexLogix compiler

A compiler for one of these devices is a completely different animal from a regular software compiler. In this instance it must map TensorFlow Lite or ONNX operators, produced from standard trained networks, onto a reconfigurable tensor processor in a way that maximizes throughput while minimizing power.

The compiler maps the many diverse planes in a typical network model into the tensor fabric with two constraints in mind. The first is to organize operations in the network for maximum parallelism. The second is to minimize off-chip memory traffic as much as possible, since any operation that needs to go off-chip automatically incurs significant latency and power penalties. A major consideration in these compilers is therefore finding the greatest possible reuse of data already on-chip: off-chip fetches and stores of image data, weights and activations are deferred for as long as possible. The X1 compiler works between these two constraints by scheduling, parallelizing and fusing operations, and by maximizing on-chip data reuse. Finally, it generates a bit stream for the optimized model to program the InferX device.
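As a rough illustration of why fusing operations cuts off-chip traffic, here is a minimal Python sketch. It is not the FlexLogix compiler's algorithm. It assumes a simple linear chain of operators and a single on-chip buffer, and it uses a greedy rule: an intermediate tensor stays on-chip whenever it fits. The operator names, tensor sizes and buffer size are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Op:
    name: str
    out_bytes: int          # size of the activation tensor this op produces
    weight_bytes: int = 0   # weights fetched from off-chip memory for this op


def fuse_chain(ops, on_chip_bytes):
    """Greedily group consecutive ops whose intermediate tensor fits on-chip."""
    groups, current = [], []
    for op in ops:
        # The previous op's output is the intermediate that must stay on-chip.
        if current and current[-1].out_bytes <= on_chip_bytes:
            current.append(op)
        else:
            if current:
                groups.append(current)
            current = [op]
    if current:
        groups.append(current)
    return groups


def off_chip_traffic(groups, input_bytes):
    """Each group reads its input and weights and writes only its final output."""
    traffic, group_input = 0, input_bytes
    for group in groups:
        traffic += group_input
        traffic += sum(op.weight_bytes for op in group)
        traffic += group[-1].out_bytes
        group_input = group[-1].out_bytes
    return traffic


# Hypothetical network fragment; all sizes are assumptions for illustration.
ops = [
    Op("conv1", 800_000, weight_bytes=10_000),
    Op("relu1", 800_000),
    Op("pool1", 200_000),
    Op("conv2", 400_000, weight_bytes=40_000),
    Op("relu2", 400_000),
]
unfused = off_chip_traffic([[op] for op in ops], input_bytes=150_000)
groups = fuse_chain(ops, on_chip_bytes=500_000)
fused = off_chip_traffic(groups, input_bytes=150_000)

print([[op.name for op in g] for g in groups])
print(unfused, fused)
```

In this toy model, running every operator separately moves 5,000,000 bytes off-chip, while fusing pool1, conv2 and relu2 into one group brings that down to 3,800,000. A real compiler also has to tile tensors that do not fit, schedule for parallelism and account for weight reuse, none of which this sketch attempts.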

Going deeper

You can learn more about the InferX products HERE. If you’re interested in digging deeper into the compiler technology, there’s a nice presentation by Jeremy Roberson (also at FlexLogix), from the 2021 Spring Linley conference.
