Looking backward and forward, the Codasip white paper “Scaling is Failing” by Roddy Urquhart provides an interesting history of processor development from the early 1970s to the present. However, it doesn’t stop there and goes on to extrapolate what the chip industry has in store for the rest of this decade. For the last half century, the industry has followed Moore’s Law, an observation regarding the number of transistors that can be integrated on a chip, crafted by Gordon Moore, one of the founders of Intel Corp. That observation was followed by the work of Robert Dennard of IBM Corp., who, in addition to inventing the single-transistor DRAM cell, defined the rules for transistor scaling, now known as Dennard Scaling.

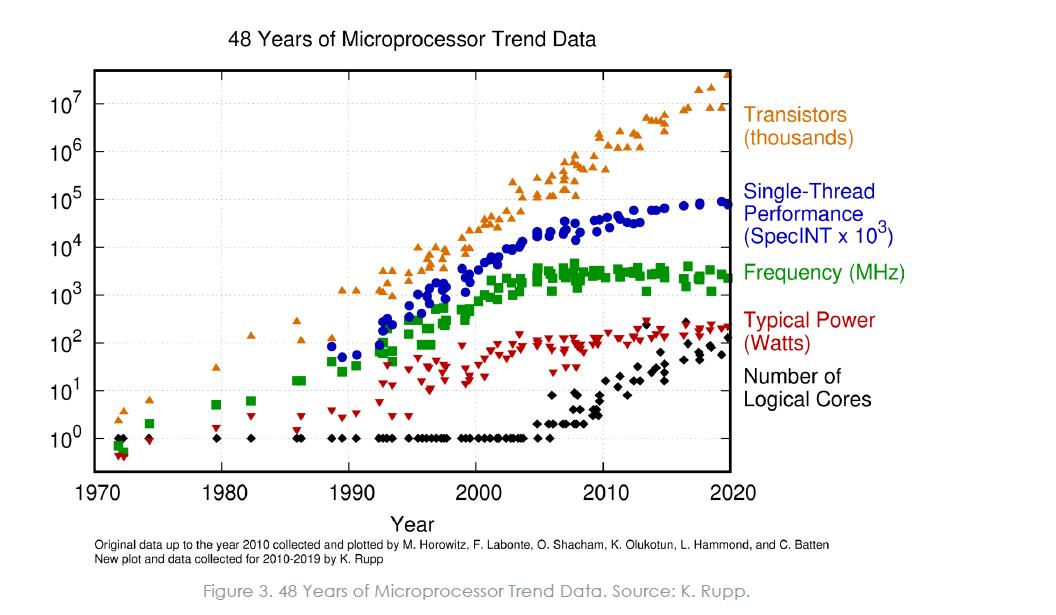

In addition to scaling, Amdahl’s Law, formulated by Gene Amdahl while at IBM Corp. in 1967, deals with the theoretical speedup possible when adding processors in parallel. Any speedup will be limited by those parts of the software that must be executed sequentially. Thus, Moore’s Law, Dennard Scaling, and Amdahl’s Law have guided the semiconductor industry over the last half century (see the figure). However, Codasip claims they are all failing, and that the industry and its processor paradigms must change in response. Some of those changes include the creation of domain-specific accelerators, customized solutions, and new companies that create disruptive solutions.
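To make the sequential-code limit concrete, here is a minimal sketch of the usual textbook form of Amdahl's Law, speedup = 1 / ((1 - p) + p / n), where p is the fraction of the work that can run in parallel and n is the number of processors. The function name and the 90% figure are illustrative assumptions, not values from the white paper.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Theoretical speedup from Amdahl's Law.

    parallel_fraction: share of the workload that can be parallelized (0..1).
    processors: number of processors working on the parallel part (>= 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part is not sped up; only the parallel part is divided by n.
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    # Hypothetical workload: 90% parallelizable, 10% strictly sequential.
    for n in (1, 2, 4, 16, 1024):
        print(f"{n:5d} processors -> speedup {amdahl_speedup(0.9, n):.2f}")
```

With a 10% sequential portion, the speedup approaches but never exceeds 10x no matter how many processors are added, which is why piling on cores eventually stops paying off.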

Supporting the paper’s premise that semiconductor scaling is failing are numerous examples in the microprocessor world. The examples start with the Intel x86 family as an illustration of how scaling failed as chip complexity and clock speed increased with each new generation of single-core CPUs. As each CPU generation’s clock frequency increased from the MHz to the GHz level thanks to the improvements in scaling, chip thermal limits became a limiting factor for performance. The performance limitation was the result of a dramatic increase in power consumption as clock speeds hit 3 GHz and higher and transistor counts approached a billion per chip. The smaller size of the transistors also resulted in increased leakage currents, and the higher leakage currents caused the chips to consume more power even when idling.

To avoid thermal runaway caused by increasing clock frequencies, designers opted for multi-core architectures, integrating two, four, or more CPU cores on a single chip. These cores could operate at lower clock frequencies, share various on-chip resources, and thus consume less power. An additional benefit of multiple cores was the ability to multitask, allowing the chip to run multiple programs simultaneously. However, the multi-core approach was not enough for CPUs to handle the myriad tasks demanded by new applications such as graphics, image and audio processing, artificial intelligence, and other functions.
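The power argument for multiple slower cores can be sketched with the standard first-order model of CMOS dynamic power, P = a · C · V² · f (activity factor, switched capacitance, supply voltage, clock frequency). All numbers below are hypothetical, chosen only for illustration. The sketch also assumes that a lower clock frequency permits a lower supply voltage; that assumption is not stated in the article, and leakage power is ignored entirely.

```python
def dynamic_power(activity: float, capacitance_f: float,
                  voltage_v: float, frequency_hz: float) -> float:
    """First-order CMOS dynamic power in watts: P = a * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical single core: 3 GHz at 1.2 V.
single_core = dynamic_power(0.2, 1e-9, 1.2, 3.0e9)

# Hypothetical dual core: each core at 1.5 GHz, assumed to run at 0.9 V.
dual_core = 2 * dynamic_power(0.2, 1e-9, 0.9, 1.5e9)

if __name__ == "__main__":
    print(f"single core: {single_core:.3f} W")
    print(f"dual core:   {dual_core:.3f} W")
```

In this model, halving the frequency and doubling the core count leaves total dynamic power unchanged if the voltage stays the same. The saving comes from the squared voltage term, so it depends on how far the supply voltage can actually be lowered at the reduced clock.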

Thus, Codasip proposes that further processor specialization will deliver considerable performance improvements: the industry must change from adapting software to execute on available hardware to tailoring computational units to match their computational load. To accomplish this, many varied custom designs will be needed, permitting companies to design for differentiation. Additionally, new approaches to processor design must be considered, especially the value of processor design languages and processor design automation.

Pointing to the modular RISC-V architecture as an example of the ability to create specialized cores and the flexibility to craft specialized instructions, Codasip sees RISC-V as an excellent starting point for tailored processing units. Cores are typically classified in one of four general categories (MCU, DSP, GPU, and AP, or application processor), with each type optimized for a range of computations, some of which may not match what is actually required by the on-chip subsystem. Some companies have already developed specialized cores (often referred to as application-specific instruction-set processors, ASIPs) that efficiently handle a narrowly defined computational workload. However, crafting such cores requires specialized skills to define the instruction set, develop the processor microarchitecture, create the associated software tool chain, and finally, verify the core.

Codasip suggests that the only way to take specialization a step further is to create innovative architectures to tackle specialized processing problems. Hardware should be created to match the software workload, which can be achieved by customizing the instruction set architecture, creating special microarchitectures, or creating novel processing cores and arrays. ASIPs can be considered a subset of domain-specific accelerators (DSAs), a category defined in a 2019 paper by John Hennessy and David Patterson, “A New Golden Age for Computer Architecture”.

They characterized DSAs as exploiting parallelism (such as instruction-level parallelism, SIMD, or systolic arrays) if the class of applications benefitted from it. DSAs can better match their computational capabilities to the intended application. One example is the Tensor Processing Unit (TPU) developed by Google, which is a systolic array working with 8-bit precision. The more specialized the processor, the greater its efficiency in terms of silicon area and power consumption. Conversely, the less specialized the DSA, the greater its flexibility. Along the DSA continuum, a core can be fine-tuned for performance, area, and power, which enables design for differentiation.

Specialization is a great opportunity, but it also means that many different designs will be created. Those designs will require a broader community of designers and a greater degree of design efficiency. Codasip sees four enablers that can contribute to efficient design: the open RISC-V ISA, processor design languages, processor design automation, and existing verified RISC-V cores for customization.

They feel that RISC-V, a free and open standard that covers only the instruction set architecture and not the microarchitecture, has garnered widespread support. Because it does not prescribe a licensing model, both commercially licensed and open-source microarchitectures are possible. If designers use a processor design language such as Codasip’s CodAL, they have a complete processor description capable of supporting software, hardware, and verification aspects. Custom instructions are implemented by adding to the processor design language source, so they are reflected in the software toolchain and verification environment as well as in the RTL.
