The Linley Group held its Fall Processor Conference 2021 last week. Companies from across the industry gave informative talks on their latest research and development work. The presentations were grouped by focus into eight sessions: Applying Programmable Logic to AI Inference, SoC Design, Edge-AI Software, High-Performance Processors, Low-Power Sensing & AI, Server Acceleration, Edge-AI Processing, and High-Performance Processor Design.

Edge-AI processing has garnered a lot of attention in recent years, and accelerators are being designed in for this important function. Flex Logix, Inc. delivered two presentations at the conference. The talk titled “A Flexible Yet Powerful Approach to Evolving Edge AI Workloads” was given by Cheng Wang, their Sr. VP Software Architecture Engineering. This presentation covered details of their InferX X1 hardware, designed to support evolving learning models, higher throughput and lower training requirements. The other talk, titled “Real-time Embedded Vision Solutions with the InferX SDK,” was given by Jeremy Roberson, their Technical Director and AI Inference Software Architect. This presentation covered details of their software development kit (SDK), which makes it easy for customers to design an accelerator solution for Edge-AI applications. The following is an integrated summary of what I gathered from the two presentations.

Market Needs and Product Requirements

The market for edge processing is growing fast, and the performance, power and cost requirements of its applications are becoming increasingly demanding. AI adoption is also pushing processing requirements toward data manipulation rather than general-purpose computing. Hardware accelerator solutions are being sought to meet the needs of a growing number of consumer and commercial applications. While an ASIC-based accelerator solution is efficient from a performance and power perspective, it doesn’t offer the flexibility to address the changing needs of an application. A CPU- or GPU-based accelerator solution is flexible but not efficient in terms of performance, power and cost. A solution that is both efficient and flexible would be a good fit for edge-AI processing applications.

The Flex Logix InferX™ X1 Chip

The InferX X1 chip is an accelerator/co-processor for the host processor. It is based on a dynamic Tensor processing approach. The Tensor array and datapath are programmed via a standard AI model paradigm described using TensorFlow. The hardware path is reconfigured and optimized for each layer of AI model processing. As a layer completes processing, the hardware is reconfigured for the next layer in microseconds. This delivers efficiency approaching that of a full-custom ASIC while providing the flexibility to accommodate new AI models. This reconfigurable hardware approach makes the chip well suited for executing new neural network model types.

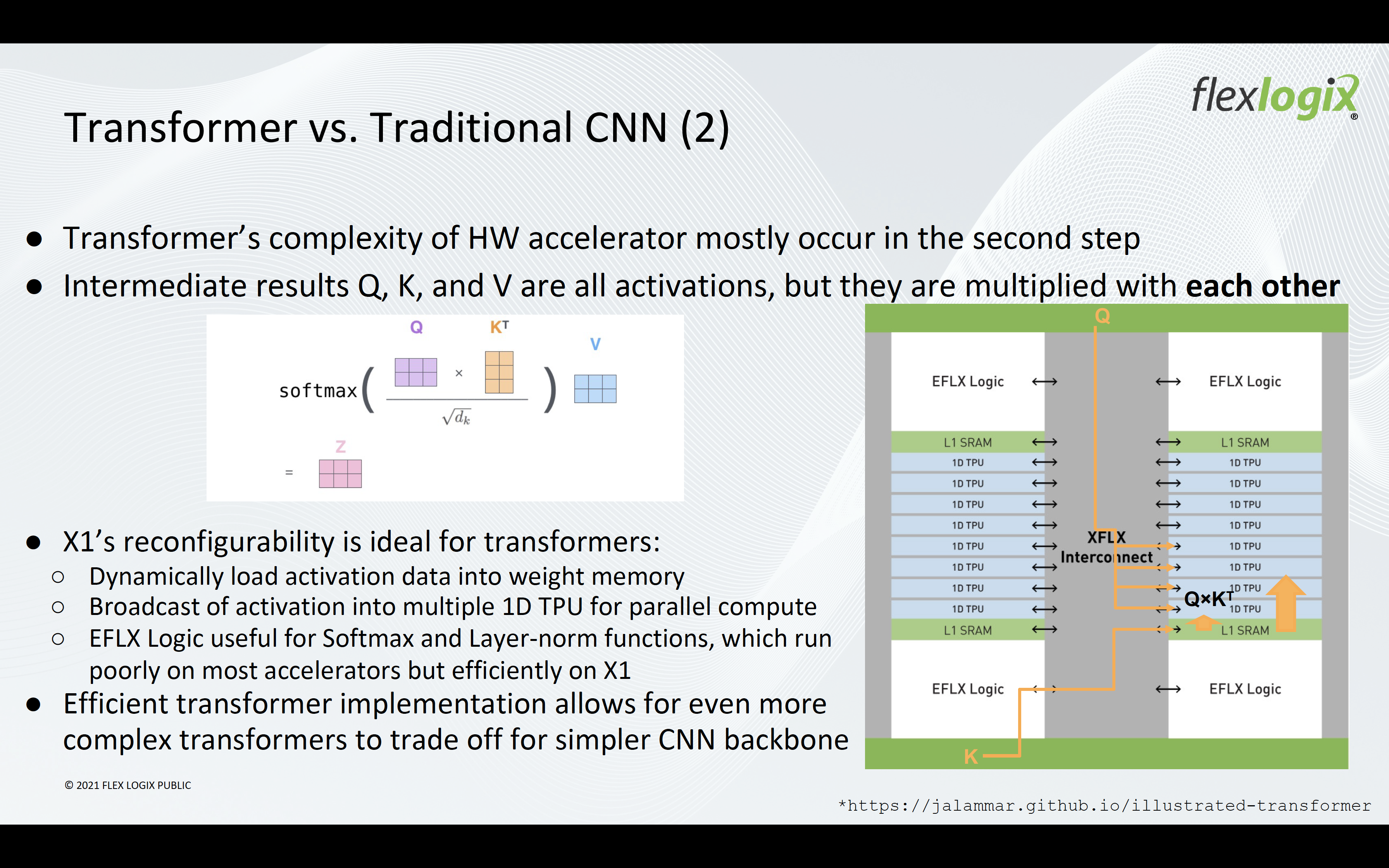

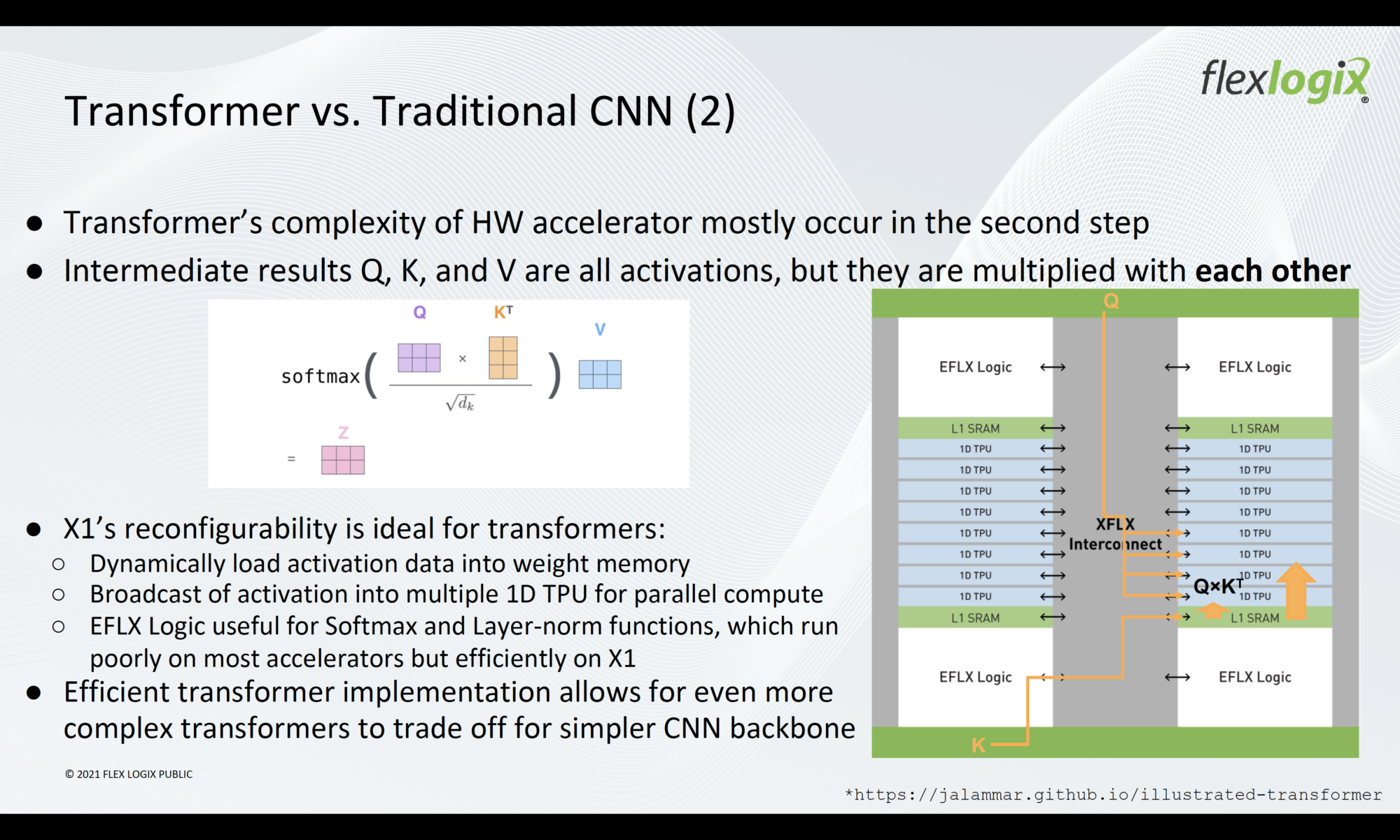

A transformer is a new type of neural network architecture that is gaining adoption due to better efficiency and accuracy for certain edge applications. But a transformer’s computational complexity far exceeds what host processors can handle. Transformers also have a very different memory access pattern than CNNs. The flexibility of the InferX technology can handle this. ASICs and other approaches (MPP, for example) may not be able to easily support the memory access requirements of transformers. The X1 can also help implement more complex transformers efficiently in exchange for a simpler neural network backbone.

The InferX X1 chip includes a huge bank of multiply-accumulate units (MACs) that do the neural math very efficiently. The hardware blocks are threaded together using configurable logic, which is what delivers the flexibility. The chip has 8MB of internal memory, so performance does not suffer from being bound by external memory. Very large network models can be run from external memory.
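To make the memory point concrete, here is a rough back-of-the-envelope sketch in Python. It assumes 8MB means 8 x 1024 x 1024 bytes, counts only convolution weights (no biases or activations), and uses made-up layer shapes; the function names are illustrative and not part of any Flex Logix tool.

```python
# Hypothetical layer shapes for illustration; not taken from any Flex Logix material.
# Assumption: "8MB" is read as 8 * 1024 * 1024 bytes.
ON_CHIP_BYTES = 8 * 1024 * 1024

def conv_weight_count(kernel, in_ch, out_ch):
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_ch * out_ch

def fits_on_chip(layers, bytes_per_weight=1):
    """Return (total_bytes, fits), counting weights only.

    bytes_per_weight=1 models INT8 weights, 4 models float32 weights.
    """
    total = sum(conv_weight_count(*layer) for layer in layers) * bytes_per_weight
    return total, total <= ON_CHIP_BYTES

# Each tuple is (kernel size, input channels, output channels).
small_net = [(3, 3, 32), (3, 32, 64), (3, 64, 128), (3, 128, 256)]
large_net = small_net + [(3, 256, 512), (3, 512, 512)] * 4

for name, net in (("small_net", small_net), ("large_net", large_net)):
    total, fits = fits_on_chip(net)
    print(f"{name}: {total} bytes of INT8 weights, fits on chip: {fits}")
```

Under these assumptions the small network's weights fit comfortably in internal memory, while the larger one would have to spill to external memory.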

Current Focus for Flex Logix

Although the InferX X1 can handle text input, audio input and generic data input, Flex Logix is currently focused on embedded vision market segments. Embedded vision applications are proliferating across multiple industries.

The InferX SDK

The SDK is responsible for compiling the model and enabling inference on the X1 Inference Accelerator.

How the Compiler Works

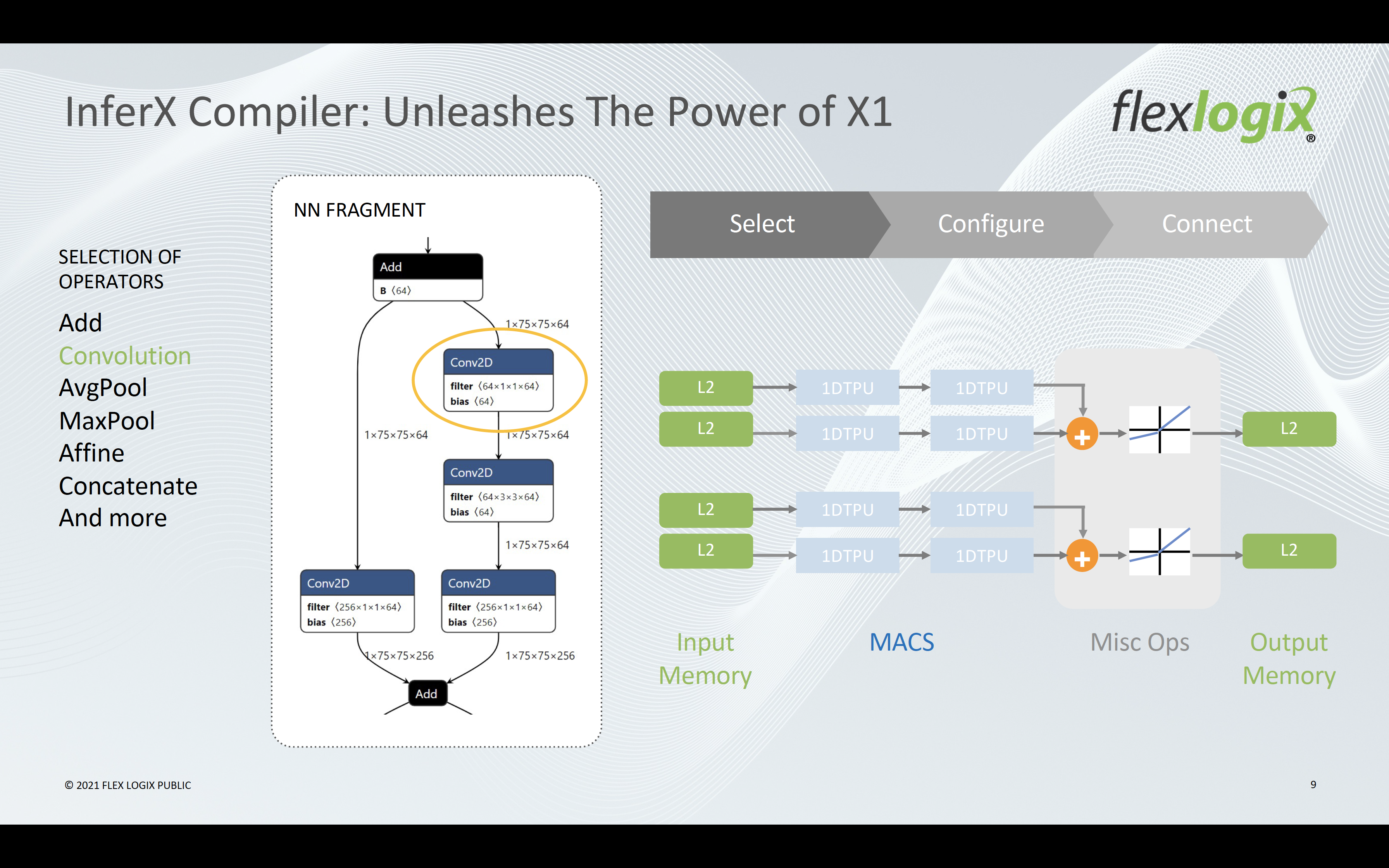

The compiler traverses the neural network layer by layer and optimizes each operator by mapping it to the right hardware on the X1. It converts a TensorFlow graph model into dynamic InferX hardware instances. It automatically selects the memory blocks and the 1D-TPU (MAC) units and connects them to each other and to other functions, such as non-linearity and activation functions. Finally, it adds and configures the output memory blocks that receive the inference results.
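The layer-by-layer traversal described above can be pictured with a toy sketch. This is not the InferX compiler: the operator names, the block names and the `compile_model` function are all hypothetical, and attaching an output memory block to every layer is a simplification.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from operator type to hardware block type.
OP_TO_BLOCK = {
    "conv2d": "tpu_1d",
    "dense": "tpu_1d",
    "relu": "activation",
    "sigmoid": "activation",
}

@dataclass
class LayerConfig:
    """One hardware configuration, i.e. the chain of blocks used by one layer."""
    name: str
    blocks: list = field(default_factory=list)

def compile_model(layers):
    """Walk the layers in order and emit one configuration per layer."""
    configs = []
    for name, ops in layers:
        cfg = LayerConfig(name)
        cfg.blocks.append("input_memory")       # memory feeding the layer
        for op in ops:
            if op not in OP_TO_BLOCK:
                raise ValueError(f"unsupported operator: {op}")
            cfg.blocks.append(OP_TO_BLOCK[op])  # map operator to a block
        cfg.blocks.append("output_memory")      # memory receiving the results
        configs.append(cfg)
    return configs

# Each entry is (layer name, operators in that layer).
model = [("layer0", ["conv2d", "relu"]), ("layer1", ["dense", "sigmoid"])]
for cfg in compile_model(model):
    print(cfg.name, "->", " -> ".join(cfg.blocks))
```

The point of the sketch is only the shape of the process: one pass over the layers, one block chain per layer, and an early error for any operator that has no hardware mapping.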

Minimal effort is required to go from model to inference results. The customer supplies just a TFLite/ONNX model as input to the compiler. The compiler converts the model into a bit file for runtime processing of the customer’s data stream on the X1 hardware.

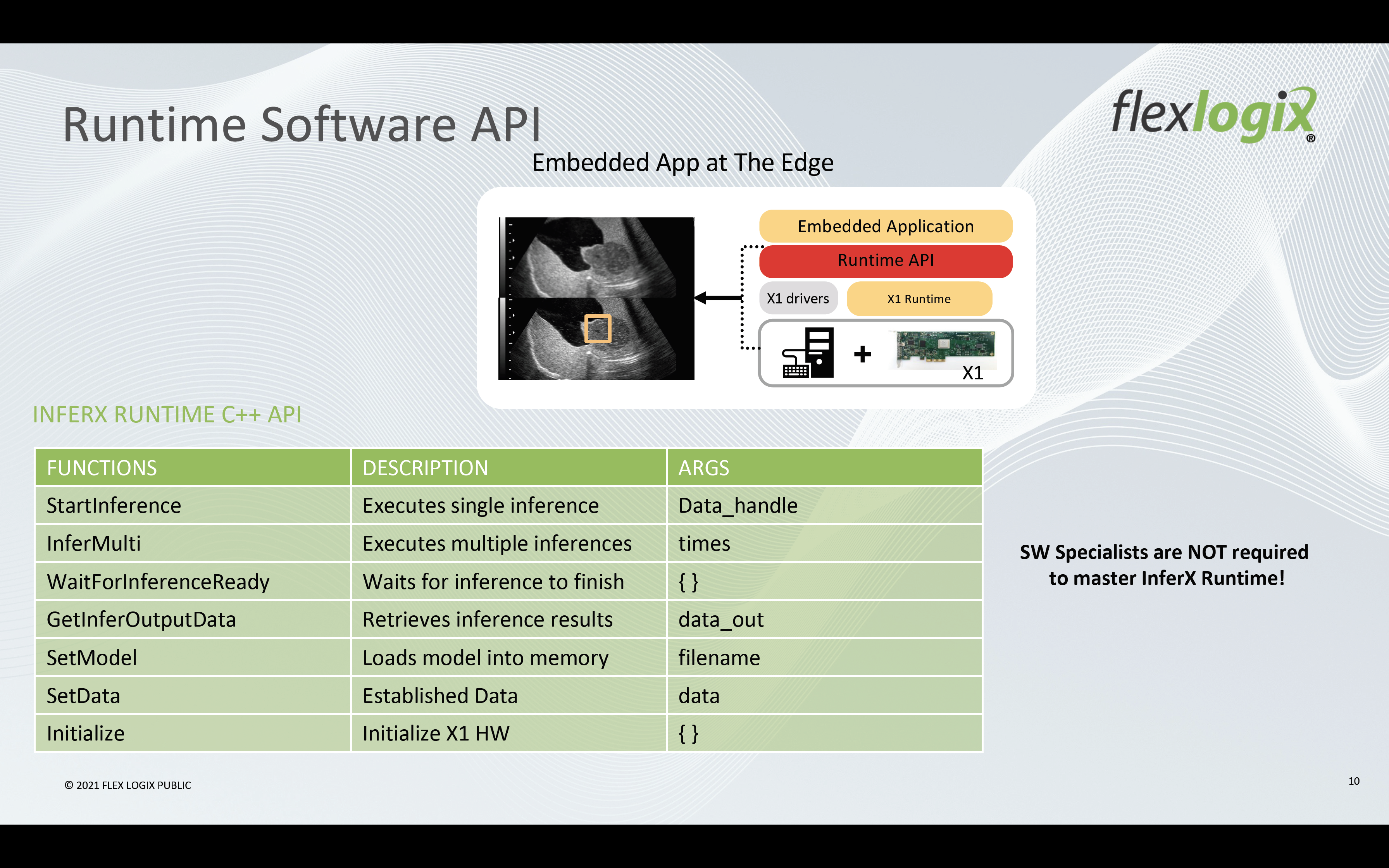

Runtime

API calls to the InferX X1 are made from the runtime environment. The API is architected to be able to handle the entire runtime specification with just a few API calls. The function call names are self-explanatory. This makes it easy and intuitive to implement.

Assuring High Quality

Each convolution operator has to be optimized differently, because the optimization depends on the channel depth. Flex Logix engages its hardware, software and apps teams to rigorously test both the usual cases and the corner cases. This diligent process confirms that the operators meet both their performance and functionality targets. Flex Logix has also quantized image de-noising and object detection models and verified an accuracy loss of less than 0.1% in exchange for a large reduction in memory requirements.
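The presentations did not detail the quantization scheme, so the following is only a generic sketch of symmetric per-tensor INT8 quantization, a common technique that may differ from what Flex Logix uses. It shows where the memory saving comes from (one byte per weight instead of four for float32) and how the per-weight rounding error is bounded. The weight values are made up, and the per-weight error shown here is not the same thing as the model-level accuracy loss quoted above.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the quantized integers."""
    return [v * scale for v in q]

# Made-up example weights.
weights = [0.5, -1.0, 0.033, 0.912, -0.447]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("worst-case rounding error:", max_error, "(bound: scale / 2 =", scale / 2, ")")
print("bytes as float32:", 4 * len(weights), "bytes as int8:", len(q))
```

Each restored weight differs from the original by at most half a quantization step, which is why a well-conditioned model can often tolerate the fourfold reduction in weight storage.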

Summary

Customers can implement their accelerator/inference solutions based on the InferX X1 chip. The InferX SDK makes it easy to implement edge acceleration solutions. Customers can optimize the solutions around their specific use cases in the embedded vision market segments. The compiler is designed to deliver high performance with minimal user intervention. The InferX Runtime API is streamlined for ease of use. The end result is CPU/GPU-like flexibility with ASIC-like performance at low power. Because of the reconfigurability, the solution is future-proofed for handling newer learning models.

Cheng’s and Jeremy’s presentations are available for download (Session 2 and Session 10).
