As the market for edge processing grows, the performance, power and cost requirements of edge applications are becoming increasingly demanding. These applications must act on data as it arrives and make decisions in real time at the user end. They span the consumer, commercial and industrial market segments.…

Tag: inferx x1

A Flexible and Efficient Edge-AI Solution Using InferX X1 and InferX SDK

The Linley Group held its Fall Processor Conference 2021 last week. Several companies gave informative talks updating the audience on the latest research and development work in the industry. The presentations were grouped by focus into eight sessions. The session topics…

Characteristics of an Efficient Inference Processor

The market opportunities for machine learning hardware are becoming more clearly defined, with the following (rather broad) categories emerging:

- Model training: models are trained at the "hyperscale" data center, using either general-purpose processors or specialized hardware, with a typical numeric precision of 32-bit

AI Inference at the Edge – Architecture and Design

In the old days, product architects would throw a functional block diagram "over the wall" to the design team, who would plan the physical implementation, analyze the timing of estimated critical paths, and forecast the signal switching activity on representative benchmarks. A common reply back to the architects was, "We've…

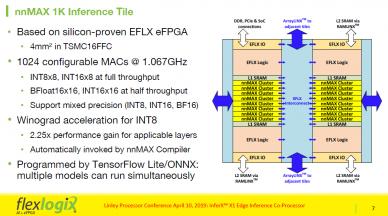

Flex Logix InferX X1 Optimizes Edge Inference at Linley Processor Conference

Dr. Cheng Wang, Co-Founder and SVP Engineering at Flex Logix, presented the second talk in the 'AI at the Edge' session at the recently concluded Linley Spring Processor Conference, highlighting the InferX X1 Inference Co-Processor's high throughput, low cost and low power. He opened by pointing out that existing inference solutions…