The market opportunities for machine learning hardware are becoming more clearly defined, with the following (rather broad) categories emerging:

- Model training: models are trained at the “hyperscale” data center, utilizing either general-purpose processors or specialized hardware, with typical numeric precision of 32-bit floating point (fp32) for weights and data

- Data center inference: user data is evaluated at the data center; for applications without stringent latency requirements (e.g., minutes, hours, or overnight); examples include: analytics and recommendation algorithms for e-commerce, facial recognition for social media

- Inference utilizing an “edge server”: a derivative of data center inference utilizing plug-in (PCIe) hardware accelerator boards in on-premises servers; for power efficiency, trained models may be downsized to employ “brain” floating point (bfloat16), which truncates the mantissa from 23 to 7 bits while keeping the same exponent range as fp32; more recent offerings include stand-alone servers solely focused on ML inference

- Inference accelerator hardware integrated into non-server edge systems: applications commonly focused on models receiving sensor or camera data requiring “real-time” image classification results – e.g., automotive data from cameras/radar/LiDAR, medical imaging data, industrial (robotic) control systems; much more aggressive power and cost constraints, necessitating unique ML hardware architectures and chip-level integration design; developers may invest additional engineering resources to further optimize the trained model down to int8 for inference weights and data, accuracy permitting (or use a network with a larger data representation for a Winograd convolution algorithm)

- Hardware specific to voice/command recognition: relatively low computational demand; extremely cost-sensitive
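To make the relationship between fp32 and bfloat16 in the list above concrete, here is a minimal Python sketch. It assumes plain truncation (dropping the low 16 bits of the fp32 pattern); actual hardware and frameworks may round rather than truncate, and the helper names are purely illustrative.

```python
import struct

def fp32_bits(x: float) -> int:
    """Return the 32-bit IEEE 754 single-precision pattern of x as an unsigned int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def to_bfloat16_bits(x: float) -> int:
    """Keep only the upper 16 bits: 1 sign bit, 8 exponent bits, 7 mantissa bits.

    Assumption: simple truncation, not round-to-nearest.
    """
    return fp32_bits(x) >> 16

def from_bfloat16_bits(b: int) -> float:
    """Expand a 16-bit bfloat16 pattern back to a float by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]

def bfloat16_truncate(x: float) -> float:
    """Round-trip a value through the truncated representation."""
    return from_bfloat16_bits(to_bfloat16_bits(x))

if __name__ == "__main__":
    for value in (1.0, 3.14159, 1e38):
        print(value, hex(to_bfloat16_bits(value)), bfloat16_truncate(value))
```

Because the 8 exponent bits are untouched, a value as large as 1e38 still fits; only the precision drops, to a relative error below 2^-7.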

Hyperscale data center hardware

The hardware opportunities for hyperscale data center training and inference are fairly well defined, although research in ML model topologies and weight and activation optimization strategies is certainly evolving rapidly. Performance for hyperscale data center operation is assessed by the overall throughput on large (static) datasets. Hardware architectures and the related software management are focused on accelerating the evaluation of input data in large batch sizes.

The computational array of MACs and activation functions is optimized concurrently with the memory subsystem for evaluation of multiple input samples – i.e., batch >> 1. For large batch sizes provided to a single network model, the latency associated with loading network weights from memory to the MAC array is less of an issue, as it is amortized across the many samples in the batch. (Somewhat confusingly, the term batch is also applied to the model training phase, in addition to inference of user input samples. In the case of training, the term batch refers to the number of supervised test cases evaluated, with error accumulated, before the model weight correction step commences – e.g., the gradient descent evaluations of weight value sensitivities to reduce the error.)
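A rough way to see why weight-loading latency matters less at large batch sizes is a toy utilization model. The sketch below assumes the worst case where weight loading is not overlapped with computation, and the timing values are hypothetical, not measurements of any real device.

```python
def mac_utilization(batch: int, weight_load_s: float, compute_per_sample_s: float) -> float:
    """Fraction of total time the MAC array is busy.

    Assumption: weights are loaded once per batch, and loading does not
    overlap with computation (a pessimistic simplification).
    """
    busy = batch * compute_per_sample_s
    return busy / (weight_load_s + busy)

if __name__ == "__main__":
    # Hypothetical numbers: 2 ms to load weights, 1 ms of MAC work per sample.
    for batch in (1, 4, 32, 64):
        print(batch, round(mac_utilization(batch, 2e-3, 1e-3), 3))
```

With these illustrative numbers, utilization is about one third at batch=1 and climbs toward 1 as the batch grows, which is the amortization effect described above.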

Machine Learning Inference Hardware

The largest market opportunities are for the design of ML accelerator cards and discrete chips for the third and fourth categories above (edge servers and non-server edge systems), due to the breadth of potential applications, both existing and yet to evolve. What are the characteristics of these inference applications? What will be the differentiating features of the chips and accelerator boards that are integrated into these products? For some insight into these questions, I recently spoke with Geoff Tate, CEO at FlexLogix, designers of the InferX X1 machine learning hardware.

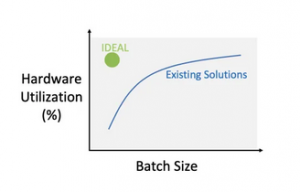

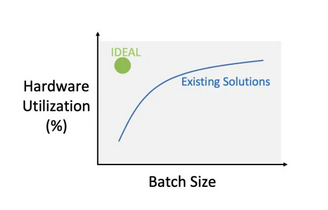

Geoff provided a very clear picture, indicating: “The market today for machine learning hardware is mostly in the data center, but that will change. The number one priority of the product developers seeking to integrate inference model support is the performance of batch=1 input data. The camera or sensor is providing real-time data at a specific resolution and sampled frame rate. The inference application requires model output classification to keep up with that data stream. The throughput measure commonly associated with data center-based inference computation on large datasets doesn’t apply to these applications. The goal is to achieve high MAC utilization, and thus, high model evaluation performance for batch=1.”

Geoff shared the following graph to highlight this optimization objective for the inference hardware (from Microsoft, Hot Chips 2018).

“What are the constraints and possible tradeoffs these product designers are facing?”, I asked.

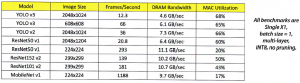

Geoff replied, “There are certainly cost and power constraints to address. A common measure is to reference the performance against these constraints. For example, product developers are interested in ‘frames evaluated per second per Watt’ and ‘frames per second per $’, for a target image resolution in megapixels and a corresponding bit-width resolution per pixel. There are potential tradeoffs in resolution and performance that are possible. For example, we are working with a customer pursuing a medical diagnostic imaging application. A reduced resolution pixel image will increase batch=1 performance, while providing sufficient contrast differentiation to achieve highly accurate inference results.”
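The figures of merit mentioned here are simple ratios, and can be sketched as follows. The class name, fields, and all numeric values are hypothetical placeholders for illustration; they do not describe any actual product.

```python
from dataclasses import dataclass

@dataclass
class InferenceOption:
    """Hypothetical description of one inference hardware option."""
    name: str
    latency_s: float   # batch=1 latency per frame at the target resolution
    power_w: float     # power drawn while running the model
    cost_usd: float    # unit cost of the chip or board

    @property
    def fps(self) -> float:
        """Frames per second at batch=1 (one frame in flight at a time)."""
        return 1.0 / self.latency_s

    @property
    def fps_per_watt(self) -> float:
        return self.fps / self.power_w

    @property
    def fps_per_dollar(self) -> float:
        return self.fps / self.cost_usd

    def keeps_up_with(self, camera_fps: float) -> bool:
        """True if batch=1 throughput meets the sensor's frame rate."""
        return self.fps >= camera_fps

if __name__ == "__main__":
    option = InferenceOption("example", latency_s=0.02, power_w=10.0, cost_usd=100.0)
    print(option.fps, option.fps_per_watt, option.fps_per_dollar)
    print(option.keeps_up_with(30.0), option.keeps_up_with(60.0))
```

Lowering the input resolution would typically shorten `latency_s`, which raises all three metrics at once; whether accuracy remains acceptable has to be checked separately.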

I asked, “The inference chip/accelerator architecture is also strongly dependent upon the related memory interface – what are the important criteria that are evaluated for the overall design?”

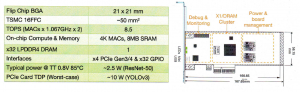

Geoff replied, “The capacity and bandwidth of the on-die memory and off-die DRAM need to load the network weights and store intermediate data results to enable a high sustained MAC utilization, for representative network models and input data sizes. For the InferX architecture, we balanced the on-die SRAM memory (and related die cost) and the external x32 LPDDR4 DRAM interface.” The figures below illustrate the inference chip specs and performance benchmark results.
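As a back-of-the-envelope illustration of why the DRAM interface matters, the sketch below estimates peak bandwidth for a 32-bit-wide interface and the time to stream a set of weights across it. The 3200 MT/s data rate and the 25 MB weight size are assumptions chosen for the example, not figures from the interview, and sustained bandwidth in practice is lower than peak.

```python
def peak_bandwidth_bytes_per_s(bus_width_bits: int, transfers_per_s: float) -> float:
    """Peak bandwidth = bytes per transfer * transfers per second."""
    return bus_width_bits / 8 * transfers_per_s

def weight_load_time_s(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to stream all weights once, ignoring protocol overhead."""
    return weight_bytes / bandwidth_bytes_per_s

if __name__ == "__main__":
    # Assumed: x32 interface at 3200 mega-transfers per second.
    bw = peak_bandwidth_bytes_per_s(32, 3200e6)
    # Assumed: a model with 25 MB of int8 weights.
    t = weight_load_time_s(25e6, bw)
    print(f"{bw / 1e9:.1f} GB/s peak, {t * 1e3:.2f} ms to load weights")
```

Under these assumptions, reloading all weights from DRAM costs roughly 2 ms, which is significant next to a batch=1 frame budget of a few tens of milliseconds; this is the motivation for keeping enough on-die SRAM to avoid repeated reloads.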

“Another tradeoff in accuracy versus performance is that a bfloat16 computation takes two MAC cycles compared to an int8 model.”
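The accuracy side of this tradeoff can be illustrated with a minimal sketch of int8 weight quantization. It assumes symmetric, per-tensor quantization with a single scale factor, which is only one of several common schemes and not necessarily what the InferX toolchain uses; the function names are illustrative.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to the range [-127, 127].

    Assumption: one scale for the whole tensor, derived from the largest
    absolute weight.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate floating-point values."""
    return [v * scale for v in q]

if __name__ == "__main__":
    w = [0.8, -1.0, 0.003, 0.25]
    q, scale = quantize_int8(w)
    restored = dequantize_int8(q, scale)
    for original, code, approx in zip(w, q, restored):
        print(original, code, approx, abs(original - approx))
```

Each restored weight differs from the original by at most half a quantization step (`scale / 2`); whether that error is acceptable depends on the network and must be validated against the accuracy target.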

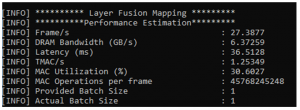

I then asked Geoff, “A machine learning model is typically represented in a high-level language, and optimized for training accuracy. How does a model developer take this abstract representation and evaluate the corresponding performance on inference hardware?”

He replied, “We provide an analysis tool to customers that compiles their model to our inference hardware and provides detailed performance estimation data – this is truly a critical enabling technology.” (See the figure below for a screenshot example of the performance estimation tool.)

The FlexLogix InferX X1 performance estimation tool is available now. Engineering samples of the chip and PCIe accelerator card will be available in Q2’2020. Additional information on the InferX X1 design is available at this link.

-chipguy
