In April, I covered a new AI inference chip from Flex Logix. Called InferX X1, this part had some very promising performance metrics. Rather than the data center, the chip focused on accelerating AI inference at the edge, where power and form factor are key metrics for success. The initial information on the chip was presented at the Spring Linley Processor Conference. At that event, the part was reported to be taping out to TSMC’s 16FFC process soon. Fast forwarding to today, Flex Logix has the part back from the fab. Cheng Wang, SVP and co-founder at Flex Logix, recently presented silicon results at the Linley Fall Processor Conference. Flex Logix also issued a press release announcing working silicon for the part. All of this provides technical backing for the claim that Flex Logix brings AI to the masses with InferX X1.

To examine the strategy and technical details behind InferX X1, I had the opportunity to speak with Geoff Tate, CEO and co-founder at Flex Logix. Geoff has quite a storied career in semiconductors. Prior to co-founding Flex Logix, Geoff became a director at Everspin, a leading MRAM company. He continues to sit on this Board. Prior to that, Geoff was the founding CEO of Rambus, where he took the company to IPO and a $2B market cap. Before co-founding Rambus, he was SVP of microprocessors and logic at AMD.

Geoff explained that the chip came back from TSMC about a month ago. It’s running in the lab and the team is in the process of bringing up YOLOv3, a popular object detection algorithm. There is significance to this choice of algorithm. More on that in a moment. Geoff discussed throughput per dollar as a key metric. He reminded me this part is not for data centers. Rather, it is focused on “systems in the real world” where the metrics are quite different. Think of applications such as ultrasound, MRI, factory and retail distribution robots, all kinds of cameras, autonomous vehicles, gene sequencing and industrial inspection. Edge computing is used to describe some of these applications, but the targets for the InferX X1 are larger than that.

Geoff explained that he’s spoken with companies in all these application areas and more, and everyone wants more performance at a lower price. Performance drives the ability to add features, and price drives the ability to expand the market. InferX X1 delivers more performance at a lower price with no compromises. Latency is also low and accuracy is very high; Flex Logix is not cutting corners. This profile of capabilities is supported by a very small die size: 54 mm² in TSMC 16FFC. Total design power is 7 to 13 W. Note this power spec is for worst-case conditions (max junction temperature, max voltage, worst-case process). This is a small, low-power, high-performance part.

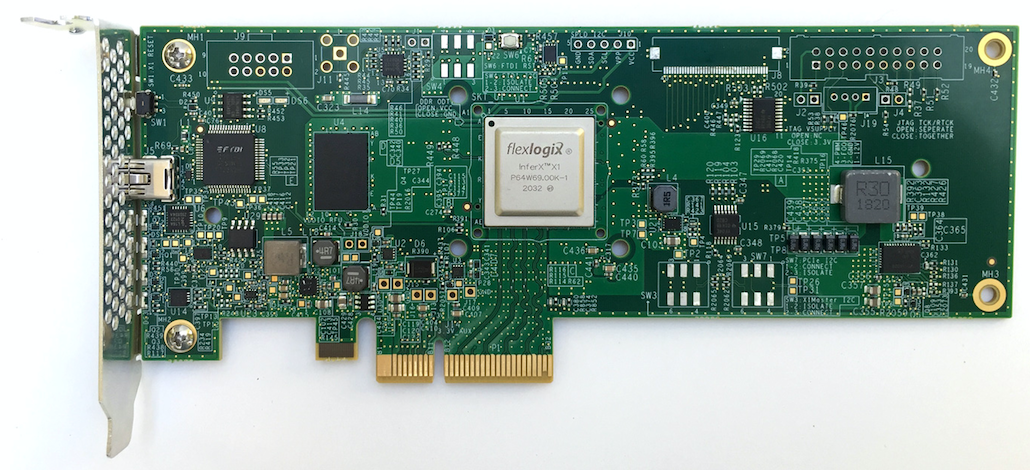

The chip is populated with Tensor processors that do the inference work. Geoff explained that Flex Logix also provides PCIe boards for those applications that have a form factor that requires this additional hardware. These boards can also be used to do software development on the chip before deploying it in the system. More on software in a bit.

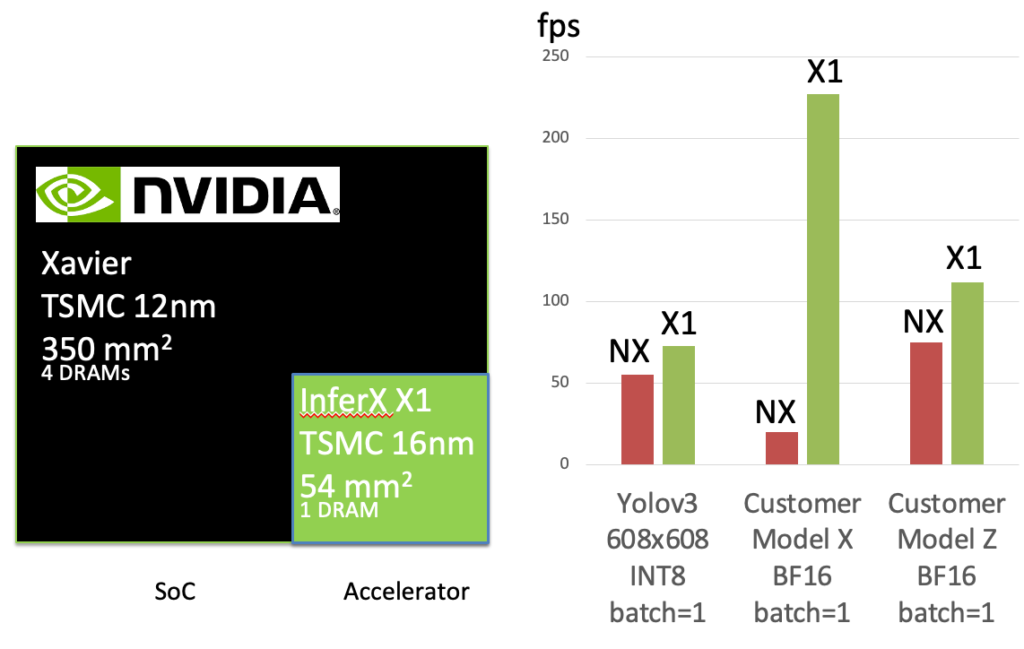

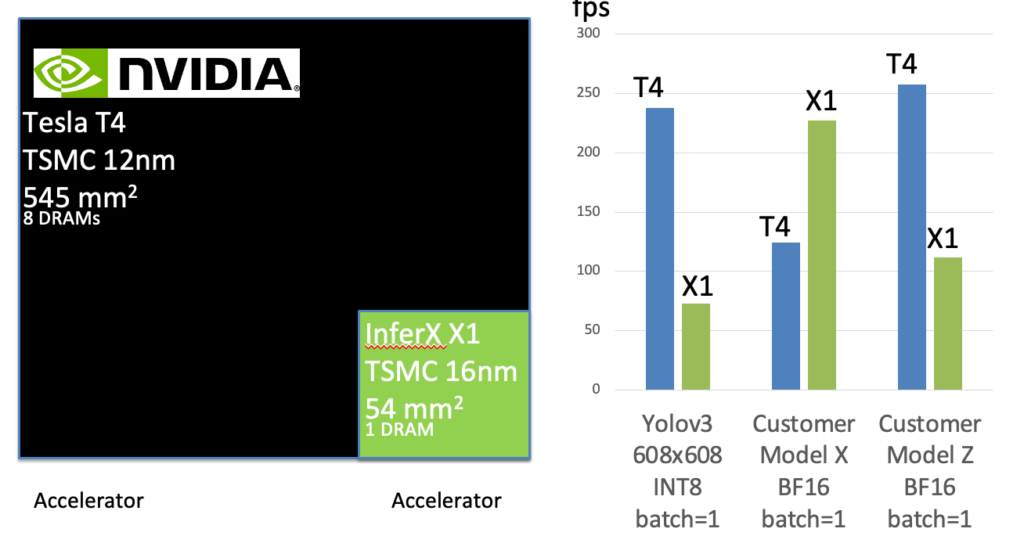

We then discussed benchmarking. The vast majority of applications today use Nvidia’s Xavier NX or Tesla T4 for edge inference. The following analyses use two customer models and YOLOv3. The use of YOLO is significant in that many customers use this model in their real applications. That makes it a much more relevant model for benchmarking, since it performs real-world workloads on data of significant size. Other benchmark models are smaller in scale and so don’t really stress the architecture.

First, Geoff reviewed InferX X1 vs. Xavier NX, the smaller of the Nvidia products. Here, the Flex Logix part delivered up to 11X performance gains with a much smaller and less expensive part. Regarding size, Geoff pointed out that Xavier is an SoC that contains other IP such as Arm processors. Comparing just the accelerator portions of the two designs, the Flex Logix part is about 3X smaller.

Next, InferX X1 was compared to the larger Nvidia Tesla T4. This device is a pure accelerator like the Flex Logix part, so the comparison is more direct. Not surprisingly, the very large Tesla T4 outperformed the InferX X1 in most cases. Interestingly, there was one case where the smaller Flex Logix part outperformed the T4.

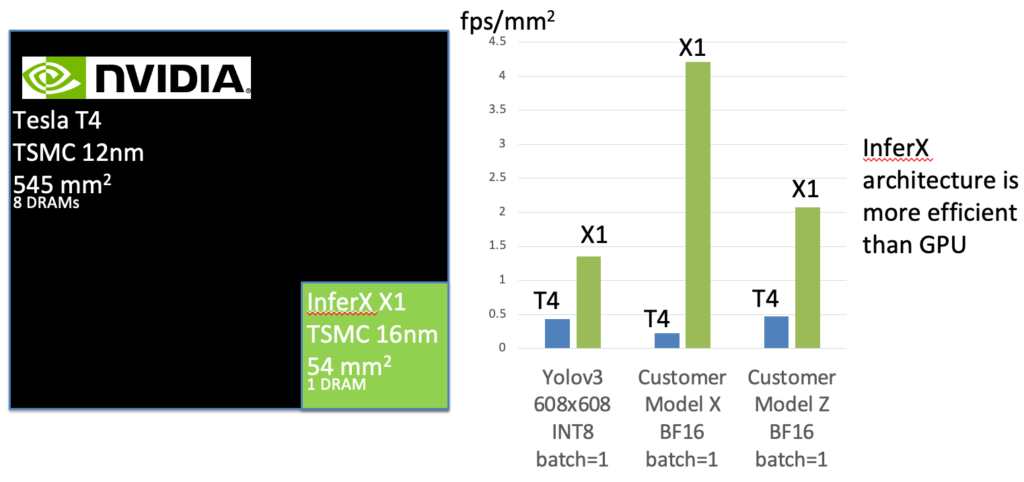

The real insight came when the data was presented in a normalized fashion, that is, as performance per mm². This analysis shows how much more efficient the InferX X1 is compared to the Tesla T4: 3X to 18X, depending on the model.
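To make the normalization concrete, here is a minimal sketch of how a performance-per-mm² comparison can be computed. The function names and the throughput and die-area figures in the example are hypothetical placeholders chosen for illustration; they are not Flex Logix or Nvidia measurements.

```python
def perf_per_mm2(throughput_fps: float, die_area_mm2: float) -> float:
    """Normalize raw throughput (e.g. frames per second) by die area."""
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    return throughput_fps / die_area_mm2


def efficiency_ratio(fps_a: float, area_a: float,
                     fps_b: float, area_b: float) -> float:
    """How many times more throughput per mm² chip A delivers than chip B."""
    return perf_per_mm2(fps_a, area_a) / perf_per_mm2(fps_b, area_b)


# Hypothetical example: a small chip with half the raw throughput of a
# chip ten times its size. All four numbers below are made up.
small_fps, small_area = 50.0, 54.0
large_fps, large_area = 100.0, 540.0

ratio = efficiency_ratio(small_fps, small_area, large_fps, large_area)
print(f"small chip is about {ratio:.1f}x more area-efficient")
```

The point of the sketch is that a part can lose on raw throughput and still win by a wide margin once throughput is divided by silicon area, which is a rough proxy for cost.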

I probed Geoff on the reasons for such a dramatic difference. He reminded me the underlying architecture of the InferX X1 is much more reconfigurable than a hardwired part and the Tensor processors are finer grained. Remember, Flex Logix is also an FPGA company. This is a key part of their architecture’s competitiveness. Another differentiator is the high precision offered by the part. Full precision is used for all operations; there are no shortcuts. Geoff pointed out that some of their customers are doing ultrasound imaging in a clinical setting. Accuracy really matters here.

There are many more enhancements to the architecture that support high throughput and high precision. You can learn more about the InferX X1 on the Flex Logix website.

We concluded with a discussion of software support. Geoff explained that the compiler for InferX X1 is very easy to use. The compiler reads in a high-level representation of the algorithm in either TensorFlow Lite or ONNX. Most algorithm models are expressed in one of these formats. The compiler then makes all the decisions about how to optimally configure the InferX X1 for the target application, including items such as memory management. In a matter of minutes, an executable is available to run on the part. There is a lot of optimization going on during the compile process. Geoff described multi-pass analyses for things like memory optimization. Flex Logix appears to have optimized this part of the process very well.

The pricing for InferX X1 is very aggressive and supports very high volumes and associated discounts. I talked with Geoff about that strategy. It turns out Flex Logix is not only pricing InferX X1 to be competitive in the current market; the pricing will also support new and emerging markets that require very high volumes. These new markets will be enabled by a device that is as small, low power, high precision and high throughput as InferX X1. Flex Logix brings AI to the masses with InferX X1, and that will open new markets and change history.
