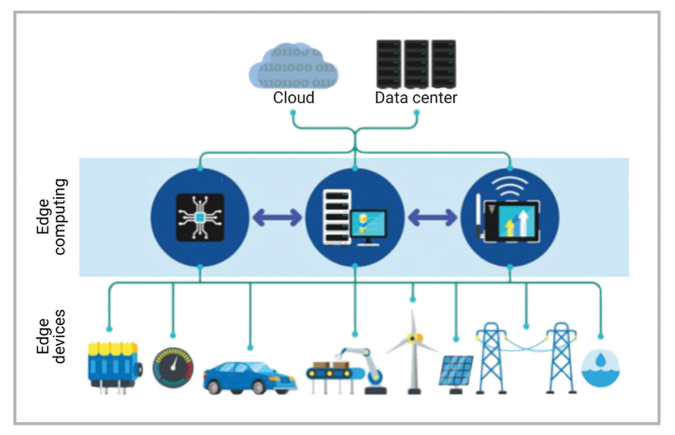

Ron Lowman, product marketing manager at Synopsys, recently posted an interesting technical bulletin on the Synopsys website entitled “How AI in Edge Computing Drives 5G and the IoT.” There’s been a lot of discussion recently about the emerging processing hierarchy of edge devices (think cell phone or self-driving car), cloud computing (think Google, Amazon or Microsoft) and the newest middle ground in between (edge computing). The current deployment of 5G networks makes it possible to generate much more data than ever before, and the question of how and where that data will be processed makes the processing hierarchy even more important.

Some facts are in order. First, an observation from me: please don’t think 5G is for your cell phone. While your carrier will likely make a big deal about 5G reception, that isn’t the primary use of this technology; your cell phone is plenty fast already. 5G holds the promise of wirelessly linking many other data sources in a high-bandwidth, low-latency way. This is part of the promise of IoT. A quote from Ron’s piece helps drive home the point:

“By 2020, more than 50 billion smart devices will be connected worldwide. These devices will generate zettabytes (ZB) of data annually growing to more than 150 ZB by 2025.” (Data courtesy of Cisco.)

A little perspective is in order. A zettabyte is a billion terabytes, or 1,000,000,000,000,000,000,000 bytes if you prefer the long form. According to Wikipedia, in 2012 there was upwards of 1 zettabyte of data in existence in the world. So, a 150-fold increase, just from edge devices, is kind of daunting. Given this situation, when you consider the traditional model of IoT (edge device) data processing in the cloud, a few problems come up. Ron’s article provides this catalog of issues:

- 150 ZB of data will create capacity issues if it is all processed in a small number of places

- Transmitting that much data from its location of origin to centralized data centers is quite costly, in terms of energy, bandwidth, and compute power

- Power consumption of storing, transmitting and analyzing data is enormous
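To put the 150 ZB figure in perspective, here is a minimal back-of-the-envelope sketch. It assumes decimal (SI) units, a 365-day year, and data generated evenly across the year; the even-rate assumption and the function name are mine for illustration and do not come from Ron’s bulletin.

```python
# Decimal (SI) storage units, expressed in bytes.
TERABYTE = 10**12
PETABYTE = 10**15
ZETTABYTE = 10**21

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: a 365-day year


def average_rate_bytes_per_second(zettabytes_per_year):
    """Average byte rate if the data were spread evenly over the year (an assumption)."""
    return zettabytes_per_year * ZETTABYTE / SECONDS_PER_YEAR


# How many terabytes fit in one zettabyte.
terabytes_per_zettabyte = ZETTABYTE // TERABYTE

# Average rate implied by 150 ZB generated in one year.
rate = average_rate_bytes_per_second(150)

print(f"1 ZB = {terabytes_per_zettabyte:,} TB")
print(f"150 ZB/year is roughly {rate / PETABYTE:.2f} PB/s on average")
```

Under those assumptions, 150 ZB per year averages out to several petabytes every second, which gives a feel for why shipping all of it to a handful of data centers runs into capacity, bandwidth and power limits.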

Regarding the second point, Ron goes on to report estimates that only 12% of current data is even analyzed by the companies that own it, and only 3% of that data contributes to any meaningful outcomes. So, an effective way to reduce cost and waste is clearly needed. Edge computing holds great promise for dealing with these issues by decentralizing the processing task, essentially bringing it closer to the data source. More benefits reported by Ron include:

- Enable network reliability as applications can continue to function during widespread network outages

- Potential security improvements by eliminating some threat profiles such as global data center denial of service (DoS) attacks

- Provide low latency for real-time use cases such as virtual reality arcades and mobile device video caching

The last point is quite important. Ron points out that “cutting latency will generate new services, enabling devices to provide many innovative applications in autonomous vehicles, gaming platforms, or challenging, fast-paced manufacturing environments.” While local processing can go a long way to reduce waste and cost, more efficient methods are also important. Ron points to AI as a critical enabler for this to happen.

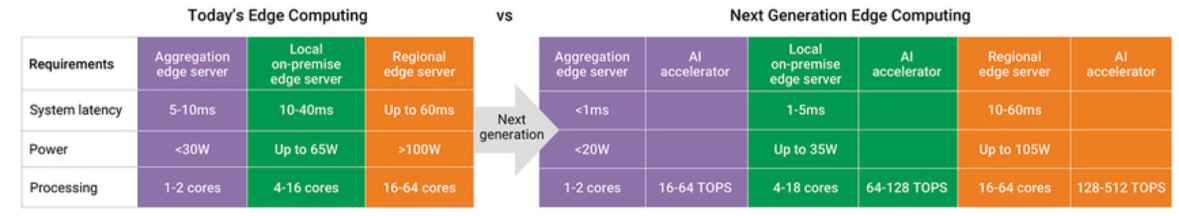

With this backdrop, Ron explores various edge computing use cases, market segments and the impact all this will have on server system SoCs. One use case described by Ron centers on the Microsoft HoloLens. It’s a fascinating case study of augmented reality and its demands for low latency and low power. Ron then talks about the power, processing and latency requirements of the various edge computing segments. If you think there’s one edge computing scenario, think again. The piece concludes with a discussion of the impact all this will have on server system SoCs. AI accelerators are a key piece of this discussion.

If any of this gets your attention, I strongly recommend reading Ron’s complete technical bulletin. There is a lot of compelling detail there regarding AI and the edge. For the chip designers out there, I’ll leave you with one excerpt from Ron’s piece that summarizes the challenges and opportunities of the next generation of edge computing.
