Key Takeaways

- Part 1 of the series discusses the complexities of developing software stacks on System on Chip (SoC) or Multi-die systems.

- It highlights the importance of software verification and validation in modern SoC designs, which is critical due to the inherent presence of errors in new code.

- The article outlines the various layers of a software stack in SoC, including Bare Metal Software, Operating System, Middleware, Drivers, and Application Layer.

Part 1 of this 4-part series introduces the complexities of developing and bringing up the entire software stack on a System on Chip (SoC) or Multi-die system. It explores various approaches to deployment, highlighting their specific objectives and the unique challenges they address.

Introduction

As the saying goes, it’s tough to make predictions, especially about the future. Yet, amid the fog of uncertainty, a rare prescient vision in the realm of technology stands out. In 2011, venture capital investor Marc Andreessen wrote an opinion piece for The Wall Street Journal titled “Why Software Is Eating The World.” Andreessen observed that the internet’s widespread adoption took roughly a decade to truly blossom, and predicted that software would follow a similar trajectory, revolutionizing the entire human experience within ten years.

His foresight proved remarkably accurate. In the decade following Andreessen’s article, software’s transformative power swept through established industries, leaving a lasting impact. From industrial and agricultural sectors to finance, medicine, entertainment, retail, healthcare, education, and even defense, software reshaped landscapes and disrupted traditional models. Those slow to adapt faced obsolescence. Indeed, software has been eating the world.

This rapid software expansion lies at the core of the challenges in developing and delivering fully validated software for modern SoC designs.

The Software Stack in a Modern SoC Design

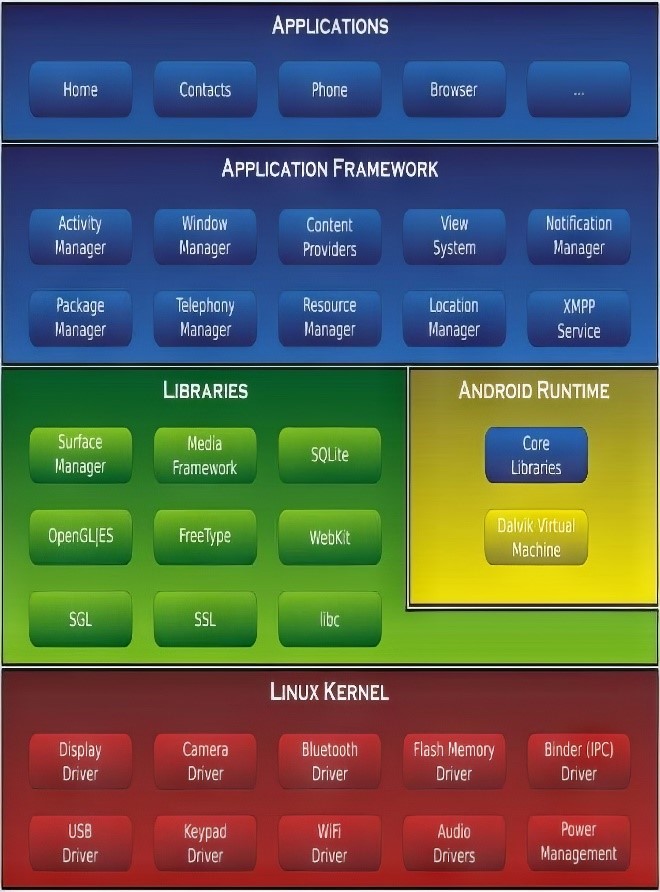

In a modern System on Chip (SoC), the software is structured as a stack of several layers, each serving a specific purpose to ensure efficient operation and functionality:

1) Bare Metal Software and Firmware:

- Bare Metal Software: Specialized programs that are loaded into memory upon startup and run directly on the hardware without an underlying operating system (OS). This software interacts directly with the hardware components.

- Firmware: Low-level software that initializes hardware components and provides an interface for higher-level software. It is critical for the initial boot process and hardware management.

2) Operating System (OS):

- The OS is the core software layer that manages hardware resources and provides services to application software.

3) Middleware:

- Middleware provides common services and capabilities to applications beyond those offered by the OS. It includes libraries and frameworks for communication, data management, and device management, as well as dedicated security components such as secure boot, cryptographic libraries, and trusted execution environments (TEEs) that protect against unauthorized access and tampering.

4) Drivers and HAL (Hardware Abstraction Layer):

- Device Drivers: These are specific to each hardware component, enabling the OS and applications to interact with hardware peripherals like GPUs, USB controllers, and sensors.

- HAL: Provides a uniform interface for hardware access, isolating the upper layers of the software stack from hardware-specific details. This abstraction allows for easier portability across different hardware platforms.

5) Application Layer:

- This top layer consists of the end-user applications and services that perform the actual functions for which the SoC is designed. Applications might include user interfaces, data processing software, and custom applications tailored to specific tasks.

Figure 1 captures the structure of the most frequently used software stack in modern SoC design.
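The separation between the application layer, the HAL, and a device driver can be illustrated with a minimal sketch. Everything in it is hypothetical: the `UartHal` interface, the `FakeSocUart` driver, and the register offset are invented for illustration and do not correspond to any real SoC or library. Python is used only to keep the example short and runnable; production drivers are typically written in C or a similar language.

```python
from abc import ABC, abstractmethod


class UartHal(ABC):
    """Hypothetical HAL interface: upper layers see only this API."""

    @abstractmethod
    def write_byte(self, value: int) -> None:
        """Send one byte through the UART."""


class FakeSocUart(UartHal):
    """Hypothetical driver for one specific UART block.

    Hardware registers are modeled as a plain dict so the sketch runs anywhere.
    """

    TX_REG = 0x00  # made-up register offset, for illustration only

    def __init__(self) -> None:
        self.registers = {self.TX_REG: 0}
        self.sent = bytearray()  # record of everything "transmitted"

    def write_byte(self, value: int) -> None:
        byte = value & 0xFF
        self.registers[self.TX_REG] = byte  # stand-in for a register write
        self.sent.append(byte)


def log_message(uart: UartHal, text: str) -> None:
    """Application-layer code: depends on the HAL, not on a specific driver."""
    for byte in text.encode("ascii"):
        uart.write_byte(byte)


uart = FakeSocUart()
log_message(uart, "boot ok")
print(uart.sent.decode("ascii"))
```

Because `log_message` is written against `UartHal`, porting it to a different SoC would only require a new driver class, which is the portability benefit the HAL is meant to provide.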

The Software Development Landscape

The global software development community, estimated to comprise around 12 million professional developers, is responsible for an astounding amount of code production. Various sources suggest an annual output ranging between 100 and 120 billion lines of code. This vast quantity reflects the ever-growing demand for software in diverse industries and applications.

However, this impressive volume comes with a significant challenge: the inherent presence of errors in new code. Web sources report a current error rate for new software code before debugging ranging from 15 to 50 errors per 1,000 lines. This translates to an estimated average of over 10 billion errors that need to be identified and fixed before software reaches the market. (See Appendix).
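As a rough cross-check, the two ranges quoted above can be multiplied directly. The sketch below uses only those quoted figures; the function name is hypothetical. Taken at face value, the quoted ranges give roughly 1.5 to 6 billion errors per year, so the larger estimate presumably rests on additional assumptions detailed in the Appendix.

```python
# Figures quoted in the text: annual output and pre-debug error density.
LINES_PER_YEAR = (100_000_000_000, 120_000_000_000)  # low, high
ERRORS_PER_1000_LINES = (15, 50)                     # low, high


def estimate_errors(lines, errors_per_1000):
    """Return (low, high) error counts for the given ranges."""
    low = lines[0] // 1000 * errors_per_1000[0]
    high = lines[1] // 1000 * errors_per_1000[1]
    return low, high


low, high = estimate_errors(LINES_PER_YEAR, ERRORS_PER_1000_LINES)
print(f"{low / 1e9:.1f} to {high / 1e9:.1f} billion errors per year")
```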

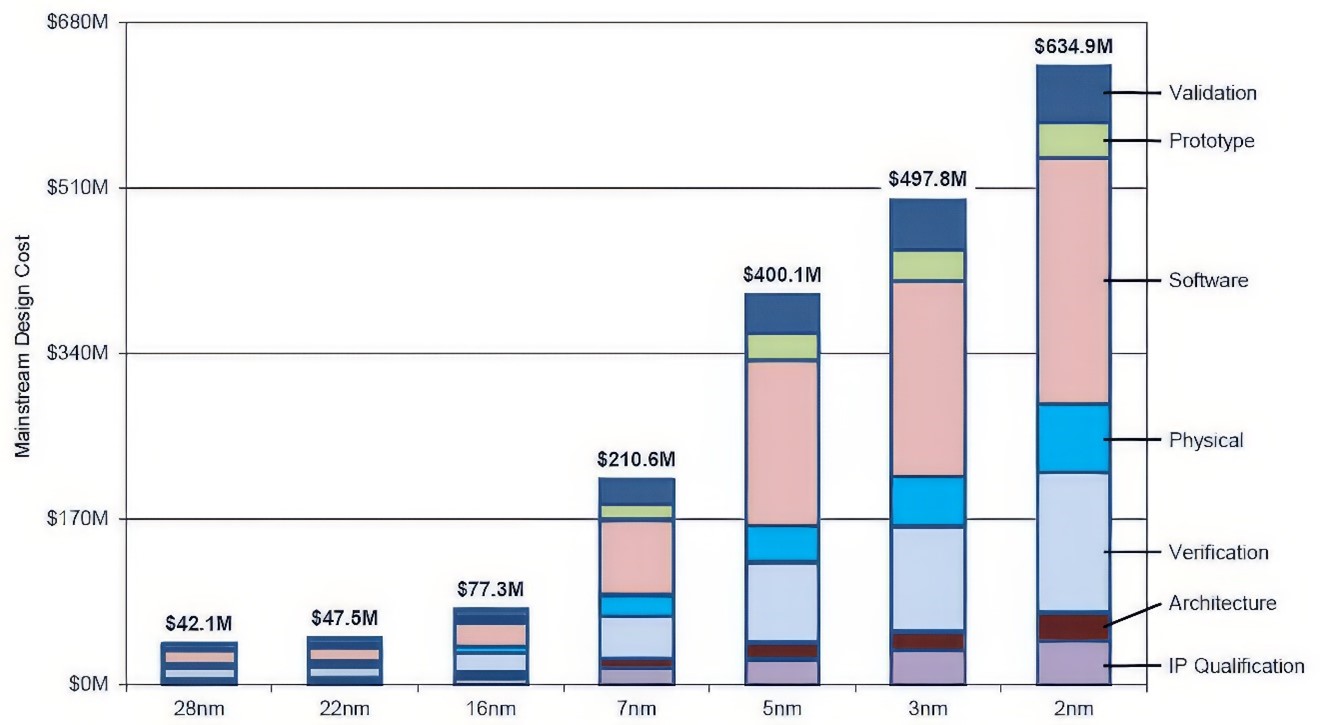

It’s no surprise that design verification and validation consume a disproportionately large portion of the project schedule. Tracking and eliminating bugs is a monumental task, particularly when software debugging is added to the hardware debugging process. According to a 2022 analysis by IBS, the cost of software validation in semiconductor and system design is double that of hardware verification, even before accounting for the cost of developing end-user application software (see Figure 2).

This disparity underscores the increasing complexity and critical importance of thorough software validation in modern SoC development.

SoC Software Verification and Validation Challenges

The multi-layered software stack driving today’s SoC designs cannot be effectively validated with a one-size-fits-all approach. This complex task demands a diverse set of tools and methods that share a common foundation: executing vast numbers of verification cycles, even for debugging bare-metal software, the smallest software block.

Given the iterative nature of debugging, which involves rerunning the same software tasks tens or hundreds of times, even basic tasks can quickly consume millions of cycles. The issue becomes more severe when booting operating systems and running large application-specific workloads, potentially requiring trillions of cycles.
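The way repeated debug runs multiply the cycle count can be sketched with simple arithmetic. The per-run figure of 100,000 cycles is an assumption chosen for illustration, not a number from the article; only the "tens or hundreds" of iterations comes from the text.

```python
# Assumption for illustration: one short bare-metal task takes 100,000 cycles.
CYCLES_PER_RUN = 100_000


def total_debug_cycles(cycles_per_run: int, iterations: int) -> int:
    """Cycles consumed when the same task is rerun during debugging."""
    return cycles_per_run * iterations


for iterations in (10, 100):
    total = total_debug_cycles(CYCLES_PER_RUN, iterations)
    print(f"{iterations:>3} runs -> {total:,} cycles")
```

Even with this small assumed task, one hundred debug iterations already reach ten million cycles.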

1) Bare-metal Software and Drivers Verification

At the bottom of the stack, verifying bare-metal software and drivers requires the ability to track the execution of the software code and its interaction with the underlying hardware. Access to processor registers is crucial for this task. Traditionally, this is achieved through the JTAG connection to the processor embedded in the design-under-test (DUT), which is available on a test board accommodating the SoC.

2) OS Booting

Moving up the stack, the next task is booting the operating system. As with debugging drivers, visibility into the hardware is essential. The demand now jumps to hundreds of billions of verification cycles.

3) Software Application Validation

At the top of the stack sits the debugging of application software workloads, which requires executing trillions of cycles.

These scenarios defeat traditional hardware description language (HDL) simulators, which cannot deliver the required number of cycles in a practical time. They run out of steam when processing designs or design blocks in the ballpark of one hundred million gates. A major processor firm reported that its leading-edge HDL simulator could only achieve clock rates of less than one hertz under such conditions. This makes HDL simulators impractical for real-world development cycles.

The alternative is to adopt either hardware-assisted verification (HAV) platforms or virtual prototypes that operate at a higher level of abstraction than RTL.

Virtual prototypes can provide an early start before RTL reaches maturity. Their adoption drove the shift-left verification methodology (see Part 3 of this series).

Once RTL is stable enough and the necessary hardware blocks or sub-systems for software development are available, HAV engines tackle the challenge by delivering the necessary throughput to effectively verify OS and software workloads.

Hardware-assisted Verification as the Foundation of SoC Verification and Validation

HAV platforms encompass both emulators and FPGA prototypes, each serving distinct purposes. Emulators are generally employed for software bring-up of existing software stacks or minor modifications of software for new SoC architectures, such as driver adaptations. In contrast, FPGA prototypes, due to their substantially higher performance—roughly 10 times faster than emulators—are advantageous for software development requiring higher fidelity hardware models at increased speeds. To remain cost-effective, FPGA prototypes often involve partial SoC prototyping, allowing for the replication of prototypes across entire teams.
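To put these throughput differences in perspective, the sketch below converts cycle counts into wall-clock time. Two inputs come from the article: the HDL simulator rate of at most one hertz on very large designs, and the roughly 10x speed advantage of FPGA prototypes over emulators. The emulator rate of 1 MHz is an assumption made purely for illustration, and the workload sizes use the low end of "hundreds of billions" and "trillions" of cycles.

```python
# Effective clock rates in cycles per second.
HDL_SIM_HZ = 1                         # article: "less than one hertz" (upper bound)
EMULATOR_HZ = 1_000_000                # ASSUMPTION: 1 MHz, for illustration only
FPGA_PROTO_HZ = 10 * EMULATOR_HZ       # article: roughly 10x faster than emulators

WORKLOADS = {
    "OS boot": 100_000_000_000,          # low end of "hundreds of billions"
    "application": 1_000_000_000_000,    # low end of "trillions"
}

SECONDS_PER_DAY = 86_400


def wall_clock_seconds(cycles: int, rate_hz: int) -> float:
    """Time needed to execute `cycles` at an effective clock rate of `rate_hz`."""
    return cycles / rate_hz


for name, cycles in WORKLOADS.items():
    for platform, rate in (("HDL simulator", HDL_SIM_HZ),
                           ("emulator", EMULATOR_HZ),
                           ("FPGA prototype", FPGA_PROTO_HZ)):
        days = wall_clock_seconds(cycles, rate) / SECONDS_PER_DAY
        print(f"{name:<12} on {platform:<14}: {days:,.2f} days")
```

Under these assumptions, an OS boot that would take thousands of years on the HDL simulator finishes in a little over a day on the emulator and in a few hours on the FPGA prototype.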

Working in parallel, hardware designers and software developers can significantly accelerate the development process. Emulation allows hardware teams to verify that bare-metal software, firmware, and OS programming interact correctly with the underlying hardware. FPGA prototypes enable software teams to quickly validate application software workloads when hardware design visibility for debugging is not critical. Increasingly, customers are extending the portion of the design being prototyped to the full design as software applications require interaction with many parts of the design. The scalability of prototypes into realms previously reserved for emulators is now essential.

Hardware engineers can realize the benefits of running software on hardware emulation, too. When actual application software is run on hardware for the first time, it almost always exposes hardware bugs missed by the most thorough verification methodologies. Running software early exposes these bugs when they can be addressed easily and inexpensively.

This parallel workflow can lead to a more efficient and streamlined development process, reducing overall time-to-market and improving product quality.

Conclusion

Inadequately tested hardware designs inevitably lead to respins, which increase design costs, delay the progression from netlist to layout, and ultimately push back time-to-market targets, severely impacting revenue streams.

Even more dramatic consequences arise from late-stage testing of embedded software, which can result in missed market windows and significant financial losses.
