Traditional logic testing relies on blasting pattern after pattern at the inputs, trying to exercise combinations to shake faults out of logic and hopefully have them manifested at an observable pin, be it a test point or a final output stage. It’s a remarkably inefficient process with a lot of randomness and luck involved.

Moving beyond naïve patterns (typified by the “walking 0 or 1” or transition sequences such as hex FF-00-AA-55 applied across a set of inputs) and random patterns that merely hope to bump into stuck-at defects, computational strategies for automated test pattern generation (ATPG) began modeling logic to look for more subtle faults such as transition, bridging, open, and small-delay faults. However, as designs have gotten larger, the gate-exhaustive strategy, which hits every input with every possible combination in a two-cycle sequence designed to shake out both static and dynamic faults, quickly generates a lot of patterns but still fails to find many cell-internal defects.
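To make the pattern-count problem concrete, here is a minimal Python sketch of the naïve walking patterns and of how quickly exhaustive counts grow. The function names are illustrative, and the two-cycle count assumes that "two-cycle" means every ordered pair of distinct input vectors applied to a single cell or gate; the white paper may define it differently.

```python
def walking_ones(width):
    """One pattern per input: a single 1 walks across a field of 0s."""
    return [1 << i for i in range(width)]


def walking_zeros(width):
    """One pattern per input: a single 0 walks across a field of 1s."""
    mask = (1 << width) - 1
    return [mask ^ (1 << i) for i in range(width)]


def exhaustive_count(n_inputs):
    """Number of static input combinations for an n-input gate."""
    return 2 ** n_inputs


def two_cycle_exhaustive_count(n_inputs):
    """Assumption: every ordered pair of distinct vectors (launch, capture)."""
    vectors = 2 ** n_inputs
    return vectors * (vectors - 1)


if __name__ == "__main__":
    print([format(p, "04b") for p in walking_ones(4)])
    print([format(p, "04b") for p in walking_zeros(4)])
    for n in (2, 4, 6):
        print(n, exhaustive_count(n), two_cycle_exhaustive_count(n))
```

Walking patterns grow linearly with the number of inputs, while the exhaustive counts grow exponentially, which is why the gate-exhaustive approach becomes expensive so quickly.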

A new white paper from Steve Pateras at Mentor Graphics explores a relatively new idea in ATPG that challenges many existing assumptions about finding and shaking faults from a design.

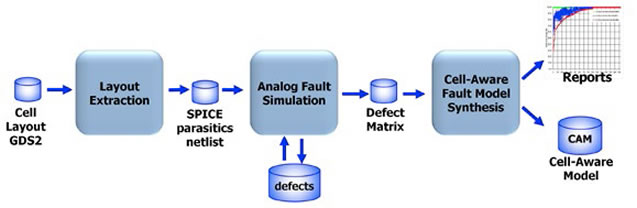

A cell-aware test approach goes much deeper, seeking to target specific defects with a smaller set of stimuli. Rather than analyzing the logic of the design first, cell-aware test goes after the technology library of the process node. It begins with an extraction of the physical library, sifting through the GDSII representation and looking at transistor-level effects with parasitic resistances and capacitances. These values then go into an analog simulation, which looks for a spanning set of cell voltage inputs (still in the analog domain) that can expose the possible faults.

After the simulation is extended into a two-cycle version that looks for small-delay defects, the results are ready to move into the digital domain. The list of input combinations is converted into the digital input values needed to expose each fault within each cell. With this cell-aware fault model, ATPG can now go after the gate-level netlist using the input combinations, creating a much more efficient set of patterns to expose the faults.
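The idea of a per-cell fault model can be illustrated with a toy Python sketch. It is not the flow described in the white paper: there is no analog simulation here, the defects are hypothetical and abstracted as the Boolean behavior of a faulty two-input NAND cell, and the pattern selection is a simple greedy set cover chosen for illustration.

```python
from itertools import product


def nand2(a, b):
    """Fault-free behavior of a two-input NAND cell."""
    return 1 - (a & b)


# Hypothetical defects, each abstracted as the faulty cell's Boolean behavior.
# In a real flow these would come from analog simulation of extracted layout.
DEFECTS = {
    "output_stuck_at_1": lambda a, b: 1,
    "output_stuck_at_0": lambda a, b: 0,
    "input_a_open_reads_1": lambda a, b: nand2(1, b),
    "input_b_open_reads_1": lambda a, b: nand2(a, 1),
}


def detection_table(good, defects, n_inputs=2):
    """Map each defect to the cell input combinations that expose it."""
    table = {}
    for name, faulty in defects.items():
        table[name] = {
            combo
            for combo in product((0, 1), repeat=n_inputs)
            if good(*combo) != faulty(*combo)
        }
    return table


def greedy_cover(table):
    """Pick input combinations until every detectable defect is covered."""
    remaining = {name for name, combos in table.items() if combos}
    chosen = []
    while remaining:
        candidates = sorted({c for name in remaining for c in table[name]})
        # Choose the combination that exposes the most uncovered defects.
        best = max(candidates, key=lambda c: sum(c in table[n] for n in remaining))
        chosen.append(best)
        remaining = {n for n in remaining if best not in table[n]}
    return chosen


if __name__ == "__main__":
    table = detection_table(nand2, DEFECTS)
    for name, combos in table.items():
        print(name, sorted(combos))
    print("selected cell inputs:", greedy_cover(table))
```

The table built by `detection_table` plays the role of the cell-aware fault model: a list of digital input values that expose each defect in a cell. A pattern generator then only has to justify those values at the cell inputs and propagate the cell output to an observable point.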

A big advantage of the cell-aware test approach is its fidelity to a process node. Once the analog analysis is applied to all cells in a technology library for a node, it becomes golden – designs using that node use the same cells, so the fault models are reusable.

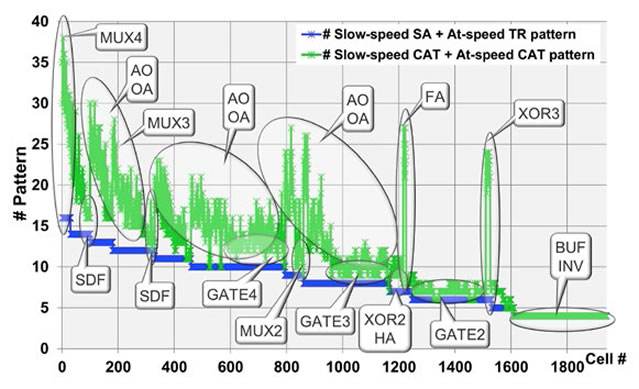

But how good are the patterns? Pateras offers a couple of pieces of analysis addressing the question. The first is a bit trivial: cell-aware patterns cover defects better in every cell, which follows by definition from the targeted analysis. The second observation is a bit more surprising: for a given cell, cell-aware analysis actually produces more patterns in many cases compared to stuck-at or transition strategies, but detects more defects per cell.

Play that back again: the cell-aware test approach is not about reducing pattern count, but rather about finding more defects. Pateras presents data for cell-aware test showing that, on average, the same number of patterns shakes out 2.5% more defects, and a 70% increase in patterns gets 5% more defects. Those gains are significant considering those defects are either blithely passing through device-level test or requiring more expensive screening steps, such as performance margining or system-level testing, to be exposed.

The last section of the paper looks at the new frontier of FinFETs, with a whole new set of defect mechanisms. Cell-aware test applies directly, able to model the physical properties of a process node and its defects. A short discussion of leakage and drive-strength defects explains how the analog simulation can handle these and any other defects that are uncovered as processes continue to evolve.

For finding more defects, cell-aware test shows significant promise.
