In April, I covered a new AI inference chip from Flex Logix. Called InferX X1, this part had some very promising performance metrics. Rather than the data center, the chip focused on accelerating AI inference at the edge, where power and form factor are key metrics for success. The initial information on the chip was presented at … Read More

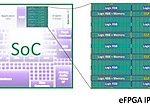

eFPGA – What’s Available Now, What’s Coming and What’s Possible!

eFPGA is now widely available, has been used in dozens of chips, is being designed into dozens more and it has an increasing list of benefits for a range of applications. Embedded FPGA, or eFPGA, enables your SoC to have flexibility in critical areas where algorithm, protocol or market needs are changing. FPGAs can also accelerate… Read More

Flex Logix CEO Update 2020

We started working with Flex Logix more than eight years ago, and let me tell you, it has been an interesting journey. Geoff Tate was our second CEO interview, so this is a follow-up to that. The first one garnered more than 15,000 views and I expect more this time given the continued success of Flex Logix pioneering the eFPGA market, absolutely.… Read More

Using ML Acceleration Hardware for Improved DSP Performance

Some amazing hardware is being designed to accelerate AI/ML, most of which features large numbers of MAC units. Given that MAC units are like the Lego blocks of digital math, they are also useful for a number of other applications. System designers are waking up to the idea of repurposing AI accelerators for DSP functions such as … Read More

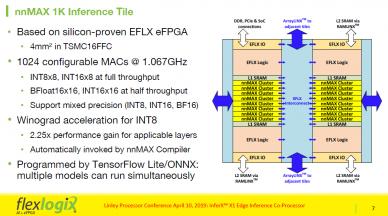

Accelerating Edge Inference with Flex Logix’s InferX X1

For a long time, memories were the primary technology driver for process development. If you built memories, you got access to cutting-edge process information. If you built other products, this could give you a competitive edge. In many cases, FPGAs are replacing memories as the driver for advanced processes. The technology… Read More

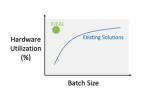

Characteristics of an Efficient Inference Processor

The market opportunities for machine learning hardware are becoming more clearly defined, with the following (rather broad) categories emerging:

- Model training: models are evaluated at the “hyperscale” data center, utilizing either general purpose processors or specialized hardware, with typical numeric precision of 32-bit

Efficiency – Flex Logix’s Update on InferX™ X1 Edge Inference Co-Processor

Last week I attended the Linley Fall Processor Conference held in Santa Clara, CA. This blog is the first of three blogs I will be writing based on things I saw and heard at the event.

In April, Flex Logix announced its InferX X1 edge inference co-processor. At that time, Flex Logix announced that the IP would be available and that a chip,… Read More

AI Inference at the Edge – Architecture and Design

In the old days, product architects would throw a functional block diagram “over the wall” to the design team, who would plan the physical implementation, analyze the timing of estimated critical paths, and forecast the signal switching activity on representative benchmarks. A common reply back to the architects was, “We’ve… Read More

eFPGA – What a great idea! But I have no idea how I’d use it!

eFPGA stands for embedded Field Programmable Gate Array. An eFPGA is a programmable device like an FPGA, but rather than being sold as a completed chip, it is licensed as a semiconductor IP block. ASIC designers can license this IP and embed it into their own chips, adding the flexibility of programmability at an incremental cost.… Read More

Flex Logix InferX X1 Optimizes Edge Inference at Linley Processor Conference

Dr. Cheng Wang, Co-Founder and SVP Engineering at Flex Logix, presented the second talk in the ‘AI at the Edge’ session at the just-concluded Linley Spring Processor Conference, highlighting the InferX X1 Inference Co-Processor’s high throughput, low cost, and low power. He opened by pointing out that existing inference solutions… Read More