Some amazing hardware is being designed to accelerate AI/ML, and most of it features large numbers of multiply-accumulate (MAC) units. Because MAC units are the Lego blocks of digital math, they are also useful for many other applications. System designers are waking up to the idea of repurposing AI accelerators for DSP functions such as FIR filters. Evidence of this demand comes from Flex Logix, whose customers have been asking whether its nnMAX-based InferX X1 accelerator chips can be used effectively for FIR filtering. Flex Logix's answer is a resounding yes, and the company presented supporting data at the Spring Linley Processor Conference in March.

Flex Logix Senior VP Cheng C. Wang gave a presentation titled “DSP Acceleration using nnMAX” that shows how effectively the nnMAX architecture handles DSP functions. Applications such as 5G, testers, base stations, radar and imaging all need high sample rates and large numbers of taps. Some system designers use expensive FPGAs or high-end DSP chips to get the performance they need. In fact, expensive FPGAs are often used just for their DSP blocks.
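Why tap count and sample rate drive hardware cost: a direct-form FIR filter computes each output as y[n] = Σ h[k]·x[n−k], so every tap costs one multiply-accumulate per output sample, and the required MAC rate is taps × sample rate. The minimal sketch below uses plain Python lists rather than any DSP library; `fir_direct` and `required_mac_rate` are illustrative names, not part of any Flex Logix tool.

```python
def fir_direct(h, x):
    """Direct-form FIR: y[n] = sum_k h[k] * x[n - k], treating x[n] = 0 for n < 0.

    Returns the output samples and the number of multiply-accumulates performed.
    """
    y = []
    macs = 0
    for n in range(len(x)):
        acc = 0
        for k, coeff in enumerate(h):
            if n - k >= 0:
                acc += coeff * x[n - k]
            macs += 1  # hardware spends one MAC per tap per output, even on zero-padded input
        y.append(acc)
    return y, macs


def required_mac_rate(taps, samples_per_second):
    """MACs per second needed to run a `taps`-tap FIR at the given sample rate."""
    return taps * samples_per_second


y, macs = fir_direct([1, 2, 3], [1, 0, 0, 1])
print(y, macs)  # [1, 2, 3, 1] 12
print(required_mac_rate(1024, 1_000_000_000) / 1e12, "TMAC/s")  # 1.024 TMAC/s
```

A 1024-tap filter at 1 GSps therefore needs on the order of a trillion MACs per second, which is why large MAC arrays are attractive for this job.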

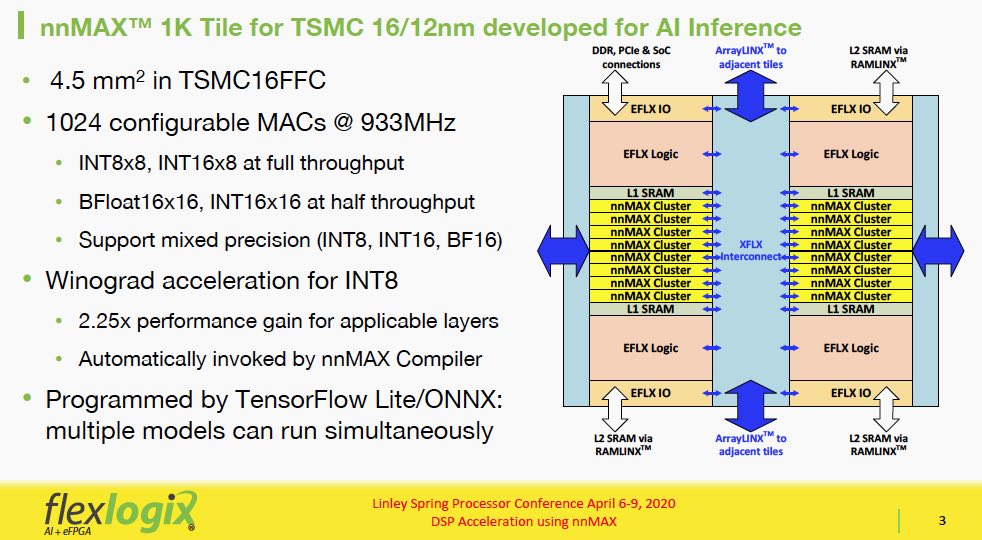

The nnMAX tiles used in the InferX X1 each contain 1024 configurable MACs that run at 933MHz. They support INT8x8 and INT16x8 at full throughput, while BFloat16x16 and INT16x16 run at half throughput. Mixed precision is supported as well. nnMAX also provides Winograd acceleration for INT8, which can boost performance by 2.25x. For AI/ML, nnMAX is programmed from TensorFlow Lite or ONNX models, and multiple models can run simultaneously.
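The per-tile figures above translate into peak throughput with simple arithmetic. The sketch below uses only the quoted numbers (1024 MACs, 933MHz, half rate for 16x16 modes) and ignores memory stalls, so it is an upper bound rather than a measured result. The 2.25x Winograd figure matches the multiply reduction of the common F(2×2, 3×3) transform (36 multiplies down to 16); which Winograd variant nnMAX actually uses is an assumption here.

```python
# Peak MAC throughput for nnMAX tiles, from the figures quoted in the article.
# "Peak" assumes every MAC is busy every cycle (no memory or control stalls).
MACS_PER_TILE = 1024
CLOCK_HZ = 933e6

# Relative throughput per precision mode (1.0 = full rate), as described in the text.
RATE = {
    "INT8x8": 1.0,
    "INT16x8": 1.0,
    "BF16x16": 0.5,
    "INT16x16": 0.5,
}


def peak_gmacs(mode, tiles=1):
    """Peak throughput in GMAC/s for `tiles` nnMAX tiles in the given precision mode."""
    return MACS_PER_TILE * CLOCK_HZ * RATE[mode] * tiles / 1e9


# Winograd F(2x2, 3x3) (ASSUMED variant): a 2x2 output block of a 3x3 convolution
# needs (2 + 3 - 1)^2 = 16 multiplies instead of 2*2*3*3 = 36 done directly.
direct_mults = 2 * 2 * 3 * 3
winograd_mults = (2 + 3 - 1) ** 2
winograd_gain = direct_mults / winograd_mults

for mode in RATE:
    print(f"{mode:9s} {peak_gmacs(mode):7.1f} GMAC/s per tile")
print(f"Winograd gain: {winograd_gain}x")
print(f"INT8 with Winograd: {peak_gmacs('INT8x8') * winograd_gain:.1f} effective GMAC/s per tile")
```

Passing `tiles=4` gives the peak for a 2×2 array, since peak compute grows in proportion to tile count.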

nnMAX tiles can be arrayed to add compute capacity; in the InferX X1, each tile has 2MB of L2 SRAM. Going to a 2×2 array, or even up to 7×7, increases compute roughly in proportion to the tile count: a 2×2 array has 4 tiles and a 7×7 array has 49. Each tile is in turn built from nnMAX clusters, with each cluster performing a 32-bit tap filter. When longer filters are needed, nnMAX clusters are chained to form filters with thousands or tens of thousands of taps.
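Chaining works because a long FIR decomposes exactly into short sections: if the taps are split into blocks of L, section s applies taps h[sL : (s+1)L] to the input delayed by sL samples, and summing the section outputs reproduces the full filter. The sketch below shows that standard decomposition in plain Python; the 16-tap section length is chosen to echo the per-cluster figure Cheng quoted for 1 GSps, and whether nnMAX chains clusters in exactly this way is an assumption.

```python
import random


def fir(h, x):
    """Direct-form FIR with zero initial state: y[n] = sum_k h[k] * x[n - k]."""
    return [
        sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0)
        for n in range(len(x))
    ]


def fir_chained(h, x, section_len=16):
    """Split an N-tap FIR into sections of `section_len` taps and sum their outputs.

    Section s holds taps h[s*L : (s+1)*L] and sees the input delayed by s*L samples,
    modelling a chain of small filter blocks whose partial sums are added together.
    """
    y = [0] * len(x)
    for start in range(0, len(h), section_len):
        section = h[start:start + section_len]
        delayed = ([0] * start + x)[:len(x)]  # x delayed by `start` samples, same length
        for n, value in enumerate(fir(section, delayed)):
            y[n] += value
    return y


# Usage: a 100-tap filter built from 16-tap sections matches the direct form exactly
# (integer data, so the comparison is exact).
random.seed(0)
h = [random.randint(-8, 8) for _ in range(100)]
x = [random.randint(-128, 127) for _ in range(300)]
assert fir_chained(h, x) == fir(h, x)
```

Because each section only needs its own taps plus a delayed copy of the input, adding sections extends the filter length without changing the per-section logic.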

Cheng gave several examples of possible configurations. For instance, at 1,000 megasamples per second (1GHz clock), an nnMAX cluster supports 16 taps, an nnMAX 1K tile supports 256 taps, and a 2×2 nnMAX array supports 1024 taps. So what does all this mean for FIR performance?
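The quoted tap counts are internally consistent, and a little arithmetic on them shows how capacity scales. The sketch below derives the cluster-to-tile ratio and the MACs spent per tap at 1 GSps from the article's numbers, then extrapolates to larger arrays under the labeled assumption that tap capacity scales linearly with tile count. The 7×7 result is an extrapolation, not a figure from the presentation.

```python
# Tap capacity at 1,000 MS/s (1 GHz clock), from the figures Cheng quoted.
TAPS_PER_CLUSTER = 16
TAPS_PER_TILE = 256
MACS_PER_TILE = 1024

# A tile holds the tap capacity of 16 clusters at this sample rate.
clusters_per_tile = TAPS_PER_TILE // TAPS_PER_CLUSTER
# Each tap uses 4 of the tile's 1024 MACs at this operating point (breakdown not given).
macs_per_tap = MACS_PER_TILE // TAPS_PER_TILE


def taps_for_array(rows, cols):
    """Taps for a rows x cols tile array, ASSUMING capacity scales linearly with tile count."""
    return rows * cols * TAPS_PER_TILE


for rows, cols in [(1, 1), (2, 2), (7, 7)]:
    print(f"{rows}x{cols} array: {taps_for_array(rows, cols):,} taps")
```

The 2×2 case reproduces the 1024 taps quoted above, which supports the linear-scaling assumption at least up to that size.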

In one of the slides from the Linley presentation, Cheng compares nnMAX to a Virtex UltraScale. The comparison shows that an nnMAX 2×2 array can run a 1000-tap FIR at the same sample rate as a 21-tap FIR on the UltraScale. Considering that the UltraScale die is hundreds of mm² and costs hundreds of dollars, while the nnMAX 2×2 array with 8MB of SRAM is just 26 mm², these are impressive results. Cheng also provides an eye-opening comparison between the CEVA XC16 and nnMAX. The full presentation includes all of the comparison numbers.

Cheng pointed out that FIR filtering was the first application they tackled with nnMAX. They already have a major customer for it and are working to improve usability by mapping MATLAB output onto nnMAX. nnMAX will also be ported to new process nodes, GF12LLP and TSMC N7/N6. Their next target application is high-speed FFT.

So it seems that the development of AI accelerators brings collateral benefits. Of course, even for AI/ML alone, nnMAX technology offers very high performance and performance per dollar. The full slide deck from the Linley presentation is available on the Flex Logix website and offers more detail than can be provided here. I suggest taking a look if you are looking for AI/ML or DSP acceleration.
