Earlier this year semiconductor oracle Malcolm Penn presented his 2022 forecast, which I covered here: Are We Headed for a Semiconductor Crash? The big difference with this update is the dark economic clouds now looming, which may again highlight Malcolm’s forecasting prowess. I spent an hour with Malcolm and company on his Zoom cast yesterday and now have his slides. Great chap that Malcolm.

The $600B question is: When will the semiconductor CEOs start issuing warnings?

RECAP: PERFECT STORM BROKE IN JULY 2020 (BUT NOBODY WAS PAYING ATTENTION)

The 6.5% growth in 2020 set the stage for a big 2021, which ended up at 26%. Previous semiconductor records were 37% in 2000 and 32% in 2010, so 26% is not an extreme number, meaning we will have a shorter distance to fall. Covid was the trigger for our recent shortages, but the real cause was a supply/demand imbalance camouflaged by a crippled supply chain.

The big difference today is the daunting economic and geopolitical issues that could raise Malcolm to genius forecasting level: the horrific geopolitics with Russia and China, rampant inflation, workforce challenges around the world, and of course Covid, which is not done with us yet, not even close.

Let’s take a look at the four key drivers from Malcolm’s presentation:

- Economy: Determines what consumers can afford to buy.

- Unit Demand: Reflects what consumers actually buy plus/minus inventory adjustments.

- Capacity: Determines how much demand can be met (under or over supply).

- ASPs: Sets the price units can be sold for (supply – demand + value proposition).

The Economy is the big change since I last talked to Malcolm. In my 60 years I have never experienced a more uncertain time, other than the housing crash in 2008 when a significant amount of my net worth disappeared overnight. Today, however, I am a financial genius for holding fast. Property values here are about double their 2008 peak, which is great but also a little concerning.

Bottom line: I think we can all agree the economy is in turmoil with the inflation spike and the jump in interest rates and debt. Maybe some financial experts can chime in here, but in my experience this trend will get worse (recession?) before it gets better.

Unit Demand is definitely increasing due to the digital transformation that we have been working on for years. Here is a slide from a keynote at the Siemens EDA Users meeting last week; I will be writing about it in more detail next week. Unit volume reveals the truth better than revenue does, so this is the one to watch. Unfortunately, “take or pay” and “prepay” contracts are becoming much more common, and that can distort unit demand as a forecasting metric.

Bottom line: Long-term semiconductor unit demand will continue to grow, in my opinion, though not at the rate we experienced in 2021, which was largely due to the Covid backlog and inventory builds. The big risk here is China. China is in turmoil, and it is the largest consumer of semiconductors. China stemmed the first Covid surge with draconian measures, which it is employing again, and again the electronics supply chain is impeded. Other parts of the world that are not paying attention to what is happening in China will suffer the consequences in the months to come, in my opinion.

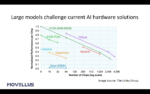

Capacity is a tricky one. Let’s break it into two parts: leading-edge nodes (FinFETs) and mature nodes (not FinFETs). We are building leading-edge capacity with impunity. It’s a PR race between Intel, Samsung, and TSMC, and since Intel is outsourcing significant FinFET capacity to TSMC, it gets even trickier.

To be clear, mature-node capacity is being added rapidly, a lot of it in China since China does not have access to leading-edge technology, but it will pale in comparison to FinFET capacity. Reshoring semiconductor manufacturing and the record-setting CAPEX numbers are also an important part of this equation, which makes the oversupply argument even easier.

On the other side of the equation, the semiconductor equipment companies are hugely backlogged no matter who you are, and the electronics supply chain is still crippled, so announcing CAPEX and actually spending it are two different things.

In my opinion, if all of the announced CAPEX is actually spent, there will be some empty fabs waiting for equipment and customers. Remember, Intel had an empty fab in AZ for years, and there are still empty fabs all over China. Staffing new fabs will also be a challenge since the semiconductor talent pool is seriously strained.

Bottom line: We did not have a wafer manufacturing capacity problem before Covid, we do not have a wafer manufacturing capacity problem today, and I don’t see an oversupply risk in the future. We did have a surge in chip demand due to Covid, but that will end soon, and the crippled supply chain (the inability to get systems assembled and delivered to customers) is easing the fab pressures. That will continue this year and next, depending on Covid and how we respond to it.

ASPs are being propped up by the shortage narrative. Brokers, distributors, and middlemen are hoarding and raising prices, causing ever more supply chain issues. I have heard of 10x+ price increases for $1 MPUs. Systems companies are paying a premium for off-the-shelf chips, and foundries are raising wafer prices by record amounts, which at some point will calm demand.

Bottom line: Malcolm is convinced a significant crash is coming, but I do not agree, based on my ramblings above. If someone asked me to place a 10% over/under bet on semiconductor revenue growth in 2022, I would bet the farm on the over. My personal number was and still is 15% growth in 2022.

Let me know if you agree or disagree in the comment section and we can go from there. Exciting times ahead, absolutely.
