SLAM (simultaneous localization and mapping) is already a well-established technology in robotics. It generally starts with visual SLAM (VSLAM), which uses object recognition to detect landmarks and obstacles. VSLAM alone works from a 2D view of a 3D environment, which limits accuracy; improvements depend on complementary sensing inputs such as inertial measurement. This combined approach, known as VISLAM, works well in good lighting conditions and does not necessarily depend on fast frame rates for visual recognition. Automotive applications are now adopting SLAM, but they cannot guarantee good visibility and they demand fast response times. LIDAR-based SLAM, notably LOAM (LIDAR Odometry and Mapping), is a key driver in this area.

SLAM in automotive

Before we think about broader autonomy, consider self-parking. Parallel parking is one obvious example, already available in some models. More elaborate is the ability for a car to valet park itself in a parking lot (and return to you when needed). Parking assist functions may not require SLAM, but true autonomous parking absolutely requires that capability and is generating a lot of research and industry attention.

2D imaging alone is not sufficient to support this level of autonomy, where awareness of distances to obstacles around the car is critical. Inertial measurement and other types of sensing can plug this hole, but there is a more basic problem in self-parking applications: poor or confusing lighting in parking structures, or on streets at night, can make visual SLAM a low-quality option. Without reliable visual input, the whole localization and mapping objective is compromised.

At first glance, LIDAR is the obvious solution. It works well in poor lighting, at night and in fog. But there is another problem: the nature of LIDAR requires a somewhat different approach to SLAM.

The SLAM challenge

SLAM implementations such as ORB-SLAM perform three major functions. Tracking performs (visual) frame-to-frame registration and localizes each new frame on the current map. Mapping adds points to the map and optimizes locally by building and solving a complex set of linear equations. These estimates are subject to drift as errors accumulate. The third function, loop closure, corrects that drift by adjusting the map when the platform revisits places it has already mapped; this too comes down to solving a large set of linear equations.
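To make the loop-closure idea concrete, here is a toy sketch, assuming a robot that moves along a single axis so that each pose is just one number. Each odometry constraint says "pose j is z metres beyond pose i"; a loop-closure constraint says the robot is back where it started. Stacking these gives an over-determined linear system, solved here in the weighted least-squares sense through its normal equations. The names `optimize_poses` and `solve_linear` are hypothetical, and real systems such as ORB-SLAM optimize full 3D poses with nonlinear, sparse solvers; the sketch only shows why loop closure reduces to linear algebra.

```python
from typing import List, Tuple

# One constraint (i, j, z, w): "x_j - x_i was measured as z", trusted with weight w.
Constraint = Tuple[int, int, float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[r]] for r, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def optimize_poses(num_poses: int, constraints: List[Constraint]) -> List[float]:
    """Weighted least squares over 1D poses; pose 0 is anchored at 0 (gauge fix)."""
    n = num_poses - 1                   # unknowns are x_1 .. x_{N-1}
    h = [[0.0] * n for _ in range(n)]   # normal-equation matrix  A^T W A
    g = [0.0] * n                       # right-hand side         A^T W z
    for i, j, z, w in constraints:
        # Residual r = x_j - x_i - z has derivative +1 w.r.t. x_j and -1 w.r.t. x_i.
        terms = [(j, 1.0), (i, -1.0)]
        for p, sp in terms:
            if p == 0:
                continue                # x_0 is fixed, so it has no row
            g[p - 1] += w * sp * z
            for q, sq in terms:
                if q != 0:
                    h[p - 1][q - 1] += w * sp * sq
    return [0.0] + solve_linear(h, g)


if __name__ == "__main__":
    # True path: +1, +1, -1, -1 (back to the start); odometry has a +0.1 bias per step.
    odometry = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, -0.9, 1.0), (3, 4, -0.9, 1.0)]
    loop_closure = [(0, 4, 0.0, 1.0)]   # "pose 4 is the same place as pose 0"

    dead_reckoned = [0.0]
    for _, _, z, _ in odometry:
        dead_reckoned.append(dead_reckoned[-1] + z)

    print("dead reckoning:", [round(x, 3) for x in dead_reckoned])
    print("with loop closure:", [round(x, 3) for x in optimize_poses(5, odometry + loop_closure)])
```

Dead reckoning alone ends at 0.4 m from the start. With equal weights, the least-squares solution spreads that 0.4 m inconsistency evenly over the five constraints, giving poses of roughly 0, 1.02, 2.04, 1.06 and 0.08 m. Raising the loop-closure weight pulls the final pose closer to zero.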

Some of these functions run very effectively on a host CPU. Others, such as the linear algebra, run best on a heavily parallel system, for which a DSP-based platform is the obvious choice. CEVA already supports VSLAM development through its SensPro solution, providing effective real-time SLAM in daytime lighting at up to 30 frames per second.

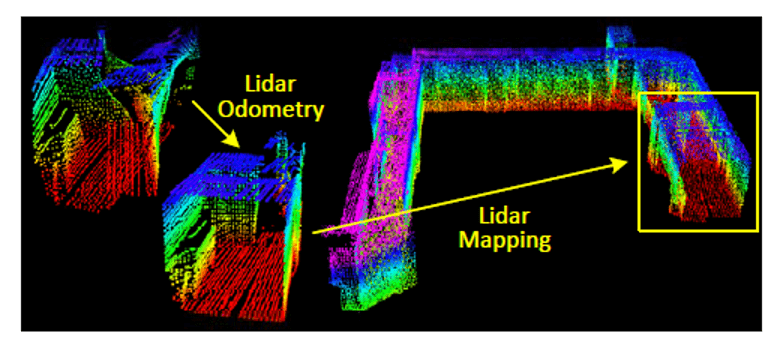

LIDAR SLAM, as an alternative to VSLAM in poor light, presents a different problem. LIDAR works by sweeping a laser beam across the scene, either mechanically or electronically. From the reflections it builds up a point cloud of surrounding objects, with ranging information for each point. Because the LIDAR platform is moving during each sweep, this point cloud starts out distorted. One piece of research proposes mitigating this distortion with two algorithms running at different frequencies: one estimates the velocity of the LIDAR and the other performs mapping. Through this analysis alone, without inertial corrections or loop closure, the authors assert they can reach accuracy comparable to conventional batch SLAM calculations. The paper does suggest that adding inertial measurement and loop closure would be obvious next steps.
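The motion-distortion problem can be sketched in a few lines, assuming a 2D scanner, a velocity estimate (for instance from the faster of the two algorithms) that stays constant over one sweep, and a sensor pose that grows linearly with time. Each point carries the time at which it was captured, and the sketch moves every point back into the sensor frame at the start of the sweep. `TimedPoint`, `deskew_sweep` and `capture` are hypothetical names, the velocities are assumed to be expressed in the start-of-sweep frame, and this is a simplification for illustration rather than the paper's algorithm.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TimedPoint:
    x: float  # metres, in the sensor frame at the instant of capture
    y: float
    t: float  # seconds since the start of the sweep


def sensor_pose(t: float, vx: float, vy: float, omega: float) -> Tuple[float, float, float]:
    """Sensor pose (x, y, yaw) at time t relative to the start of the sweep,
    assuming constant velocity so that the pose grows linearly with t."""
    return vx * t, vy * t, omega * t


def deskew_sweep(points: List[TimedPoint], vx: float, vy: float,
                 omega: float) -> List[Tuple[float, float]]:
    """Re-express every point in the sensor frame at the start of the sweep."""
    corrected = []
    for p in points:
        tx, ty, yaw = sensor_pose(p.t, vx, vy, omega)
        c, s = math.cos(yaw), math.sin(yaw)
        # Rotate by the yaw accumulated since the sweep began, then translate.
        corrected.append((c * p.x - s * p.y + tx, s * p.x + c * p.y + ty))
    return corrected


def capture(world_xy: Tuple[float, float], t: float, vx: float, vy: float,
            omega: float) -> TimedPoint:
    """Simulate what the moving sensor measures: the inverse of deskewing."""
    tx, ty, yaw = sensor_pose(t, vx, vy, omega)
    dx, dy = world_xy[0] - tx, world_xy[1] - ty
    c, s = math.cos(yaw), math.sin(yaw)
    return TimedPoint(c * dx + s * dy, -s * dx + c * dy, t)


if __name__ == "__main__":
    # A flat wall 10 m ahead, scanned over a 0.1 s sweep while driving at 2 m/s
    # and turning at 0.5 rad/s.
    vx, vy, omega = 2.0, 0.0, 0.5
    wall = [(10.0, -2.0 + 0.4 * k) for k in range(11)]
    raw = [capture(w, 0.01 * k, vx, vy, omega) for k, w in enumerate(wall)]
    fixed = deskew_sweep(raw, vx, vy, omega)
    print("raw x:", [round(p.x, 3) for p in raw])
    print("deskewed x:", [round(x, 3) for x, _ in fixed])
```

In the raw sweep the straight wall appears bent, because later points were measured from a position further forward and from a rotated heading; after deskewing, every point lies back on the line x = 10 m. If the velocity estimate is wrong, the correction is wrong by the same amount, which is why the velocity estimate and the mapping work together.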

Looking forward

Autonomous navigation still has much to offer, even before we get to fully driverless cars. Any such solution operating without detailed maps (for parking applications, for example) must depend on SLAM: VISLAM for daytime outdoor navigation, and LOAM for poor visibility and indoor navigation in constrained spaces. As an absolutely hopeless parallel parker, I can’t wait!
