The surge in Edge AI applications has increased the need for architectures that balance performance, power efficiency, and flexibility. Architectural choices largely determine the success of AI processing at the edge, and trade-offs are often necessary to meet the demands of diverse workloads. Common AI processing architectures rest on three pillars (scalar, vector, and tensor processing), each with its own hardware and software requirements.

The Three Pillars of Common AI Processing Architectures

Scalar processing architectures are designed for tasks such as user interface management and decision-making based on temporal data, where compute requirements are modest. They process tasks quickly and sequentially, which makes them a good fit for applications where swift decision-making is crucial. The trade-off is limited parallelism: the sequential execution model is efficient for control-oriented tasks but constrains workloads that benefit from parallel processing.
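As a rough illustration of the kind of work described here, the sketch below makes a sequence of on/off decisions over time-ordered sensor readings. The function name, thresholds, and readings are all hypothetical; the point is only that each step depends on the state left by the previous one, so the loop is inherently sequential.

```python
def hysteresis_decisions(samples, on_threshold, off_threshold):
    """Walk the samples one at a time; each decision depends on the prior state."""
    active = False
    decisions = []
    for value in samples:
        # Data-dependent branching on the current state: easy for a scalar
        # core, awkward to spread across parallel lanes.
        if not active and value >= on_threshold:
            active = True
        elif active and value <= off_threshold:
            active = False
        decisions.append(active)
    return decisions


# Hypothetical sensor readings, oldest first.
readings = [0.1, 0.4, 0.9, 0.7, 0.5, 0.2, 0.1]
print(hysteresis_decisions(readings, on_threshold=0.8, off_threshold=0.3))
```

Because the decision at step n needs the result of step n-1, adding more parallel hardware would not shorten this loop, which is the limitation noted above.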

Vector processing architectures perform the same operation simultaneously on multiple data elements, making them particularly suitable for signal processing followed by AI perception tasks. Their key characteristic is enhanced parallelism, which enables efficient handling of vectors and arrays. The trade-off is that they are less suitable for tasks with irregular or unpredictable data patterns: their strength lies in structured data processing, and they may struggle when data does not follow a regular layout.
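The following sketch models the idea in plain Python by processing arrays in fixed-width chunks, with one chunk standing in for one vector instruction. The lane width of 4 and the function names are assumptions made for illustration, not a description of any particular processor.

```python
LANES = 4  # hypothetical vector width, chosen only for illustration


def axpy_chunked(alpha, x, y, lanes=LANES):
    """Compute alpha * x + y in fixed-width chunks, as a vector unit would."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    out = []
    for start in range(0, len(x), lanes):
        xs = x[start:start + lanes]
        ys = y[start:start + lanes]
        # One modeled "vector instruction": the same multiply-add on every lane.
        out.extend(alpha * xi + yi for xi, yi in zip(xs, ys))
    return out


def vector_steps(n, lanes=LANES):
    """Number of chunked steps needed for n elements (ceiling division)."""
    return -(-n // lanes)


print(axpy_chunked(2, [1, 2, 3, 4, 5], [10, 10, 10, 10, 10]))
print(vector_steps(5), "vector steps instead of 5 scalar steps")
```

The benefit depends on every lane doing the same work. If each element needed a different branch, as in irregular data, the chunks could no longer be handled by a single operation.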

Tensor (matrix) processing architectures are tailored to deep learning, excelling at the matrix operations integral to applications such as image recognition, computer vision, and natural language processing. Their key characteristic is the efficient handling of large matrices and neural networks, which makes them essential for the data structures common in advanced AI applications. The trade-off is computational intensity and, with it, power consumption, which requires careful consideration in edge computing scenarios with limited power resources.
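To make the computational intensity concrete, here is a minimal sketch of a dense matrix multiplication together with a count of the multiply-accumulate (MAC) operations it requires. The helper names and the layer sizes in the example are hypothetical.

```python
def matmul(a, b):
    """Multiply an (M x K) matrix by a (K x N) matrix given as nested lists."""
    inner = len(b)
    cols = len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def mac_count(rows, inner, cols):
    """A dense (rows x inner) @ (inner x cols) product needs rows*inner*cols MACs."""
    return rows * inner * cols


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
# A hypothetical fully connected layer: 1 input row, 1024 inputs, 1024 outputs.
print(mac_count(1, 1024, 1024), "MACs for one layer")
```

The MAC count grows with the product of all three dimensions, which is why dedicated matrix hardware, and the power it draws, matters for these workloads.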

Hardware and Software Aspects

On the hardware side, a diverse array of solutions caters to varying requirements. Specialized processor units such as Neural Processing Units (NPUs) stand out for their ability to handle the intricate tasks associated with deep learning. Digital Signal Processors (DSPs) play an indispensable role in signal processing, ensuring efficient and precise manipulation of data. Hybrid solutions combine scalar, vector, and tensor processing architectures to handle a broad spectrum of AI workloads. Customizable configurations add further adaptability by allowing hardware to be tailored and optimized for specific applications.
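One way to picture a hybrid solution is as a dispatcher that sends each stage of a pipeline to the engine class suited to it. The sketch below is purely illustrative: the workload labels, the `Engine` enum, and the routing table are hypothetical and simply mirror the pairings described in this article.

```python
from enum import Enum


class Engine(Enum):
    SCALAR = "scalar"
    VECTOR = "vector"
    TENSOR = "tensor"


# Hypothetical workload labels mapped to the engine class this article
# associates with them.
ROUTING = {
    "ui_management": Engine.SCALAR,
    "temporal_decision": Engine.SCALAR,
    "signal_processing": Engine.VECTOR,
    "deep_learning_inference": Engine.TENSOR,
}


def route(workload):
    """Return the engine class for a workload label, or raise if unknown."""
    try:
        return ROUTING[workload]
    except KeyError:
        raise ValueError(f"unknown workload kind: {workload!r}") from None


def plan(pipeline):
    """Pair each pipeline stage with the name of the engine it would run on."""
    return [(stage, route(stage).value) for stage in pipeline]


print(plan(["signal_processing", "deep_learning_inference", "temporal_decision"]))
```

A real system would weigh data movement, latency, and power rather than use a static table, but the table captures the basic division of labor.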

In terms of software for AI processing, a robust ecosystem is indispensable, characterized by optimized libraries, flexible frameworks, and developer tools. Optimized libraries stand as the basis for efficiency, offering specialized software components meticulously crafted to enhance the performance of AI tasks. Flexible frameworks play a pivotal role by providing a supportive environment that accommodates a variety of AI models, ensuring adaptability to evolving requirements. Complementing these, developer tools serve as catalysts in the configuration, optimization, and overall development of AI applications. Together, these software components form a cohesive foundation, empowering developers to navigate the intricacies of AI processing with efficiency, adaptability, and streamlined development workflows.

CEVA’s Pioneering Solutions

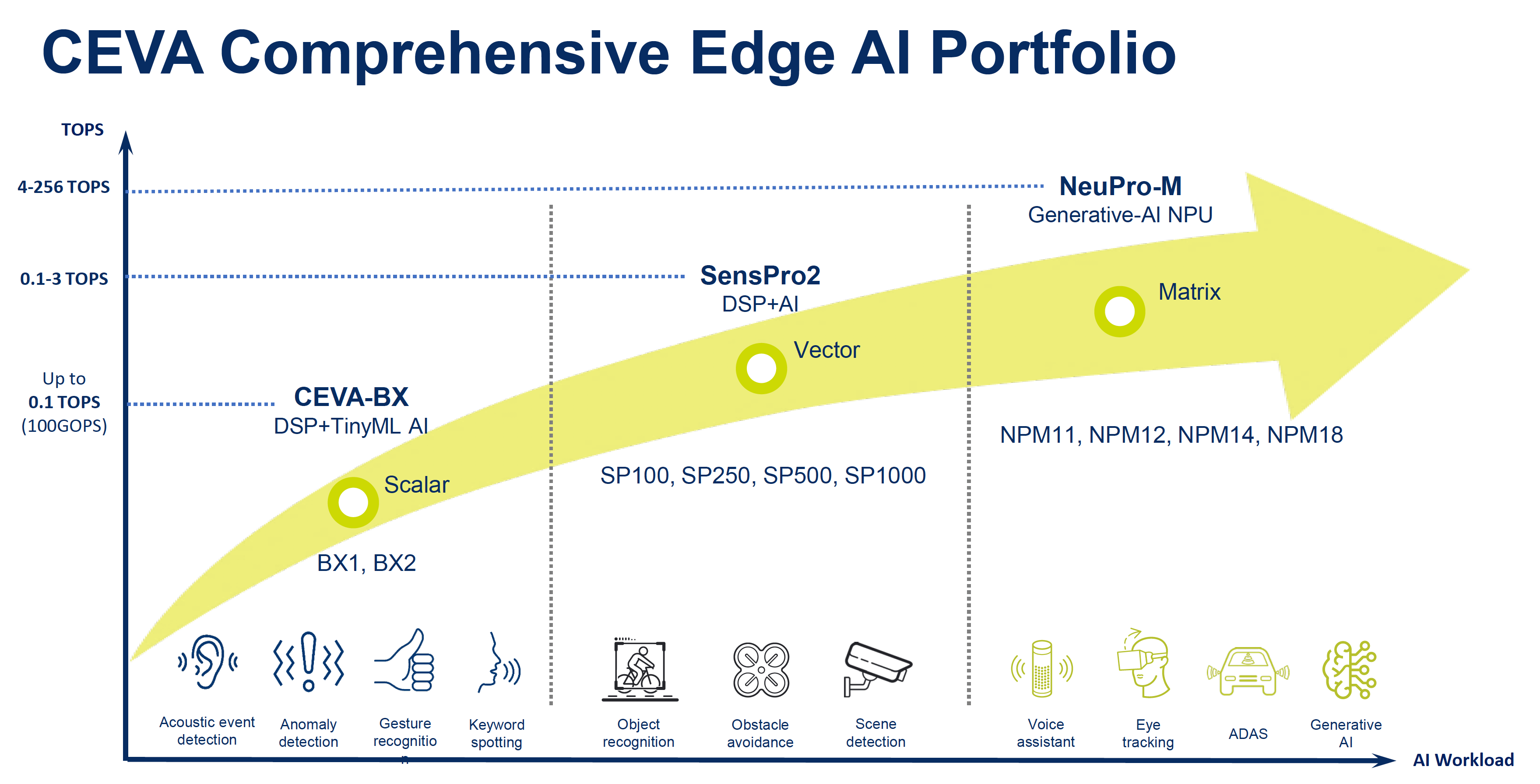

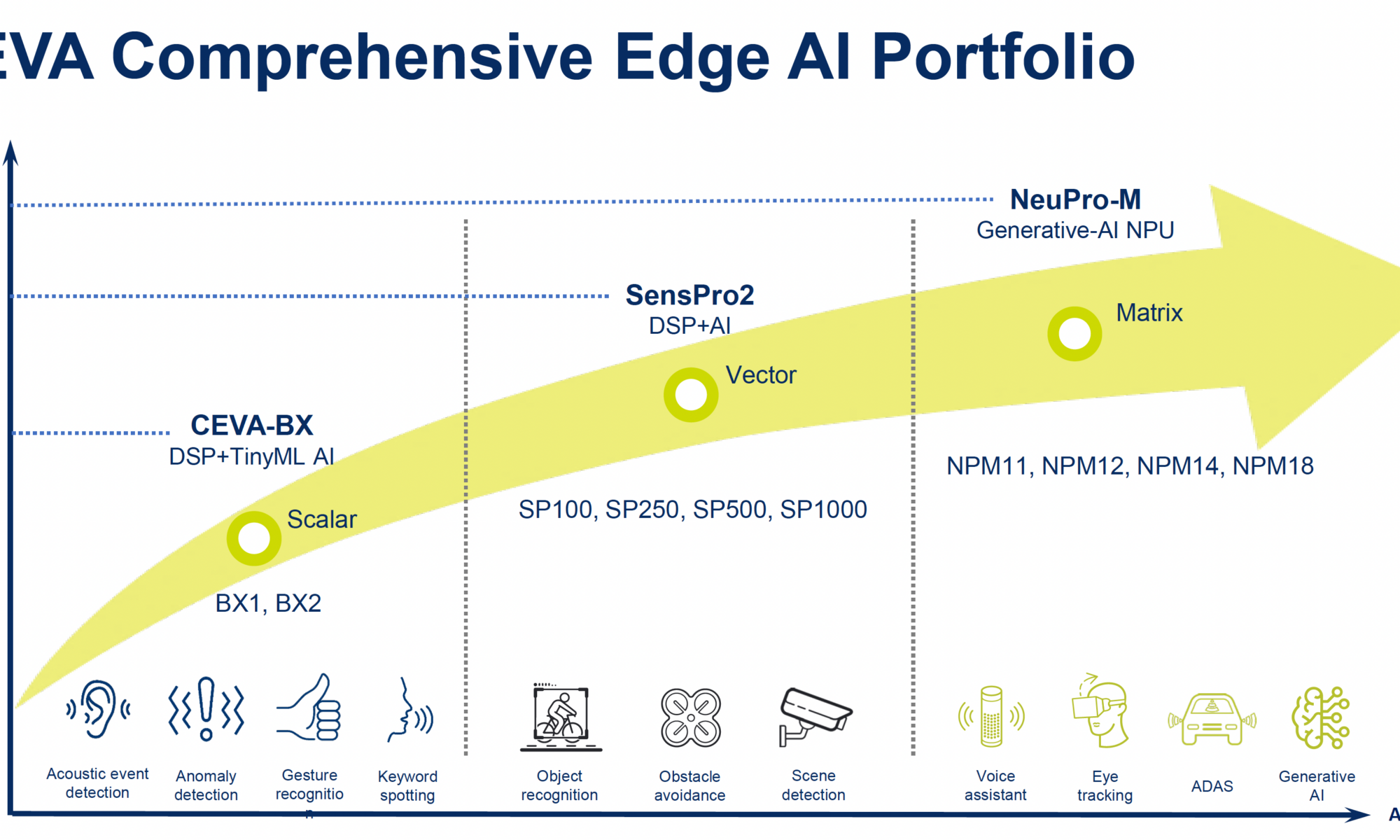

CEVA’s offerings address the full spectrum of AI workloads and architectures and stand out in fulfilling the power, performance, latency, versatility and future-proofing requirements of various AI applications.

CEVA-BX

The flexibility of CEVA-BX processors is a distinctive feature, enabling them to be finely configured and optimized for specific applications, offering a tailored approach to diverse computational needs. Their versatility delivers a delicate equilibrium between performance and power efficiency. This balance positions CEVA-BX as a fitting solution across a broad spectrum of edge computing applications.

SensPro2

Its vector DSP architecture, characterized by high configurability and self-contained functionality, positions SensPro2 as a versatile solution for a variety of applications. Particularly notable is its proficiency in parallel processing, where it excels in high data bandwidth scenarios, making it adept at addressing communication and computer vision tasks. In processing AI workloads, SensPro2 showcases remarkable efficiency, seamlessly handling tasks with a throughput of up to 5 TOPS (Tera-Operations Per Second).
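For a sense of scale, the sketch below converts a peak throughput figure into a best-case latency for one inference. It assumes that one multiply-accumulate counts as two operations, a common convention but not one stated in this article, and it uses a hypothetical workload of one billion MACs. Sustained throughput on real hardware is typically below peak, so the result is a lower bound rather than a prediction.

```python
PEAK_TOPS = 5.0   # the "up to 5 TOPS" figure quoted above
OPS_PER_MAC = 2   # assumption: one multiply plus one add per MAC


def min_latency_seconds(macs_per_inference, peak_tops=PEAK_TOPS, utilization=1.0):
    """Best-case seconds per inference at the given fraction of peak throughput."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    ops = macs_per_inference * OPS_PER_MAC
    return ops / (peak_tops * 1e12 * utilization)


hypothetical_macs = 1_000_000_000  # illustrative workload, not a measured model
latency = min_latency_seconds(hypothetical_macs)
print(f"{latency * 1e3:.2f} ms per inference at peak throughput")
```

Passing a lower `utilization` value, for example 0.5, shows how quickly the estimate degrades when the hardware cannot be kept fully busy.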

NeuPro-M

NeuPro-M handles a diverse spectrum of advanced neural network models, adapting to the evolving landscape of AI applications. Its Neural Processing Unit (NPU) IP reflects CEVA’s commitment to low-power, high-efficiency processing, making NeuPro-M a strong candidate for energy-constrained scenarios. A noteworthy feature contributing to its future-proofing is the built-in VPU (Vector Processing Unit), which allows NeuPro-M to efficiently manage not only current but also emerging and more complex AI network layers, helping it stay relevant in the rapidly advancing field of Edge AI.

Summary

In navigating the diverse landscape of Edge AI architectures, the choice between scalar, vector, and tensor processing architectures involves weighing trade-offs and aligning solutions with specific workload requirements.

CEVA’s pioneering solutions, including CEVA-BX, SensPro2, and NeuPro-M, stand out by providing a comprehensive suite that addresses the three pillars of common AI processing architectures. With a focus on flexibility, efficiency, and future-proofing, CEVA empowers developers and businesses to navigate the complexities of Edge AI, making intelligent architectural choices tailored to the evolving needs of the industry.

