While it’s interesting to hear a tool-vendor’s point of view on the capabilities of their product, it’s always more compelling to hear a customer/user point of view, especially when that customer is NVIDIA, a company known for making monster chips.

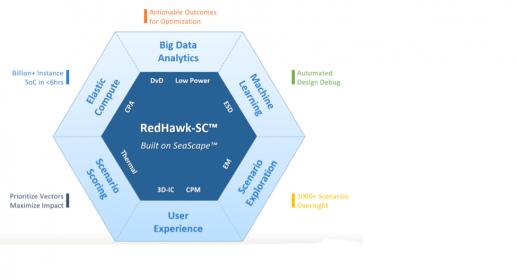

A quick recap on the concept. At 7nm, operating voltages are getting much closer to threshold voltages; as a result, margin management for power becomes much more challenging. You can’t get away with correcting by blanket over-designing the power grid, because the impact on closure and area will be too high. You also have to deal with a wider range of process corners, temperature ranges and other parameters. At the same time, surprise, surprise, designs are becoming much more complex especially (cue NVIDIA) in machine-learning applications with multiple cores and multiple switching modes and much more complexity in use-cases. Dealing with this massive space of possibilities is why ANSYS built the big-data SeaScape platform and RedHawk-SC on that platform, to analyze and refine those massive amounts of data, to find just the right surgical improvements needed to meet EMIR objectives.

Emmanuel Chao of NVIDIA presented on their use of RedHawk-SC on multiple designs, most notably their Tesla V100, a 21B gate behemoth. He started with motivation (though I think 21B gates sort of says it all). Traditionally (and on smaller designs) it would take several days to do a single run of full-chip power rail and EM analysis, even then needing to decompose the design hierarchically to be able to fit runs into available server farms. Decomposing the design naturally makes the task more complex and error-prone, though I’m sure NVIDIA handles this carefully. Obviously, a better solution would be to analyze the full chip flat for power integrity and EM. But that’s not going to work on a design of this size using traditional methods.

For NVIDIA, this is clearly a big data problem requiring big data methods, including handling distributed data and providing elastic compute. That’s what they saw in RedHawk-SC and they proved it out across a wide range of designs.

The meat of Emmanuel’s presentation is in a section he calls Enablement and Results. What he means by enablement is the ability to run multiple process corners, under multiple conditions (e.g. temperature and voltage), in multiple modes of operation, with multiple vector sets and multiple vectorless setting, and with multiple conditions on IR drop. And he wants to be able to do all of this in a single run.

For him this means not only all the big data capabilities but also reusability in analysis – that it shouldn’t be necessary to redundantly re-compute or re-analyze what has already been covered elsewhere. In the RedHawk-SC world, this is all based on views. Starting from a single design view, you can have multiple extraction views, for those you have timing views, for each of these you can consider multiple scenario views and from these, analysis views. All of this analysis fans-out elastically to currently available compute resources, starting on components of the total task as resource becomes available, rather than waiting for all compute resources to be available, as would be the case in conventional parallel compute approaches.

Emmanuel highlighted a couple of important advantages for their work, first that it is possible to trace back hierarchically through views, an essential feature in identifying root causes for any identified problems. The second is that they were able to build custom metrics through the RedHawk-SC Python interface, to select for stress on grid-critical regions, timing-critical paths and other aspects they want to explore. Based on this, they can score scenarios and narrow down to the smallest subset of all parameters (spatial, power domain, frequency domain, ..) which will give them maximum coverage for EMIR.

The results he reported are impressive, especially in the context of that earlier-mentioned multi-day, hierarchically-decomposed single run. They ran a range of designs from ~3M nodes up to over 15B nodes, with run-times ranging from 12 minutes to 14 hours, scaling more or less linearly with the log of design size. Over 15B nodes analyzed flat in 14 hours. You can’t tell me that’s not impressive.

Fast is good, but what about silicon correlation? This was equally impressive. They found voltage droop in analysis was within 10% of measurements on silicon and they also found peak-to-peak periods in ringing (in their measurement setup) were also within 10%. So this analysis isn’t just blazing fast compared to the best massively parallel approaches. It’s also very reliable. And that’s NVIDIA talking.

You can access the webinar HERE.

Share this post via:

Comments

One Reply to “Big Data Analytics and Power Signoff at NVIDIA”

You must register or log in to view/post comments.