Learning-Based Power Modeling. Innovation in Verification

Is it possible to automatically generate abstract power models for complex IP which can both run fast and preserve high estimation accuracy? Paul Cunningham (GM, Verification at Cadence), Raúl Camposano (Silicon Catalyst, entrepreneur, former Synopsys CTO) and I continue our series on research ideas. As always, feedback welcome.

The Innovation

This month’s pick is Learning-Based, Fine-Grain Power Modeling of System-Level Hardware IPs. We found this paper in the 2018 ACM Transactions on Design Automation of Electronic Systems. The authors are from UT Austin.

I find it easiest here to start from the authors’ experiments, and drill down along the way into their methods. They start from Vivado high-level synthesis (HLS) models of complex IP such as generalized matrix multiply and JPEG quantization. These they synthesize against a generic library and run gate-level simulations (GLS) and power estimation. Next they capture activity traces at nodes in the HLS model mapped at three levels of abstraction of the implementation: cycle-accurate traces for datapath resources, block-level IO activity within the IP, and IO activity on the boundary of the IP. Through ML methods they optimize representations to model power based on the three activity abstractions.

There is some sophistication in the mapping and learning phases. In resource-based abstractions, they adjust for out-of-order optimizations and pipelining introduced in synthesis. The method decomposes resource-based models to reduce problem dimensionality. At block and IP levels, the method pays attention to past states on IOs as a proxy for hidden internal state. The authors devote much of the paper to detailing and justifying these methods.
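To make the "past states on IOs" idea concrete, here is a minimal Python sketch. It assumes activity is measured as the Hamming distance between consecutive I/O values, and it uses a hypothetical `history` parameter to set how many past invocations feed each feature vector. The function names are illustrative and are not taken from the paper.

```python
def hamming(a: int, b: int) -> int:
    """Number of bit positions that differ between two integer-encoded values."""
    return bin(a ^ b).count("1")


def io_features(io_values, history=2):
    """Build one feature vector per invocation from boundary I/O values.

    Each vector holds the toggle count of the current invocation followed by
    the toggle counts of the previous `history` invocations (zero-padded at
    the start), so past I/O activity stands in for unobserved internal state.
    """
    # Toggle count between each value and its predecessor (first one vs. 0).
    previous = [0] + list(io_values[:-1])
    toggles = [hamming(prev, cur) for prev, cur in zip(previous, io_values)]
    features = []
    for i in range(len(toggles)):
        window = [toggles[i - k] if i - k >= 0 else 0 for k in range(history + 1)]
        features.append(window)
    return features


# Four invocations of a hypothetical 4-bit I/O port.
feats = io_features([0b1010, 0b1111, 0b0000, 0b0001], history=2)
```

A larger `history` gives the model more context about hidden state, at the cost of more coefficients to train.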

Paul’s view

This is a very important topic, widely agreed to be one of the big still-unsolved problems in commercial EDA. Power estimation must span from low-level physical detail for accurate gate and wire estimation, up to a full software stack and apps running for billions of cycles for accurate activity traces. Abstracting mid-level behavior with good power accuracy and reasonable performance to run against those software loads is a holy grail for EDA.

The scope of this paper spans designs for which the RTL can be generated through high-level synthesis (HLS) from a C++ software model. I see two key contributions in the paper:

- Reverse mapping activity from gate level simulations (GLS) to the C++ model and using this mapping to create a software level power model that enables power estimation from pure C++ simulations of the design.

- Using an ML-based system to train these software level power models based on whatever low level GLS sims are available for the synthesized design.

A nice innovation in the first contribution is a “trace buffer” to solve the mapping problem when the HLS tool shares hardware resources across different lines in the C++ code or generates RTL that permissibly executes C++ code out of order.

The power models themselves are weighted sums of signal changes (activities), with weights trained using standard ML methods. But the authors play some clever tricks, having multiple weights per variable, with each weight denoting a different internal state of the program. Also, their power models conceptually convolve across clock cycles, with the weighted sum including weights for signal changes up to n clock cycles before and after the current clock cycle.
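The structure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the state labels, the weight values, and the `estimate_power` function are hypothetical, and the window simply skips cycles that fall outside the trace.

```python
def estimate_power(activity, states, weights, n=1):
    """Estimate per-cycle power as a state-dependent weighted sum of activity.

    activity: list of per-cycle activity vectors (lists of toggle counts).
    states:   list of per-cycle state labels, same length as activity.
    weights:  dict mapping state -> dict mapping cycle offset (-n..n)
              -> weight vector with one weight per signal.
    """
    power = []
    for c in range(len(activity)):
        w = weights[states[c]]  # weights selected by the current state
        total = 0.0
        for offset in range(-n, n + 1):
            t = c + offset
            if 0 <= t < len(activity):  # skip cycles outside the trace
                total += sum(wi * ai for wi, ai in zip(w[offset], activity[t]))
        power.append(total)
    return power


# Hypothetical two-signal trace over three cycles, with made-up weights.
activity = [[1, 0], [2, 1], [0, 3]]
states = ["load", "mac", "mac"]
weights = {
    "load": {-1: [0.0, 0.0], 0: [1.0, 0.5], 1: [0.1, 0.0]},
    "mac": {-1: [0.2, 0.0], 0: [2.0, 1.0], 1: [0.0, 0.1]},
}
power = estimate_power(activity, states, weights, n=1)
```

Evaluating the model is just a handful of multiply-adds per cycle, which is consistent with why such models can run orders of magnitude faster than gate-level simulation.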

The results presented are solid, though for very small circuits (<25k gates). Also noteworthy is that HLS-based design is still a small fraction of the market. Most designs have a behavioral C++ model and an independent hand-coded RTL model without any well-defined mapping between the two. That said, this is a great paper. Very thought-provoking.

Raúl’s view

The article presents a novel machine learning-based approach for fast, data-dependent, fine grain, accurate power estimates of IPs. It can capture activity traces at three abstraction levels:

- individual resources (“white box” cycle level),

- block (“gray box” basic blocks), and

- I/O transitions (“black box” invocations).

The technique develops a power model for each of these levels, e.g. for power in cycle n and state s

$$p_n = \boldsymbol{\theta}_{s_n} \cdot \mathbf{a}_{s_n}$$

with an activity vector a over relevant signals, and a coefficient vector 𝛉. The approach develops similar models, with fewer coefficients and relevant signals, for blocks and I/O. Training uses the same input vectors as an (accurate) gate-level simulation and adjusts the coefficients using various linear and non-linear regression models.
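As a rough sketch of the training step, the snippet below fits a coefficient vector to reference power values with plain ordinary least squares. The paper is described as using various linear and non-linear regression models; this shows only the simplest linear case, with a hypothetical `fit_coefficients` helper and made-up data. In a state-dependent model, one would presumably run such a fit once per state.

```python
def fit_coefficients(A, p):
    """Ordinary least squares: find theta minimizing sum((A theta - p)^2).

    A: list of activity vectors (one per cycle); p: reference power per cycle.
    Solves the normal equations (A^T A) theta = A^T p by Gaussian elimination.
    Assumes A^T A is non-singular (the activity vectors are not degenerate).
    """
    k = len(A[0])
    # Augmented matrix [A^T A | A^T p].
    M = [
        [sum(row[i] * row[j] for row in A) for j in range(k)]
        + [sum(row[i] * y for row, y in zip(A, p))]
        for i in range(k)
    ]
    # Forward elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, k):
            f = M[r][col] / M[col][col]
            for c in range(col, k + 1):
                M[r][c] -= f * M[col][c]
    # Back substitution.
    theta = [0.0] * k
    for i in reversed(range(k)):
        rest = sum(M[i][j] * theta[j] for j in range(i + 1, k))
        theta[i] = (M[i][k] - rest) / M[i][i]
    return theta


# Made-up activity vectors and gate-level reference power for four cycles.
A = [[1, 0], [0, 1], [1, 1], [2, 1]]
p = [3.0, 0.5, 3.5, 6.5]
theta = fit_coefficients(A, p)
```

Here the reference data is exactly linear in the activity, so the fit recovers the generating coefficients. Real gate-level power traces would leave a residual, which is where the reported error percentages come from.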

Experiments used Vivado HLS (Xilinx), Synopsys DC, and PrimeTime PX for power estimation. They tested 5 IP blocks ranging from 700 to 22,000 gates. The authors were able to generate models within 34 minutes for all cases. Most of this time was spent generating the actual gate-level power traces. The accuracy at cycle-, block-, and I/O-level is within 10%, 9%, and 3%, respectively, of the commercial gate-level estimation tool (presumably PrimeTime), and the models run 2000x to 15,000x faster. They also write briefly about demonstrating benefits of these models for virtual platform prototyping and system-level design space exploration. Here they integrated the models into the gem5 system simulator.

This is an interesting and possibly very useful approach to power modeling. The results speak for themselves, but doubts remain about how general it is. How many signal traces are needed to train and what is the maximum error for a particular circuit; can this be bounded? This is akin to “just 95% accuracy” of image recognition. There is perhaps the potential to develop and ship very fast/accurate power estimation models for IP.

My view

I spent quite a bit of time over the years on power estimation so I’m familiar with the challenges. Capturing models which preserve physical accuracy across a potentially unbounded state space of use cases is not a trivial problem. Every reasonable attempt to chip away at the problem is worthy of encouragement 😃

Also Read

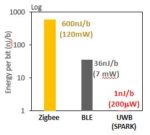

Battery Sipping HiFi DSP Offers Always-On Sensor Fusion

Memory Consistency Checks at RTL. Innovation in Verification