The old adage that “Time is Money” certainly rings true in the semiconductor world, where IC designers are challenged to get their new designs to market quickly and working correctly in the first spin of silicon. Circuit designers work at the transistor level, and running circuit simulations in SPICE tools is one of their most time-consuming tasks, so any relief is welcome. There is one caveat: engineers need fast and correct answers, not fast incorrect answers, so accuracy remains a firm requirement.

Yes, there’s a category of Fast SPICE simulators out there; however, they tend to work best for mostly digital circuits. So what choices do you have for the most challenging analog circuits?

One promising new development for SPICE circuit simulation is running jobs on a GPU, instead of a general-purpose CPU. I attended a webinar this month presented by Chen Zhao, of Empyrean, where he talked about their approach to GPU-powered SPICE.

Most EDA software companies are located in Silicon Valley, Austin, Boston, Europe or Japan; Empyrean, however, started out in Beijing, China back in 2009 and has grown to some 300 people. I’ve seen them at DAC for the last couple of years, and they’re becoming more visible in the US with an office in San Jose.

The challenges for Analog simulation are well known: FinFET devices have complex models that evaluate slowly, there are more parasitics with each new interconnect layer, process variations require more simulations, and all IP blocks must be verified.

The circuit simulator from Empyrean is called ALPS, an acronym for Accurate Large capacity Parallel SPICE. With ALPS they created a full-SPICE accurate simulator that is 3X to 5X faster, has a capacity of 100M elements, and has been silicon proven down to 7nm. Reaching this speed required a new approach to solving the matrices used in SPICE, and they call that technology Smart Matrix Solver (SMS).
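To make “solving the matrices used in SPICE” a bit more concrete, here is a minimal sketch of the kind of linear system a SPICE-style simulator builds and solves. It is not Empyrean’s SMS, and the circuit, the function names (`stamp_resistor`, `solve`, `example_node_voltages`) and the component values are all made up for illustration. It stamps three resistors into a nodal conductance matrix and solves G·v = i with plain Gaussian elimination.

```python
# Illustrative only: a tiny nodal-analysis solve, not any vendor's algorithm.
# Node 0 is ground; nodes 1..n are the unknown voltages.

def stamp_resistor(G, a, b, ohms):
    """Add a resistor between nodes a and b to the conductance matrix G."""
    g = 1.0 / ohms
    for n in (a, b):
        if n > 0:
            G[n - 1][n - 1] += g
    if a > 0 and b > 0:
        G[a - 1][b - 1] -= g
        G[b - 1][a - 1] -= g

def solve(G, rhs):
    """Solve G * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    A = [row[:] + [rhs[i]] for i, row in enumerate(G)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= f * A[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(A[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (A[r][n] - s) / A[r][r]
    return x

def example_node_voltages():
    """Hypothetical circuit: 1 mA into node 1, three resistors."""
    G = [[0.0, 0.0], [0.0, 0.0]]
    stamp_resistor(G, 1, 2, 1000.0)  # R1 between node 1 and node 2
    stamp_resistor(G, 2, 0, 1000.0)  # R2 from node 2 to ground
    stamp_resistor(G, 1, 0, 2000.0)  # R3 from node 1 to ground
    rhs = [1e-3, 0.0]                # current source injects 1 mA at node 1
    return solve(G, rhs)

if __name__ == "__main__":
    print(example_node_voltages())
```

A real simulator repeats a solve like this at every Newton iteration of every time step, on a sparse matrix with up to millions of rows, which is why the matrix solver is a natural place to look for speedups.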

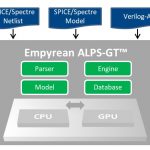

GPUs have a massively parallel architecture, so Empyrean created a version of their ALPS simulator called ALPS-GT to harness the GPU, which then provides up to a 10X speed improvement for circuit simulation run times.

With a 10X speed improvement you can now reach your tapeout goal more quickly, simulate more PVT corners and even simulate scenarios that weren’t feasible with older, slower simulators.
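As a back-of-the-envelope illustration of what that means for PVT coverage, the sketch below counts corners and estimates total simulation time with and without a speedup. Every number in it (corner counts, hours per run) is a hypothetical placeholder, not data from the webinar, and the functions `corner_count` and `total_hours` are invented for this example.

```python
# Illustrative arithmetic only; all inputs are hypothetical placeholders.

def corner_count(process, voltage, temperature):
    """Number of PVT corners for a full cross product of the three axes."""
    return process * voltage * temperature

def total_hours(corners, hours_per_run, speedup=1.0):
    """Total serial simulation time for all corners at a given speedup."""
    return corners * hours_per_run / speedup

if __name__ == "__main__":
    corners = corner_count(process=5, voltage=3, temperature=3)
    baseline = total_hours(corners, hours_per_run=2.0)
    accelerated = total_hours(corners, hours_per_run=2.0, speedup=10.0)
    print(corners, baseline, accelerated)
```

With these assumed inputs, a sweep that would occupy several days of serial run time fits into a single working day, which is the kind of shift that makes extra corners affordable.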

ALPS-GT accepts netlists in HSPICE and Spectre formats, handles all of the popular model files, accepts Verilog-A, and can even co-simulate with 3rd party Verilog simulators. Output file formats are the industry standard tr0 and fsdb, so you can keep using your favorite viewers.

The 10X speed improvement with ALPS-GT comes from the Smart Matrix Solver being optimized to run on a GPU; this SMS-GT solver is 5X faster than the standard NVIDIA-supplied CUDA matrix solver. Chen showed examples from customer circuits where ALPS-GT was much faster than a competitor.

As the netlist size grows and you start to add extracted parasitics, the speed differences between ALPS-GT and the competition grow even larger. Chen showed two examples with millions of parasitics in the netlist.

In this comparison, the competitor SPICE tool would have taken over 100 days to complete a single simulation, which isn’t practical to even consider.

Summary

The need for speed, capacity and accuracy is ever present for SPICE circuit simulators, and the engineers at Empyrean have harnessed the capabilities of the GPU to speed up run times while maintaining SPICE accuracy. If you’d like to view the recorded webinar, then here’s the link.
