I’m a fan of getting customer reality checks on advanced design technologies. This is not so much because vendors put the best possible spin on their product capabilities; of course they do (within reason), as does every other company aiming to stay in business. But application by customers on real designs often shows lower performance, QoR or whatever metrics you care about than you will see in ideal claims, not because the vendor wants to mislead you but because they can’t quantify the inefficiencies inherent in live design environments. That’s why customer numbers are so interesting; they reflect what you’re most likely to see.

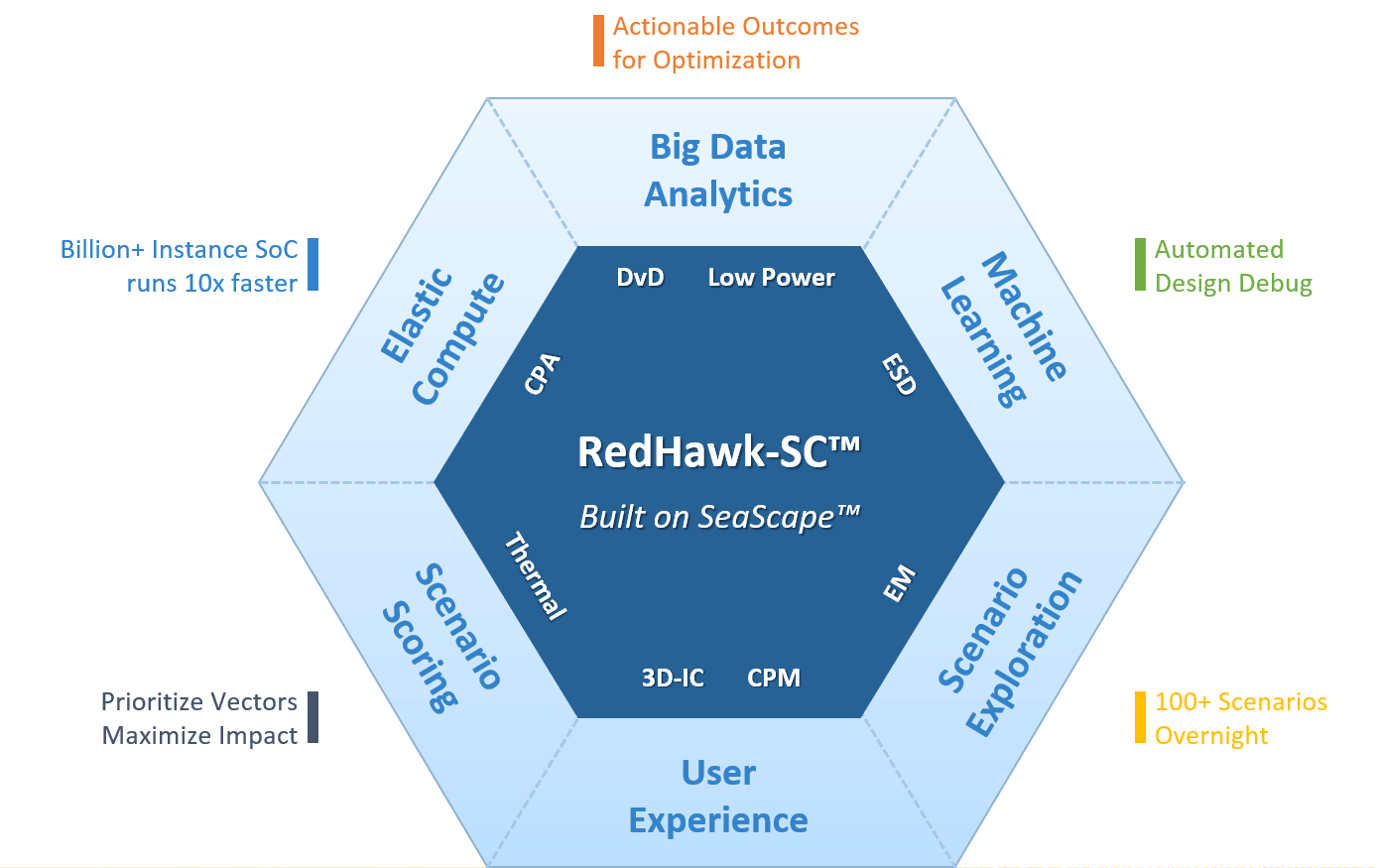

ANSYS has already hosted webinars with customers like NVIDIA and others talking about the benefits of big data/elastic compute in optimizing power integrity, EM and other factors for their designs. In a recent webinar Xilinx added their viewpoint in looking for scalability in analysis for their designs at 7nm and beyond, particularly in use of SeaScape, RedHawk-SC and Path FX. Why they felt the need to do this becomes apparent when you see some of the stats below.

The customer presenter for this webinar was Nitin Navale, CAD Manager at Xilinx responsible for timing analysis and EM/IR. He started by explaining a little about their architecture to give context for the rest of the discussion. The top level of a die is made up of between 100 and 400 fabric sub-regions (FSRs), each of which contains between 2500 and 5000 IP block instances. A packaged part may contain one or more dies in a 2.5D configuration on an interposer. In this context, analysis for timing, EM and IR is done first at the die level and then across dies in a multi-die package.

SeaScape

The first application Nitin discussed was STA. Surely this will all be managed by the standard signoff timing tools? It seems the days of full-flat STAs are behind us, and they would be pointless anyway for the kind of analyses Nitin and colleagues need. So you have to strip away all but a select set of logic for analysis, but he told us that even after stripping back to a single FSR an STA run would not complete. The problem here is apparently that in Xilinx designs you may have to blackbox (BB) millions of instances; all those blackboxes and their pins still consume too much memory.

What they wanted was not just to BB millions of instances but remove them and floating nets completely. Xilinx programmed this operation in Python on top of standalone SeaScape. Remember their first test kept a single FSR, with everything but blocks of interest BBed, and that wouldn’t complete in STA. They ran their SeaScape script (taking just over 6 hours) then STA completed in 12 hours per corner. A medium-sized design (33 FSRs retained) after stripping back in SeaScape ran through STA in 4 days per corner. (Nitin also talks about running Path FX trials here, which ran much faster than the equivalent STA. I’ll touch on that tool below.)
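To make the idea concrete, here is a minimal sketch of that kind of pruning in plain Python. It is not the SeaScape API and not Xilinx's script; the netlist layout, the function name `prune_netlist` and the rule that a net with fewer than two remaining connections counts as floating are all assumptions for illustration (a real flow would also have to account for primary ports).

```python
from collections import defaultdict

def prune_netlist(instances, keep):
    """Drop every instance not in `keep`, then drop nets left floating.

    instances: dict of instance name -> dict of pin name -> net name
    keep: set of instance names to retain (the blocks of interest)
    """
    # Remove unwanted instances outright instead of blackboxing them.
    kept = {name: pins for name, pins in instances.items() if name in keep}

    # Count how many remaining pins touch each net.
    connections = defaultdict(int)
    for pins in kept.values():
        for net in pins.values():
            connections[net] += 1

    # Assumption: a net with fewer than two remaining connections is floating.
    live_nets = {net for net, count in connections.items() if count >= 2}

    # Disconnect pins that were attached to floating nets.
    pruned = {
        name: {pin: net for pin, net in pins.items() if net in live_nets}
        for name, pins in kept.items()
    }
    return pruned, live_nets

# Hypothetical four-instance netlist; the two "bb" instances are not of interest.
netlist = {
    "u_lut": {"I0": "n1", "O": "n2"},
    "u_ff": {"D": "n2", "Q": "n3"},
    "u_bb_dsp": {"A": "n3", "P": "n4"},
    "u_bb_ram": {"DI": "n4", "DO": "n1"},
}
pruned, nets = prune_netlist(netlist, keep={"u_lut", "u_ff"})
print(pruned)
print(nets)
```

The point of the sketch is only that the removed instances and their pins no longer exist in the data handed to STA, which is where the memory saving would come from.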

Now you could do all this fancy stripping in the reference timing tools but not very quickly. There’s nothing intrinsically parallelizable about Tcl scripts and even if you do some very clever scripting you would have to make sure those scripts wouldn’t get confused on overlapping paths. SeaScape takes care of all this by directly managing map-reduce and compute distribution. Pretty neat that Xilinx were able to use SeaScape to make 3rd party tool runs viable.
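For readers who haven't met map-reduce, the shape of the computation looks roughly like the sketch below. This uses only the Python standard library and is not how SeaScape is implemented; the function names, the sharding scheme and the instance names are hypothetical, and threads stand in for what would really be distributed worker machines.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

def shard(items, n):
    """Split items into n roughly equal, non-overlapping chunks."""
    items = sorted(items)
    return [items[i::n] for i in range(n)]

def map_find_removable(chunk, blocks_of_interest):
    """Map step: each worker inspects only its own chunk."""
    return {name for name in chunk if name not in blocks_of_interest}

def reduce_union(a, b):
    """Reduce step: merge partial results from two workers."""
    return a | b

def find_removable(instance_names, blocks_of_interest, workers=4):
    chunks = shard(instance_names, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(
            pool.map(lambda c: map_find_removable(c, blocks_of_interest), chunks)
        )
    return reduce(reduce_union, partials, set())

# Hypothetical instance names inside one FSR.
names = [f"fsr0/ip{i}" for i in range(10)]
removable = find_removable(names, blocks_of_interest={"fsr0/ip2", "fsr0/ip7"})
print(sorted(removable))
```

Because the chunks don't overlap and the reduce step is a simple union, no worker needs to know what any other worker is doing, which is the property that is hard to get from a hand-rolled Tcl script.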

RedHawk-SC

Next up, Nitin talked about their trials with RedHawk-SC (the SeaScape-based version of RedHawk) for EM and IR analysis. Here they have the same scale problems as for STA, except that RedHawk-SC has SeaScape built in and so can natively work at full-chip scale. He mentioned that when they were doing analysis on UltraScale back in 2015, they had to break the design into 7 partitions. It took one person-month to do the initial analysis and one person-week to do an iterative run with ECOs. On the Versal product (2018) this jumped to five person-months on the initial run and five person-weeks per iteration. Clearly this won’t scale to larger designs, which is why they started looking at the ANSYS products.

Nitin shared preliminary data here. Run-time dropped by 3X on the small experiment and by 10X on the mid-size one, and they saw good correlation between RedHawk and RedHawk-SC results. Nitin said they’ve seen enough already; they’ll be deploying RedHawk-SC on their next chips. Also interesting: the RedHawk (not SC) runs need a machine with almost 1TB of memory, whereas RedHawk-SC distributes to worker machines needing only 30GB of memory each. Worth considering when you’re thinking about requesting more expensive servers.

Path FX

Nitin wrapped up with a discussion on their trials on Path FX, ANSYS’ Spice-accurate path timer. In the SeaScape trials I mentioned earlier, Path FX was running 4X faster than STA on the mid-size design, already notable. Of course you don’t switch to a different reference signoff of that importance based on a couple of tests, but Xilinx have another application where Path FX looks like a very interesting potential fit. In Vivado (the Xilinx design suite) it would be impossibly expensive to re-time a compile each time so the tool uses lookup tables for combinational paths. Populating those lookup tables is something Xilinx calls timing capture, and requires that paths be timed independently. While some parallelism is possible through grouping, this can become complicated on a reference STA tool and still only runs on a single host even with multi-core and multi-threading. Apparently resolving path overlaps further reduces performance.

Path FX however can take advantage of the same underlying elastic compute technology, intelligently distributing paths to workers and calculating pin-to-pin delays simultaneously, even on conflicting paths. And it’s more accurate than Liberty-based timing since it’s closer to Spice. They again ran a trial on a single FSR-based design. Compile was 4X faster and timing was 10X faster, compared with STA running fully parallelized. Correlation was also pretty good, though Nitin cautioned they (Xilinx) made a mistake in setting up the libraries. They have to correct this and re-run to check correlation and run-time. However he likes where this number is starting, even if it might be a bit wrong.
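As a rough illustration of what timing capture looks like as a computation, the sketch below times each path independently on a pool of workers and collects the results into a pin-to-pin lookup table. This is a toy under stated assumptions, not Path FX or Vivado: the arc delays are made-up numbers, path delay is simply the sum of its arcs, and `time_path` and `capture` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical per-arc delays in picoseconds (illustrative values only).
ARC_DELAY_PS = {("A", "B"): 12.0, ("B", "C"): 7.5, ("B", "D"): 9.0}

def time_path(path):
    """Time one path on its own; returns ((start pin, end pin), delay)."""
    delay = sum(ARC_DELAY_PS[(a, b)] for a, b in zip(path, path[1:]))
    return (path[0], path[-1]), delay

def capture(paths, workers=4):
    """Distribute paths to workers and build the pin-to-pin lookup table."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(time_path, paths))

# Two paths that overlap on the A -> B arc, each timed independently.
paths = [("A", "B", "C"), ("A", "B", "D")]
table = capture(paths)
print(table)
```

Since each path carries everything needed to time it, overlapping paths don't have to be grouped or serialized, which is the behavior the webinar attributes to Path FX.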

Overall, pretty compelling evidence that the elastic compute approach is more widely effective than parallelism in accelerating big tasks. You can register to watch the full webinar HERE.
