I’ve written before about how Ansys applies big data analytics and elastic compute in support of power integrity and other types of analysis. A good example of the need follows this reasoning: Advanced designs today require advanced semiconductor processes – 16nm and below. Designs at these processes run at low voltages, much closer to threshold voltages than previously. This makes them much more sensitive to local power fluctuations; a nominally safe timing path may fail in practice if temporary local current demand causes a local supply voltage to drop, further compressing what is now a much narrower margin.

This presents two problems: first, ensuring you have found all such possibilities on a massive design across a very wide range of use-cases, and second, fixing the problem in a way that neither makes design closure impossible nor causes area to explode. Ansys recently hosted a webinar focusing particularly on the first of these problems, providing some revealing insights into how they and their customers go about scenario development in this big data approach, a critical aspect in getting to good coverage across those massive designs and use-cases.

To give a sense of problem scale, customers’ goals were to run power integrity analysis – flat – on the largest GPU today (20B instances) and on the largest FPGA today. To give a sense of analytics/compute scale, while analysis is typically farmed out to distributed systems/clouds, some cases have been run on the Intel-based Endeavour 900-core system at NASA. The point is that this style of analysis can take advantage of 900 cores.

Now back to scenario development. The speaker defines a scenario in this context as a set of logic events on instances in the design, representing some use-case of the design. When dealing with a massive amount of data, the speaker rather nicely explains that the goal is to curate the most important use-case examples from that data, so that subsequent analysis gets high coverage. You want to narrow down to small time-slices out of what could be the equivalent of hours or more of simulation, get to the important process corners for those cases, and look at the impact on IR drop and therefore on timing, all while ending up with a workable set of scenarios for detailed analysis.

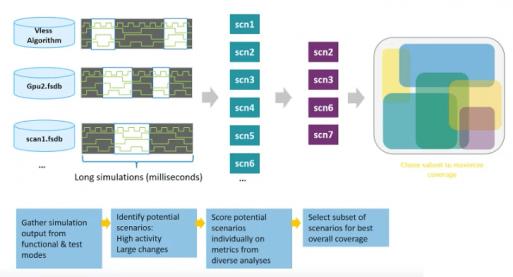
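To make the time-slice idea concrete, here is a minimal sketch of picking the busiest short windows out of a long activity trace. It is not the RedHawk-SC method; the function name `top_windows`, the per-cycle toggle-count input and the non-overlap rule are all assumptions made for illustration.

```python
from typing import List, Tuple

def top_windows(toggles: List[int], width: int, k: int) -> List[Tuple[int, int]]:
    """Return up to k non-overlapping (start_cycle, total_toggles) windows,
    busiest first. `toggles` is a hypothetical per-cycle toggle count."""
    if k <= 0 or width <= 0 or width > len(toggles):
        return []
    # Rolling sum over every window of the requested width.
    total = sum(toggles[:width])
    sums = [(total, 0)]
    for start in range(1, len(toggles) - width + 1):
        total += toggles[start + width - 1] - toggles[start - 1]
        sums.append((total, start))
    picked: List[Tuple[int, int]] = []
    # Visit windows from busiest to quietest; ties go to the earlier window.
    for total, start in sorted(sums, key=lambda t: (-t[0], t[1])):
        # Skip windows that overlap one already picked.
        if all(abs(start - s) >= width for s, _ in picked):
            picked.append((start, total))
            if len(picked) == k:
                break
    return picked

# Illustrative trace only: two bursts of activity separated by quiet cycles.
trace = [1, 2, 9, 8, 1, 0, 0, 7, 7, 1]
busiest = top_windows(trace, width=2, k=2)
```

In practice the trace would be far longer and spread across distributed workers, and the selection would also weigh how sharply activity changes, not just how high it is.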

He describes how this can start from multiple inputs, from vectorless analysis to analyses of different use-cases (e.g. GPU and scan). Remember, this runs through distributed compute, so it can be very efficient even though it is dealing with multiple data sources. Analysis starts by identifying likely scenarios with high activity and substantial changes. These are then scored through a variety of metrics and, based on those metrics, a subset of scenarios is selected to offer best coverage.

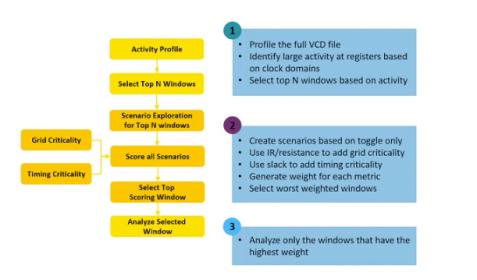
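The webinar does not spell out how the subset is chosen, so the following is only a sketch of one common way to pick a small set with good coverage: a greedy set-cover heuristic. The scenario names, instance names and the idea of representing each scenario as the set of instances it exercises are assumptions for illustration.

```python
from typing import Dict, List, Set

def select_scenarios(candidates: Dict[str, Set[str]], budget: int) -> List[str]:
    """Greedily pick up to `budget` scenarios, each time taking the one that
    adds the most instances not yet covered (ties broken by name)."""
    covered: Set[str] = set()
    chosen: List[str] = []
    remaining = dict(candidates)
    while remaining and len(chosen) < budget:
        best = max(sorted(remaining), key=lambda n: len(remaining[n] - covered))
        gain = remaining[best] - covered
        if not gain:
            break  # nothing left adds new coverage
        chosen.append(best)
        covered |= gain
        del remaining[best]
    return chosen

def coverage(candidates: Dict[str, Set[str]], chosen: List[str]) -> float:
    """Fraction of all instances seen in any candidate that `chosen` covers."""
    universe = set().union(*candidates.values()) if candidates else set()
    hit = set().union(*(candidates[n] for n in chosen)) if chosen else set()
    return len(hit) / len(universe) if universe else 0.0

# Hypothetical scenarios mapped to the (hypothetical) instances they stress.
candidates = {
    "gpu_burst": {"u1", "u2", "u3", "u4"},
    "scan_shift": {"u4", "u5", "u6"},
    "vectorless": {"u1", "u2"},
    "idle_wake": {"u7"},
}
picked = select_scenarios(candidates, budget=2)
```

Here two scenarios already cover six of the seven instances, and `vectorless` is never picked because everything it exercises is covered by `gpu_burst`. A real flow would weight instances by the scoring metrics rather than counting them equally.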

The platform (SeaScape under, in this case, RedHawk-SC) provides a Python interface to the underlying analytics engines, making it easy to develop metrics while making the big data part of the analysis transparent. Here first-cut high activity domains are identified at registers by clock domain, from which they select some top set of candidates from this first cut. Then they dive into a multi-dimensional analysis where they look simultaneously at activity (toggle rate), corresponding IR drop (based on the grid) and slack (from STA). Here the engine is pulling in data from multiple sources to provide a multi-physics view. Through Python, metrics are generated to weight these cases and the worst-cases are selected for more detailed analysis.

The speaker goes on to describe how this approach is used for local hot-spot analysis, for example across a multi-core design. He also shows, through a number of layout/weighting visualizations, how hot-spot conclusions based on complex weighting over multiple parameters (timing, IR drop, etc.) can look quite different from those based on a single-dimension analysis, an indicator that this kind of approach will deliver higher confidence in results than those earlier approaches. This is echoed by a customer who noted that they were used to <50% coverage of use-cases from very long runs, but with this approach now feel they are getting closer to 76% coverage, within 2-hour run-times.

I have talked here only about power noise analysis versus timing criticality. I should add that this is just one aspect of the analysis that can be performed. RedHawk-SC also covers thermal, EM and other aspects of the integrity problem using the same approach with the same multi-physics perspective. Effective scenario development is an important part of getting to high-confidence results. This webinar will give you more insight into how customers, working with Ansys, have been able to optimize scenario selection. You can view the webinar HERE.
