Compute environments have advanced significantly over the past several years. Microprocessors have gained performance largely by adding more cores, available RAM has grown substantially, and the cloud has made massively distributed computing easier and cheaper to access.

HFSS has evolved to take advantage of these new capabilities and, as a result, is orders of magnitude more capable of solving large designs, including designs you could barely imagine solving before. For some customers, this means solving more design variations in parallel to find the optimal design before manufacturing the first prototype. For others, it means spending less time and thought simplifying designs and instead building models that include more of the electronic component's details and its surrounding system, such as the device enclosure, or even placing the component in its operating environment.

The time required to prepare a model for solving with HFSS has decreased significantly over the years as various steps in the process have become automated. But whether the steps are automated or done manually, every simplification involves a decision: will removing that detail affect accuracy, and will it save significant computational time? Making those decisions, or compromises, requires experience and expertise.

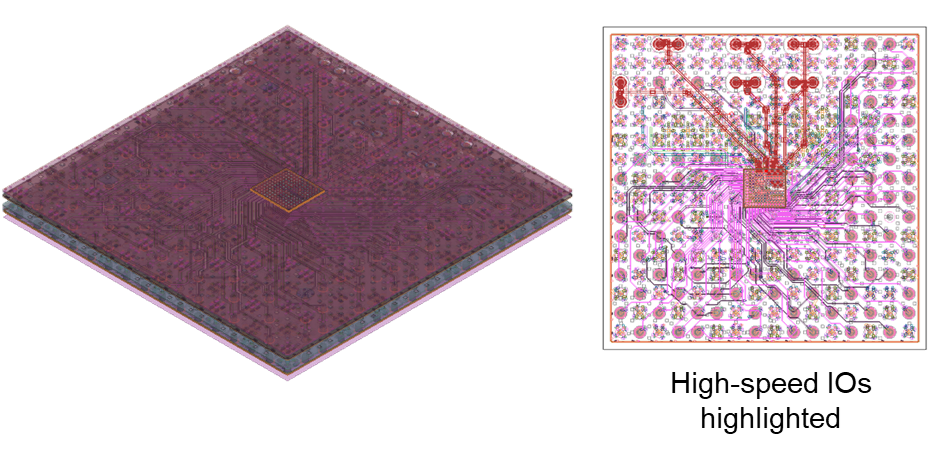

Customers are compromising less and achieving more by using current best practices in the latest version of HFSS. Take, for example, this PAM4 package model from Socionext, where the goal is to extract 12 critical high-speed IO nets.

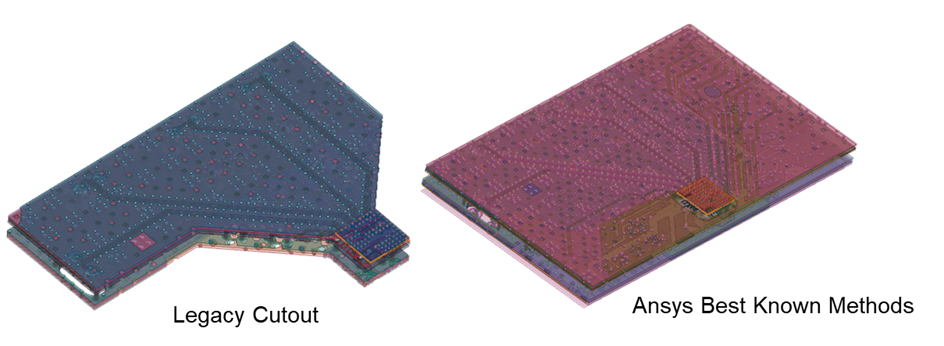

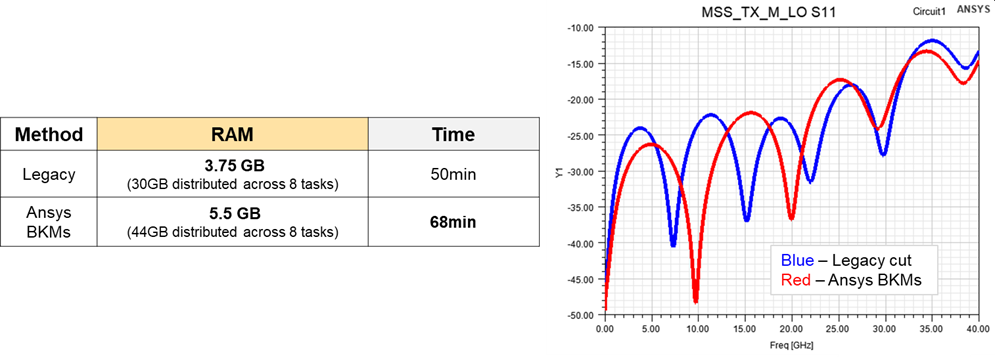

A legacy best practice is to cut the package model down as much as possible to reduce the overall RAM footprint. This often results in a complex-shaped cutout with boundaries very close to the critical nets of interest. How close is too close? Answering that without compromising accuracy requires experience and expertise. Let’s compare this legacy complex-cutout method to a simpler, rectangular cut that preserves the true boundaries of the original package model.

The additional RAM and solve time are not significant, especially considering the time and thought that go into creating a conformal cutout, and the unfortunate loss of accuracy when, as in the case above, a differential pair proved to be “too close” to the conformal cut boundary! With the cost of RAM greatly reduced and compute resources more readily accessible, there is no longer a need to compromise on accuracy by following legacy best practices such as creating conformal cutouts.

But wait: did you miss the fine print in the table above? What does it mean that the Ansys BKM model used “5.5GB (44GB distributed across 8 tasks)”? It means the model was solved on 8 compute nodes, requiring at most 5.5GB on any one node and 44GB in total when you add up the RAM used on all 8 nodes.
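The two numbers in that fine print are related by simple arithmetic: the peak is the largest per-node value, and the total is the sum over all nodes, so the total can never exceed the node count times the peak. The minimal sketch below computes both from a list of per-node peaks. The function name `summarize_distributed_ram` is hypothetical, and the per-node list is an assumption inferred from the reported figures: because 44GB / 8 = 5.5GB equals the reported maximum, every node must have peaked at about 5.5GB, meaning the memory load was spread roughly evenly.

```python
def summarize_distributed_ram(per_node_peak_gb):
    """Return (largest per-node peak, total across nodes), both in GB."""
    if not per_node_peak_gb:
        raise ValueError("need at least one node")
    return max(per_node_peak_gb), sum(per_node_peak_gb)


# Assumed per-node peaks: 8 equal values, inferred from the reported
# 5.5GB maximum and 44GB total (not measured data).
per_node = [5.5] * 8
peak, total = summarize_distributed_ram(per_node)

# The total is bounded by node count times peak; equality holds only
# when every node used the same amount of RAM.
assert total <= len(per_node) * peak
print(f"max per node: {peak} GB, total: {total} GB")
```

Read the other way, the sketch shows why the distributed figure matters: no single machine needed 44GB, only 5.5GB.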

Distributing the solve process across multiple nodes is enabled by default through HFSS’s automatic HPC setting. In other words, you only need to submit the job to run on multiple nodes, and HFSS will distribute the solve process automatically. Using automatic HPC settings and submitting jobs to Ansys Cloud are two HFSS HPC best practices, and both were used to solve the Socionext PAM4 package model above. Simply following these two practices makes it easy to solve bigger problems faster than ever before.
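To give a feel for what “distributing the solve process” can mean, the sketch below splits one kind of independent work item, the frequency points of a sweep, across a fixed number of tasks using round-robin assignment. This is a generic illustration under stated assumptions, not HFSS’s actual scheduling algorithm: the function name `distribute_points`, the 0 to 50 GHz sweep, and the choice of round-robin are all hypothetical.

```python
def distribute_points(freqs_ghz, num_tasks):
    """Assign each frequency point to a task, round-robin.

    Hypothetical illustration only: returns one list of frequencies per task.
    """
    if num_tasks < 1:
        raise ValueError("num_tasks must be >= 1")
    buckets = [[] for _ in range(num_tasks)]
    for i, f in enumerate(freqs_ghz):
        # Point i goes to task i mod num_tasks, cycling through the tasks.
        buckets[i % num_tasks].append(f)
    return buckets


# Assumed sweep: 21 points from 0 to 50 GHz in 2.5 GHz steps, over 8 tasks.
sweep = [i * 2.5 for i in range(21)]
for task_id, points in enumerate(distribute_points(sweep, 8)):
    print(f"task {task_id}: {points}")
```

Round-robin keeps the number of points per task within one of each other (here, five tasks get 3 points and three get 2), which is the kind of balance an automatic scheduler aims for so that no single node becomes the bottleneck.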

Not sure how much of your layout you can preserve when performing a full 3D extraction with HFSS? Get ready to be amazed by HFSS’s speed and capacity: read this blog post to discover that HFSS on Ansys Cloud can be used to model an entire RFIC!

HFSS best practices have evolved to reduce time spent both in pre-processing, by eliminating the compromises made when simplifying models, and in solving, by taking advantage of distributed computing and the cloud, especially Ansys Cloud. To summarize a few of the best practices described in this blog: 1) use the latest version of HFSS; 2) forgo complex-shaped cutouts, because their small RAM savings no longer justify the risk to accuracy; and 3) use automatic HPC settings on Ansys Cloud. These are just a few examples that apply across many layout-based applications; please work with the Ansys Customer Excellence team to make sure you’re using all the latest best practices for your specific application.

If it has been some time since you reviewed and updated your HFSS practices and scripts, you are leaving performance and accuracy on the table. You are entitled to the benefits of HFSS’s advancements; you are paying for them, so make sure you use them. They can boost your productivity by 10x or more.

Related link: The Easiest New Year’s Resolution: Better, Faster Simulations

