Semiconductor capital expenditures (CapEx) increased 35% in 2021 and 15% in 2022, according to IC Insights. Our projection at Semiconductor Intelligence, based primarily on company statements, is a 14% decline in CapEx in 2023. Memory companies will make the biggest cuts, with a 19% drop overall: CapEx will fall 50% at SK Hynix and 42% at Micron Technology. Samsung, which increased CapEx by only 5% in 2022, will hold at about the same level in 2023. Foundries will cut CapEx by 11% in 2023, led by TSMC with a 12% cut. Among the major integrated device manufacturers (IDMs), Intel plans a 19% cut. Texas Instruments, STMicroelectronics and Infineon Technologies will buck the trend by increasing CapEx in 2023.

Companies making significant CapEx cuts are generally tied to the PC and smartphone markets, both of which are in a slump in 2023. IDC’s June forecast had PC shipments dropping 14% in 2023 and smartphone shipments dropping 3.2%. The PC decline mainly affects Intel and the memory companies. The weakness in smartphones primarily affects TSMC (with Apple and Qualcomm among its largest customers) as well as the memory companies. The IDMs that are increasing CapEx in 2023 (TI, ST and Infineon) are more closely tied to the automotive and industrial markets, which remain healthy. The three largest spenders (Samsung, TSMC and Intel) will account for about 60% of total semiconductor CapEx in 2023.

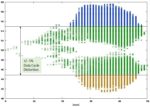

The years of highest CapEx growth tend to coincide with the peak growth year of each semiconductor market cycle. The chart below shows the annual change in semiconductor CapEx (green bars, left scale) and the annual change in the semiconductor market (blue line, right scale). Since 1984, each significant peak in semiconductor market growth (20% or greater) has coincided with a significant peak in CapEx growth. In almost every case, the sharp slowdown or decline in the semiconductor market in the year after the peak has led to a CapEx decline one or two years after the peak. The exception is the 1988 peak: CapEx did not decline the following year, and was flat two years after the peak.

This pattern has contributed to the volatility of the semiconductor market. In a boom year, companies sharply increase CapEx to expand production. When the boom collapses, they cut CapEx. This pattern often leads to overcapacity following boom years, which can drive price declines and deepen the market downturn. A more logical approach would be to increase CapEx steadily each year based on long-term capacity needs. However, this approach can be difficult to sell to stockholders: they will generally support strong CapEx growth in a boom year, but not continued CapEx growth in weak years.

Since 1980, semiconductor CapEx as a percentage of the semiconductor market has averaged 23%. However, the percentage has ranged from 12% to 34% on an annual basis and from 18% to 29% on a five-year-average basis. The five-year average follows a cyclical pattern. Its first peak was 28% in 1985, the year the semiconductor market dropped 17%, at that time its largest decline ever. The five-year average then declined for nine years before eventually returning to a peak of 29% in 2000. In 2001 the market experienced its largest decline ever, 32%. The five-year average then declined for twelve years, to a low of 18% in 2012, and has risen since, reaching 27% in 2022. Based on our 2023 forecasts at Semiconductor Intelligence, it will increase to 29% in 2023. The year 2023 will be another major downturn year for the semiconductor market: our Semiconductor Intelligence forecast is a 15% decline, and other forecasts call for declines as steep as 20%. Will this be the beginning of another drop in CapEx relative to the market? History suggests this is the likely outcome, since major semiconductor downturns tend to scare companies into slower CapEx spending.
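The five-year ratio discussed above can be computed from annual CapEx and market totals. The Python sketch below is illustrative only and is not Semiconductor Intelligence's method: the function name `capex_ratio_5yr_avg` is hypothetical, and it assumes the five-year figure is a simple trailing mean of the five annual CapEx-to-market ratios ending in each year. The article does not say whether its average is trailing or centered, or whether it instead divides five-year CapEx totals by five-year market totals.

```python
from typing import Sequence


def capex_ratio_5yr_avg(
    capex: Sequence[float], market: Sequence[float], window: int = 5
) -> list[float]:
    """Trailing average of annual CapEx-to-market ratios, in percent.

    Hypothetical helper. Assumption: each value is the simple mean of the
    annual ratios for the `window` years ending in that year (a trailing
    window); the source article does not state its exact convention.
    """
    if len(capex) != len(market):
        raise ValueError("capex and market must cover the same years")
    if window < 1:
        raise ValueError("window must be at least 1")

    # Annual CapEx as a percentage of that year's semiconductor market.
    ratios = [100.0 * c / m for c, m in zip(capex, market)]

    # One averaged value for each year that has a full window of history.
    return [
        sum(ratios[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(ratios))
    ]
```

Both input series should use the same units (for example, billions of dollars) and cover the same years. Under this trailing convention, a series running from 1980 to 2023 would produce its first averaged value for 1984.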

The factors behind CapEx decisions are complex. Because a wafer fab currently takes two to three years to build, companies must project their capacity needs several years into the future. Foundries, which account for about 30% of total CapEx, must plan their fabs based on estimates of their customers’ capacity needs several years out. A major new fab costs $10 billion or more, making it a risky proposition. Despite this complexity, past trends suggest the industry will likely see lower CapEx relative to the semiconductor market for the next several years.