I talked recently with Stelios Diamantidis (Distinguished Architect, Head of Strategy, Autonomous Design Solutions) about Synopsys’ announcement on the 100th customer tapeout using their DSO.ai solution. My concern with AI-related articles is to avoid the hype that surrounds AI in general and, conversely, the skepticism that hype provokes, which prompts some to dismiss all AI claims as snake oil. I was happy to hear Stelios laugh and agree whole-heartedly. We had a very grounded discussion on what DSO.ai can do today, what their reference customers see in the solution (based on what it can do today) and what he could tell me about the technology.

What DSO.ai does

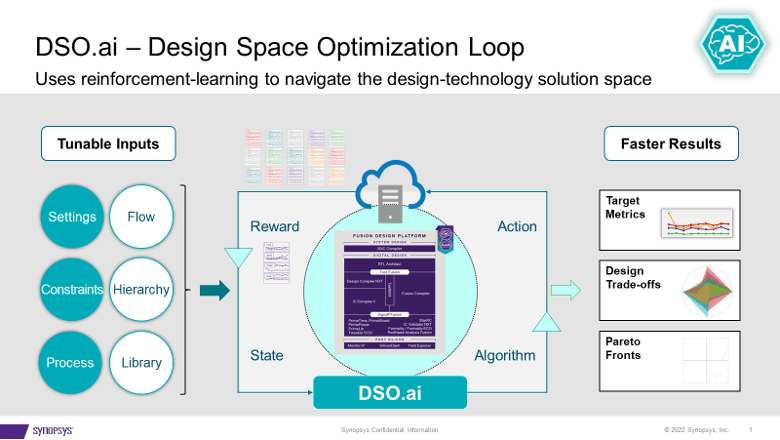

DSO.ai couples with Fusion Compiler and IC Compiler II, which, as Stelios was careful to emphasize, means this is a block-level optimization solution; full SoCs are not a target yet. This fits current design practices; Stelios said an important goal is to fit easily into existing flows. The purpose of the technology is to enable implementation engineers, often a single engineer, to improve their productivity while also exploring a larger design space for a better PPA than might have been discoverable otherwise.

Synopsys announced the first tapeout in the summer of 2021 and have now announced 100 tapeouts. That speaks well for the demand for and effectiveness of a solution like this. Stelios added that the value becomes even more obvious for applications which must instantiate a block many times. Think of a many-core server, a GPU, or a network switch. Optimize a block once, instantiate many times – that can add up to a significant PPA improvement.

I asked if customers doing this are all working at 7nm and below. Surprisingly, there is active use all the way up to 40nm. One interesting example is a flash controller, a design which is not very performance sensitive but can ship in volumes of tens to hundreds of millions of units. Reducing size even by 5% here can have a big impact on margins.
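To put a rough scale on that 5%, here is a back-of-the-envelope sketch. It assumes, to first order, that die cost scales with die area, ignoring yield and wafer-edge effects. The unit volume and per-die cost in the usage note are hypothetical values I picked for illustration; they do not come from Synopsys or from any customer.

```python
def saving_from_shrink(units, cost_per_die, area_reduction):
    """First-order saving, assuming die cost scales linearly with die area.

    Ignores yield, wafer-edge loss, packaging and test cost.
    """
    return units * cost_per_die * area_reduction


def extra_dies_per_wafer(area_reduction):
    """Fractional increase in dies per wafer for a given area reduction,
    under the same first-order assumption."""
    return 1.0 / (1.0 - area_reduction) - 1.0


if __name__ == "__main__":
    # Hypothetical inputs: 100 million units at $0.50 per die, 5% smaller die.
    print(saving_from_shrink(100_000_000, 0.50, 0.05))
    print(extra_dies_per_wafer(0.05))
```

Under those assumed inputs, the saving is units times die cost times 5%, and each wafer yields a little over 5% more dies. The real numbers depend on process, yield and pricing, none of which were discussed.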

What’s under the hood

DSO.ai is based on reinforcement learning, a hot topic these days but I promised no hype in this article. I asked Stelios to drill down a bit more though wasn’t surprised when he said he couldn’t reveal too much. What he could tell me was interesting enough. He made the point that in more general applications, one cycle through a training set (an epoch) assumes a fast (seconds to minutes) method to assess next possible steps, through gradient comparisons for example.

But serious block design can’t be optimized with quick estimates. Each trial must run through the full production flow, mapping to real manufacturing processes, and those flows can take hours to run. Part of the strategy for effective reinforcement learning given this constraint is parallelism. The rest is DSO.ai secret sauce. Certainly you can imagine that if that secret sauce can come up with effective refinements based on a given epoch, then parallelism will accelerate progress through the next epoch.
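To make the role of parallelism concrete, here is a minimal sketch of epoch-by-epoch batch search. It is emphatically not the DSO.ai algorithm, which Stelios did not disclose: I have swapped in a plain batch random search around the current best point in place of reinforcement learning. The `run_flow` function is a hypothetical stand-in for a multi-hour implementation run, and the two parameters and the toy cost function are invented for illustration.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def run_flow(params):
    """Hypothetical stand-in for one full implementation run.

    A real run takes hours; this toy cost is lower when the two
    made-up knobs are near (0.72, 0.40).
    """
    utilization, effort = params
    return (utilization - 0.72) ** 2 + (effort - 0.40) ** 2


def propose(best, spread, count, rng):
    """Sample `count` candidates around the current best, clipped to [0, 1]."""
    return [
        tuple(min(1.0, max(0.0, x + rng.gauss(0.0, spread))) for x in best)
        for _ in range(count)
    ]


def explore(epochs=5, workers=8, seed=0):
    """Batch random search: one parallel batch of trials per epoch."""
    rng = random.Random(seed)
    best = (0.5, 0.5)
    best_cost = run_flow(best)
    history = [best_cost]
    spread = 0.2
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for _ in range(epochs):
            candidates = propose(best, spread, workers, rng)
            # All trials in an epoch run concurrently, so the wall-clock
            # time per epoch is roughly one flow run, not `workers` runs.
            costs = list(pool.map(run_flow, candidates))
            cost, candidate = min(zip(costs, candidates))
            if cost < best_cost:
                best, best_cost = candidate, cost
            spread *= 0.7  # narrow the search as results accumulate
            history.append(best_cost)
    return best, best_cost, history


if __name__ == "__main__":
    best, best_cost, history = explore()
    print(best, best_cost)
```

The point of the sketch is only the shape of the loop: each epoch waits for a batch of expensive trials, so more servers mean more trials per epoch, and whatever decides the next batch (here a naive sampler) determines how much each epoch is worth.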

To that end, this capability really must run in a cloud to support parallelism. Private on-premises cloud is one option. Microsoft has announced that they are hosting DSO.ai on Azure, and ST report in the DSO.ai press release that they used this capability to optimize implementation of an Arm core. I imagine there could be some interesting debates around the pros and cons of running an optimization in a public cloud across say 1000 servers if the area reduction is worth it.

Customer feedback

Synopsys claims customers (including ST and SK Hynix in this announcement) are reporting 3x+ productivity increases, up to 25% lower total power and significant reduction in die size, all with reduced use of overall resources. Given what Stelios described, this sounds reasonable to me. The tool allows exploration of more points in the design state space within a given schedule than would be possible if that exploration were manual. As long as the search algorithm (the secret sauce) is effective, of course that would find a better optimum than a manual search.

In short, neither AI hype nor snake oil. DSO.ai suggests AI is entering the mainstream as a credible engineering extension to existing flows. You can learn more from the press release and from this blog.

