Coverage analysis is how you answer the question “have I tested enough?” You need some way to quantify the completeness of your testing, and coverage is how you do that. Right out of the gate this is a bit deceptive. To truly cover a design, your tests would need to cover every accessible state and state transition. The complexity of that task routinely invokes comparisons with the number of protons in the universe, so instead you use proxies for coverage: touching every line in the RTL, exercising every branch, every function, every assertion and so on. Each is a far cry from exhaustive coverage but, as heuristics, they work surprisingly well.
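
To get a feel for why exhaustive coverage is out of reach, here is a back-of-the-envelope sketch in Python. It assumes the commonly quoted rough estimate of 10^80 protons in the universe (my figure, not a measured one) and treats 2^n as an upper bound on the states of a design with n state bits. The function name is hypothetical.

```python
# Rough order-of-magnitude estimate; an assumption for illustration only.
PROTONS_IN_UNIVERSE = 10**80

def min_state_bits_to_exceed(limit: int) -> int:
    """Smallest n such that 2**n (an upper bound on states) exceeds limit."""
    n = 0
    while 2**n <= limit:
        n += 1
    return n

state_bits = min_state_bits_to_exceed(PROTONS_IN_UNIVERSE)
print(f"{state_bits} state bits are enough to exceed the estimate")
```

Under that assumption a few hundred flip-flops already suffice, and real designs have far more state than that, which is why proxies are the only practical option.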

Where does coverage-based analysis start?

Granting that testing cannot be exhaustive, it should at least be complete against a high-level specification, it should be reasonably uniform (no holes), and it should be efficient.

Specification coverage is determined by the testplan. Every requirement in the specification should map to a corresponding section in the plan, which will in turn generate multiple sub-requirements. All functional testing starts here. Coverage at this level depends on architecture and design expertise in defining the testplan. It can also often leverage prior testplans and learning from prior designs. Between expertise and reuse, product teams build confidence that the testplan adequately covers the specification. This process is somewhat subjective, though traceability analytics have added valuable quantification and auditability in connecting requirements to testplans.
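
The requirement-to-testplan mapping described above can be sketched as a simple traceability check. This is a minimal illustration, not how any particular traceability tool works; the requirement IDs, testplan item names and the function name are all hypothetical.

```python
from typing import Dict, List

def untraced_requirements(requirements: List[str],
                          testplan: Dict[str, List[str]]) -> List[str]:
    """Return requirements that no testplan item claims to cover."""
    traced = {req for reqs in testplan.values() for req in reqs}
    return [req for req in requirements if req not in traced]

# Hypothetical specification requirements.
requirements = ["REQ-001", "REQ-002", "REQ-003"]

# Hypothetical testplan: each item lists the requirements it traces to.
testplan = {
    "TP-1 reset behavior": ["REQ-001"],
    "TP-2 burst transfers": ["REQ-001", "REQ-003"],
}

missing = untraced_requirements(requirements, testplan)
print("Requirements with no testplan item:", missing)
```

A check like this only shows that a link exists. Whether the linked testplan item really exercises the requirement remains the subjective, expertise-driven part.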

Testing and coverage analysis then decompose hierarchically into implementation test development, which is where most of us start thinking about coverage analysis. Each line item in the testplan will map to one or more functional tests. These will be complemented by implementation-specific tests around completeness and sequencing for control signals, registers, test and debug infrastructure and so on.

Refining implementation coverage

Here is where uniformity and efficiency become important. When you start writing tests, you’re writing them to cover the testplan and the known big-ticket implementation tests. You’re not yet thinking much about implementation coverage. At some point you start to pay attention to coverage and realize that there are big holes: chunks of code not covered at all by your testing. Sure, exhaustive testing isn’t possible, but that’s no excuse for failing to test code you can immediately see in a coverage analysis.

Then you add new tests, or repurpose old tests from a prior design. You add constrained random testing. All of this is to boost coverage, however you choose to measure it (typically across multiple metrics such as function, line and branch). The goal here is to drive reasonably uniform coverage across the design to an achievable level, with no unexplained gaps.
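
As a rough sketch of what "reasonably uniform, with no unexplained gaps" could look like in practice, the Python below merges per-test coverage and flags blocks that fall below a chosen threshold. The block names, test names, data layout and threshold are assumptions for illustration; real coverage databases are much richer than sets of (block, line) pairs.

```python
from collections import defaultdict

def merge_coverage(per_test):
    """Union the coverage items hit by every test in the regression."""
    merged = set()
    for hit in per_test.values():
        merged |= hit
    return merged

def coverage_by_block(all_items, hit_items):
    """Fraction of coverable items hit in each block."""
    total = defaultdict(int)
    hit = defaultdict(int)
    for block, _ in all_items:
        total[block] += 1
    for block, _ in hit_items & all_items:
        hit[block] += 1
    return {block: hit[block] / total[block] for block in total}

def coverage_holes(all_items, per_test, threshold):
    """Blocks whose merged coverage is below the threshold, sorted by name."""
    by_block = coverage_by_block(all_items, merge_coverage(per_test))
    return sorted(b for b, frac in by_block.items() if frac < threshold)

# Hypothetical coverable items: (block, line number).
all_items = {("fifo", 1), ("fifo", 2),
             ("arbiter", 1), ("arbiter", 2), ("arbiter", 3), ("arbiter", 4)}

# Hypothetical per-test hits.
per_test = {
    "smoke": {("fifo", 1), ("fifo", 2)},
    "burst": {("fifo", 2), ("arbiter", 1)},
}

print(coverage_holes(all_items, per_test, threshold=0.5))
```

The same structure works for branch or function coverage by changing what an item represents. Each flagged block then needs either a new test or an explanation.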

Efficiency is also important. Fairly quickly (I hope) you get to a point where adding more tests doesn’t seem to improve coverage much. Certainly you want to find the few special tests you can add that will further advance your coverage, but you’d also like to know which tests you can drop, because they’re costing you expensive regression runtime without contributing any improvement in coverage.
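
One simple way to identify droppable tests is a greedy ranking: repeatedly keep the test that adds the most not-yet-covered items, and stop when no remaining test adds anything. The sketch below is a generic heuristic under my own assumptions (hypothetical test names, coverage items as integers), not a description of any tool's algorithm. It ignores runtime, and a greedy pass does not guarantee the smallest possible set.

```python
def rank_tests(per_test):
    """Greedy ranking: return (kept, dropped) test names.

    kept is ordered by incremental coverage contribution;
    dropped tests add nothing beyond what kept tests already cover.
    """
    remaining = dict(per_test)
    covered = set()
    kept = []
    while remaining:
        # Iterate in sorted order so ties resolve deterministically.
        best = max(sorted(remaining), key=lambda t: len(remaining[t] - covered))
        gain = remaining[best] - covered
        if not gain:
            break  # nothing left contributes new coverage
        kept.append(best)
        covered |= gain
        del remaining[best]
    return kept, sorted(remaining)

# Hypothetical tests and the coverage items each one hits.
per_test_hits = {
    "t_smoke": {1, 2, 3},
    "t_burst": {3, 4},
    "t_dup": {1, 2},
    "t_corner": {5},
}

kept, dropped = rank_tests(per_test_hits)
print("keep:", kept)
print("drop candidates:", dropped)
```

A test flagged here is only a candidate for removal. It may still be worth keeping if it checks behavior that the chosen coverage metric does not capture.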

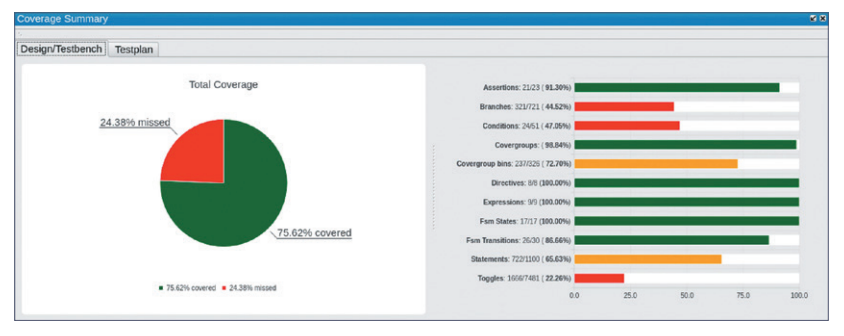

Questa Visualizer adds coverage analysis

It should be apparent that coverage analysis is the guiding metric for completeness in verification. Questa recently released coverage visualization in their Visualizer product to guide you in coverage analysis and optimization, during the course of verification and debug. Check it out.
