Tony Casassa has served as the General Manager of METTLER TOLEDO THORNTON since 2015, after joining the company in 2007 to lead the US Process Analytics business. Prior to spending the last 16 years with METTLER TOLEDO, Tony held various business leadership positions over two decades with Rohm and Haas Chemical. A common thread throughout his 35+ year career has been bringing innovations to market that deliver enhanced process control and automation for the Semiconductor and Life Science industries.

Tell us about Mettler Toledo Thornton.

METTLER TOLEDO THORNTON takes important or critical pure water process measurements that are currently made offline and brings them online as real-time, continuous measurements. Measuring in situ on a real-time, continuous basis eliminates sampling errors and contamination risks and provides increased process transparency.

Our focus is on segments that use pure water for critical process stages, where water quality has high value and process impact. Our customers benefit from the deep segment competency and process expertise that our experts have developed over decades in the industry.

What application areas are your strongest?

Mettler Toledo Thornton is the global leader in measurement and control for the production and monitoring of Pure and Ultrapure water (UPW) for the Semiconductor (Microelectronics) industry. We provide a full portfolio of the process analytics required for real-time monitoring of these critical waters in the UPW loop, distribution system or reclaim.

What problems are you solving in semiconductor?

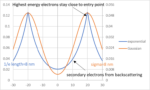

The semiconductor industry is constantly developing new ICs that are faster and more efficient, with increased processing capability. To accomplish these advances, the architecture and line widths of the ICs have become narrower and narrower, now approaching 2 to 3 nanometers. To produce these state-of-the-art ICs, the SEMI Fab requires vast quantities of Ultrapure water that is virtually free of any ionic or organic impurities. Mettler Toledo Thornton R&D has developed, and we have introduced to the market, the UPW UniCond® resistivity sensor, which exceeds the measurement accuracy of previous and current resistivity sensors on the market. This sensor exceeds the stringent recommended measurement limits published by the SEMI Liquid Chemicals committee. Measurement and control of ionic impurities in Ultrapure water is critical, but so is the measurement and control of organics. Our online Low PPB 6000TOCi gives the semiconductor industry a TOC instrument that not only measures accurately in the sub-ppb TOC range but also uses 80% less water than other instruments. Dissolved oxygen and silica are also critical measurements for the SEMI Fab, and our analytics provide industry-leading accuracy with longer service life and reduced maintenance.

Another key priority for the industry is sustainability and the need to reclaim water and reduce overall water consumption. To minimize adverse impact on wafer yield, it is imperative to fully understand the water quality and the risk of contaminants, so that informed decisions can be made on where and how the various reclaimed water streams can be recycled or reused. Mettler Toledo Thornton’s continuous, real-time measurements enable fast, confident decisions in the water reclaim area.

This full range of process analytics helps the SEMI industry monitor and control the solvent most critical to wafer quality, increasing yield and reducing defects while meeting sustainability goals.

How are your customers using your products?

For the semiconductor facility, the production of Ultrapure water is the first step in the SEMI water cycle. UPW is then the universal solvent used in the Tools for cleaning the wafers after photolithography, epitaxy, the RCA process and other processing steps. Our instruments are critical in monitoring the Tools process and assuring that the wafers are cleaned. The next step in the water cycle for the UPW is Reclaim/Reuse/Recycle, where the SEMI Fab uses Mettler Toledo Thornton process analytics to decide whether the UPW can be brought back to the UPW purification process to reduce water discharge and improve industry sustainability.

What does the competitive landscape look like and how do you differentiate?

The current super cycle in the semiconductor industry has resulted in numerous companies pursuing opportunities for their products in this space. Some are historical suppliers, while others are new competitors in the market. Mettler Toledo Thornton has been involved in the semiconductor industry for over 40 years and has been active in working with the industry to establish the accuracy and technical specifications for UPW. The research conducted at Mettler Toledo Thornton by Dr. Thornton, Dr. Light and Dr. Morash established the actual resistivity value of Ultrapure water, which is the current standard for the industry. We have partnered with all the leading global semiconductor companies to develop the most accurate online analytical instruments. Our focus on continuous, real-time measurements provides our customers with the highest level of process transparency relative to batch analyzers or offline measurements.

A key factor in establishing Mettler Toledo Thornton as a global leader in the semiconductor industry has been our active participation and membership in the SEMI committees that establish the recommended limits for the SEMI industry. Our global presence and service organization provide the semiconductor facility with the ability to standardize on an analytical parameter and employ it across all its facilities and locations.

What new features/technology are you working on?

Our focus is on responding to the needs of our customers as they strive to improve yield, minimize water consumption, increase throughput and reduce costs. We have recently brought rapid, online microbial detection to market, replacing the need for slow, costly and error-prone offline test methods. Our new Low PPB TOC continuous online sensor reduces water consumption by 80% versus other companies’ batch instruments. Our latest innovation is the UPW UniCond sensor, which delivers the highest accuracy on the market today. We continue to develop new technologies that enable the industry to achieve sustainability goals without sacrificing wafer yield.

How do customers normally engage with your company?

Mettler Toledo Thornton has a global presence, with our own sales, marketing, and service organizations in all the countries with semiconductor facilities and Tool manufacturers. The local sales and service teams have been trained on the complete water cycle for semiconductor manufacturing, which gives them the expertise to engage with semiconductor engineers and provide technical solutions for UPW monitoring and control. We conduct numerous global webinars and in-person seminars that give the semiconductor industry the opportunity to learn about the most recent advances in analytical measurements. Because we participate in the technical committees, these seminars also give local semiconductor facilities the opportunity to be updated on the most recent UPW recommended limits. With our industry specialists, we produce and publish numerous White Papers and Technical documents that give the industry further insight into the latest advancements.
