There are two main approaches to building a substructure on which to do software development and architectural analysis before a chip is ready: virtual platforms and FPGA prototyping.

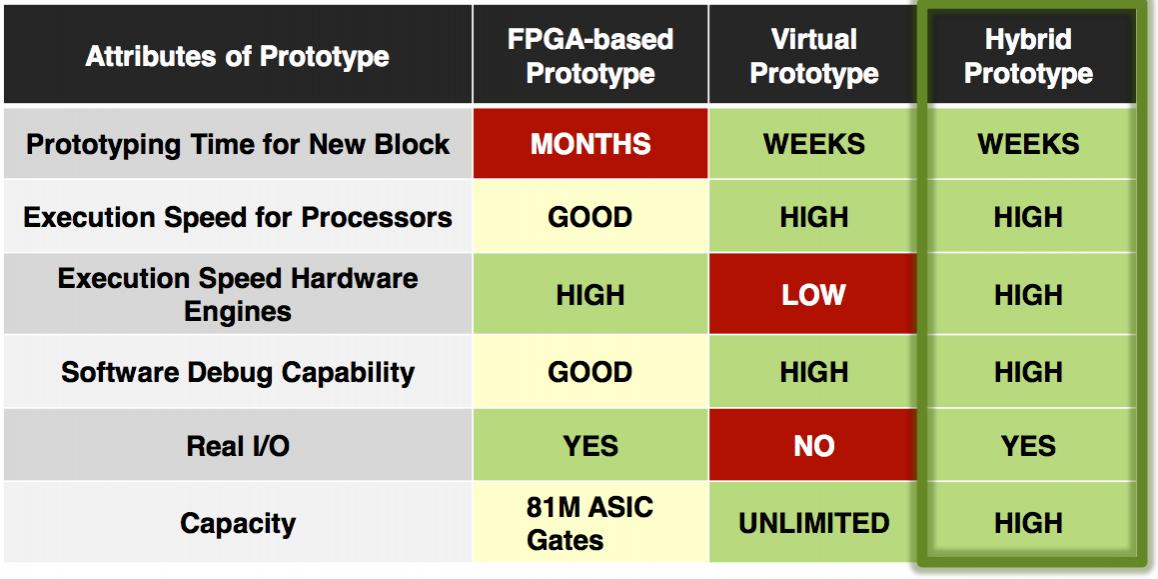

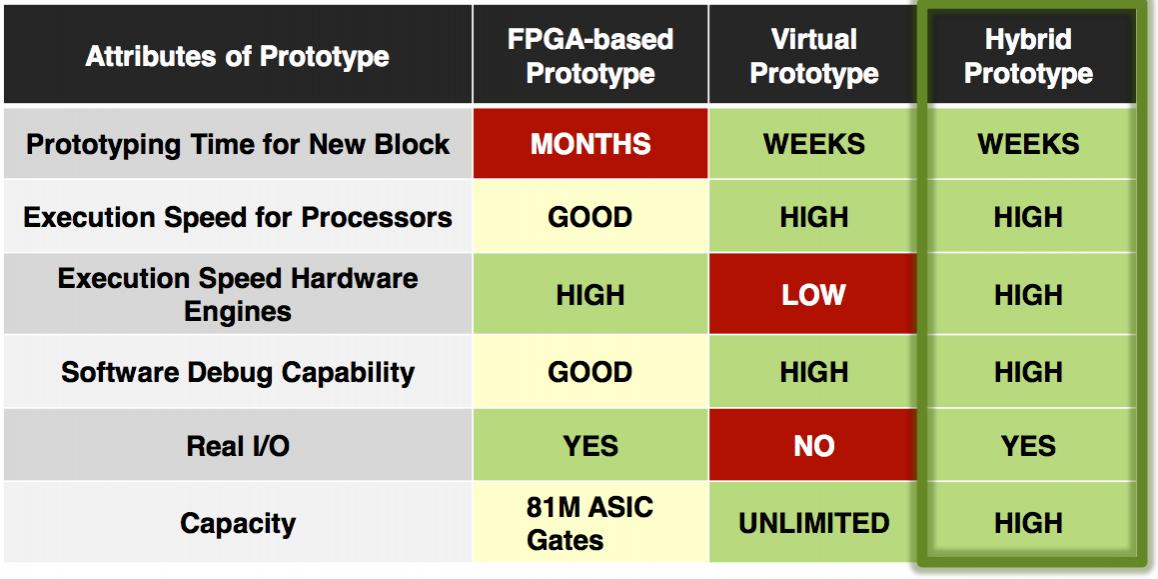

Virtual platforms are fairly quick to produce and can be created long before the RTL for the various blocks is available. They run fast and have very good debugging facilities. However, they are not at their best when it comes to incorporating real hardware (sometimes called hardware-in-the-loop, or HIL). Another weakness shows up when existing IP is being incorporated: RTL is available for it by definition, so creating and validating a virtual platform model (usually a SystemC TLM model) is unnecessary overhead, especially when a large number of IP blocks are involved.

FPGA prototyping systems have the opposite set of problems. They are great when RTL exists in, or close to, its final form, but they are hopeless at modeling blocks for which there is no RTL. Their debugging facilities are more limited, since it is not possible to monitor every signal on the FPGA and you never know which ones you need until a bug occurs.

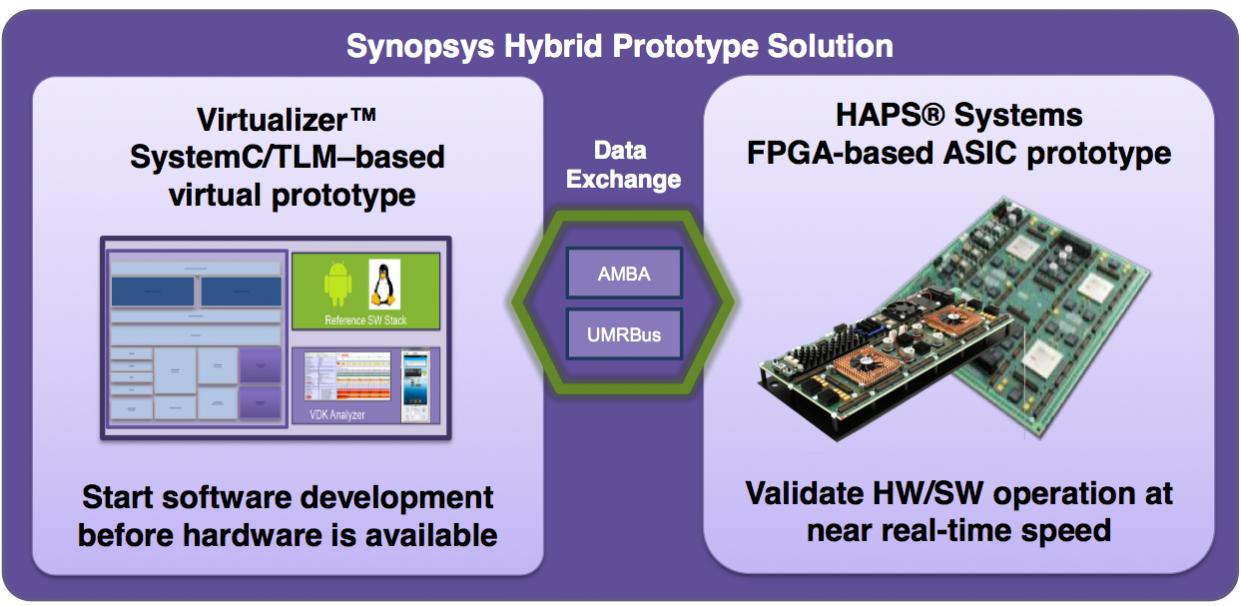

Synopsys have products in both these spaces. Virtualizer is a SystemC/TLM-based virtual prototyping product. Synopsys acquired Virtio, VaST and CoWare’s virtual platform technologies, so I’m sure these technologies are lurking somewhere under the hood. And according to VDC, they are the #1 supplier of virtual prototyping tools.

They also have an FPGA-based ASIC prototyping system called HAPS, which is also the market leader.

Projects often use both of these. Early in the design cycle they use a virtual platform, since there isn’t any RTL. Later, when the RTL is nearly complete, they switch to the FPGA approach so that they can be sure the software is running on the “real” hardware.

Now Synopsys have combined these two technologies into one. That is, part of the design can be a virtual prototype and part of the design can be represented as an FPGA-based prototype. The two parts communicate through Synopsys’s UMRBus, which has been around for a couple of years. This brings the best of both worlds and removes the biggest weaknesses of each approach. And, as a design progresses, it is no longer necessary to start in the virtual prototype world and then make a big switch to FPGA. It can start as a mixture, and the mixture can simply change over time depending on what is wanted. There are libraries in both worlds: processor models, TLM models, RTL of IP, daughter boards. All of these can be used without needing to be translated into the other world.

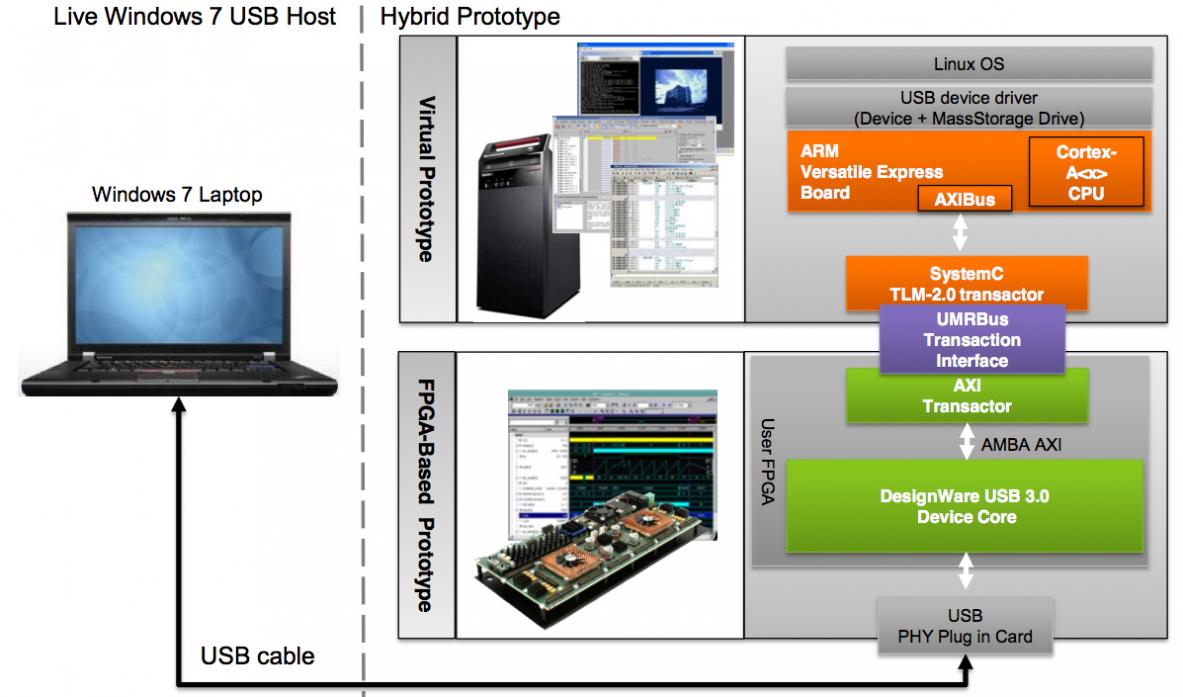

Here’s an example: a new USB PHY and a device driver. In between sit a DesignWare USB core, an ARM fast model with some peripherals (as a virtual prototype) and Linux. This can all be put together and run, communicating with the real world (in this case a Windows PC).
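To make the partitioning idea a little more concrete, here is a minimal Python sketch of a hybrid prototype that routes register accesses to whichever side currently hosts a block. Everything in it is hypothetical: the `Block` and `HybridPrototype` classes, the addresses and the `link_transfers` counter are illustrative only and are not the Synopsys or UMRBus API. It also assumes, purely for illustration, that the USB core starts out on the FPGA side.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Block:
    """One memory-mapped block of the design (hypothetical model)."""
    name: str
    base: int   # first address of the block's register window
    size: int   # window size in bytes
    side: str   # "virtual" (TLM model) or "fpga" (RTL on the prototype)
    regs: Dict[int, int] = field(default_factory=dict)

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


class HybridPrototype:
    """Routes register accesses to whichever side currently hosts a block."""

    def __init__(self, blocks: List[Block]) -> None:
        self.blocks = blocks
        self.link_transfers = 0  # accesses that had to cross to the FPGA side

    def _access(self, addr: int) -> Block:
        for block in self.blocks:
            if block.contains(addr):
                if block.side == "fpga":
                    # Stand-in for a transfer over the physical link.
                    self.link_transfers += 1
                return block
        raise ValueError(f"no block mapped at address {addr:#x}")

    def write(self, addr: int, value: int) -> None:
        block = self._access(addr)
        block.regs[addr - block.base] = value

    def read(self, addr: int) -> int:
        block = self._access(addr)
        return block.regs.get(addr - block.base, 0)

    def move(self, name: str, side: str) -> None:
        """Re-home a block; the software-visible address map is unchanged."""
        if side not in ("virtual", "fpga"):
            raise ValueError(f"unknown side: {side}")
        for block in self.blocks:
            if block.name == name:
                block.side = side
                return
        raise KeyError(name)


# Assumed split: peripherals are virtual, the USB core is RTL on the FPGA.
proto = HybridPrototype([
    Block("uart", 0x1000_0000, 0x1000, "virtual"),
    Block("usb_core", 0x2000_0000, 0x1000, "fpga"),
])
proto.write(0x2000_0004, 0xA5)             # crosses the link
value = proto.read(0x2000_0004)            # crosses the link again
proto.move("usb_core", "virtual")          # the mixture changes over time
value_after_move = proto.read(0x2000_0004) # no link transfer this time
```

The point of the sketch is that the software sees the same address map whichever side a block lives on, so changing the mixture is a configuration change rather than a port. Carrying register state across a move, as done here, is an idealization; a real flow would re-initialize the block.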
