In the race to get people out of the driver’s seat, the developers of autonomous vehicles (AV) and advanced driver assistance systems (ADAS) have gone off road and into the virtual world.

Using simulation to design, train and validate the brains behind self-driving cars — the neural networks of sensors and systems that perceive the world then react to split-second changes in the environment — is an essential tool for building the AV/ADAS platform.

Without simulation, developers are limited to naturally occurring events on public roads as their proving grounds. That means they’d spend far more time and money creating specific scenarios to test that sensors recognize and algorithms respond appropriately and safely to routine and hazardous conditions: red lights, pets and wildlife, oncoming traffic, or a child darting into the street.

Real-world driving and simulation work together to advance ADAS/AV technology. Real-world driving data is an important measure of road-worthiness and system intelligence, and it provides additional inputs that developers use to improve their algorithms. Simulation complements on-road testing with its ability to run orders of magnitude more scenarios, including challenging events that are rare in real-world driving but essential to get right.

CNET writer Kyle Hyatt describes how simulation technology gives Alphabet’s Waymo engineers the capacity to “simulate a century’s worth of on-road testing virtually in just a single 24-hour period.” Another way of looking at it: It took Waymo 10 years to log 20 million actual driving miles, and a single year to simulate 2.5 billion.

As valuable as simulation is, sometimes it needs to pick up the pace, too.

That’s where the real-time radar (RTR) simulation engine takes the wheel. Ultra-fast and physics-based RTR can accomplish in minutes what used to take days.

Images Made Faster than Ever

Along with LIDAR, cameras and ultrasonic sensors, the typical AV also has multiple radar sensors for short-, medium-, and long-range sensing tasks. Long-range radars monitor traffic down the road for adaptive cruise control and collision avoidance. Shorter-range radars handle blind spots, cross traffic, and collision avoidance.

Traditionally, central processing units (CPUs) were used for automotive radar simulations. CPU architecture is fast, but not nearly fast enough to simulate complex radar systems at real-time frame rates.

Radars sample the world at up to 30 frames per second (fps). Automotive radars have multiple transmitters that broadcast hundreds or thousands of radar chirps and multiple receiving antennas that measure those signals at hundreds of frequencies for a single frame of data. Multiple-input multiple-output (MIMO) radars measure millions of data points per frame – hundreds of channels, chirps per channel, and frequencies per chirp. That’s all for one radar, and autonomous vehicles have multiple radars.
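To put "millions of data points per frame" in perspective, the short Python sketch below multiplies out the dimensions of a MIMO radar data cube (virtual channels × chirps per channel × samples per chirp). The transmitter, receiver, chirp, and sample counts are hypothetical values chosen for illustration, not the specification of any particular sensor, and the 8-byte complex sample size is also an assumption.

```python
def mimo_frame_points(n_tx: int, n_rx: int, chirps_per_channel: int, samples_per_chirp: int) -> int:
    """Data points in one frame of a MIMO radar cube: virtual channels x chirps x samples."""
    virtual_channels = n_tx * n_rx  # each TX/RX pair acts as one virtual channel
    return virtual_channels * chirps_per_channel * samples_per_chirp

# Hypothetical radar: parameters chosen for illustration, not taken from a real sensor.
points = mimo_frame_points(n_tx=12, n_rx=16, chirps_per_channel=256, samples_per_chirp=512)
fps = 30               # real-time frame rate quoted in the text
bytes_per_point = 8    # assumed complex sample stored as two float32 values

print(f"{points:,} points per frame")                        # 25,165,824 points per frame
print(f"{points * fps:,} points per second at {fps} fps")    # 754,974,720 points per second at 30 fps
print(f"{points * bytes_per_point / 1e6:.0f} MB per frame")  # 201 MB per frame
```

With 192 virtual channels, this hypothetical sensor already lands in the "hundreds of channels" range, and a vehicle carries several such radars.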

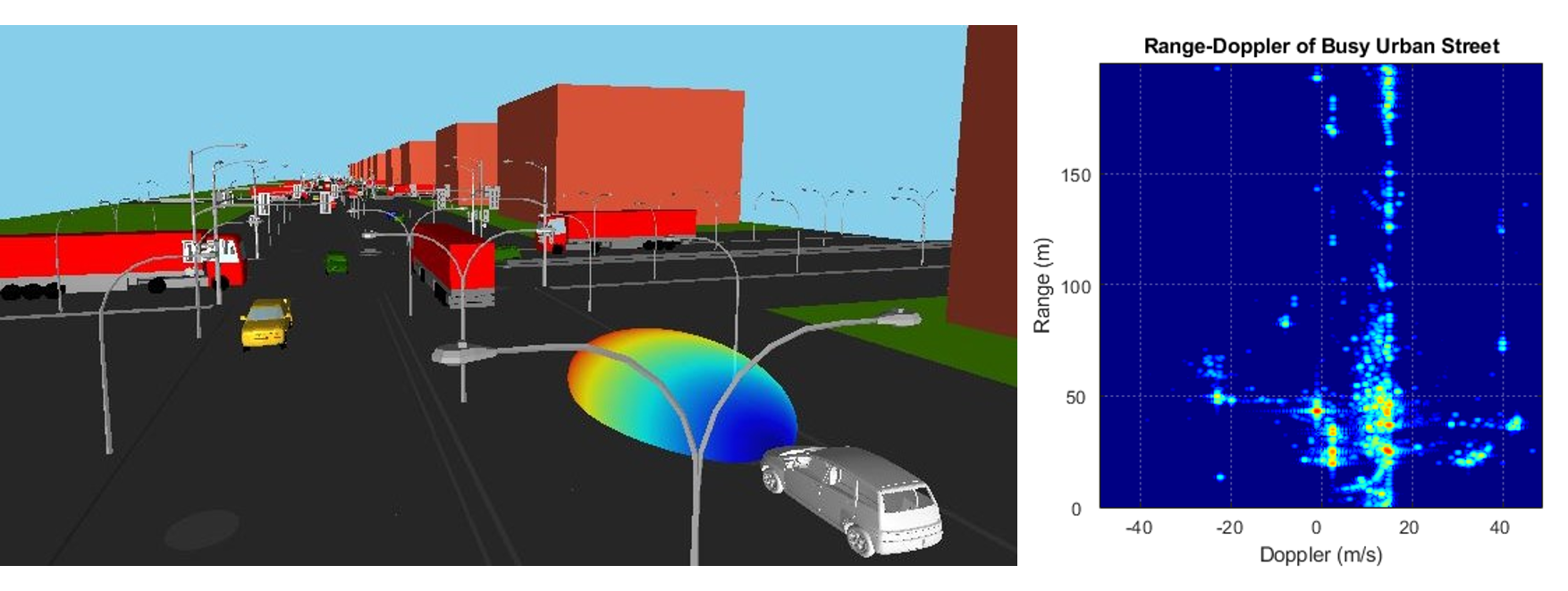

Caption: Range-Doppler image of a busy street, showing how the radar mounted on the white car detects the distance (range) and relative velocity (Doppler) of surrounding objects while the car is moving.

CPU-based simulation requires up to a minute to simulate one frame of data from one radar, even with new algorithms invented by Ansys. A revolutionary leap forward is needed.

Ansys’ Real-Time Radar (RTR) overcomes these limitations with graphics processing units (GPUs). By combining Ansys simulation with NVIDIA GPU acceleration, RTR not only generates data from single-channel, multi-channel, and MIMO radars but also generates images faster than real time. Scenarios that took days, months, or years to simulate before RTR now run in seconds or minutes. Single-channel radar simulations run more than 5000x faster, and MIMO radar simulations, which would have taken so long that we never attempted them, also run thousands of times faster.

Real-Time Simulation Enables New Applications

Ansys engineers are using RTR to create amazing new capabilities, and the difference is astonishing.

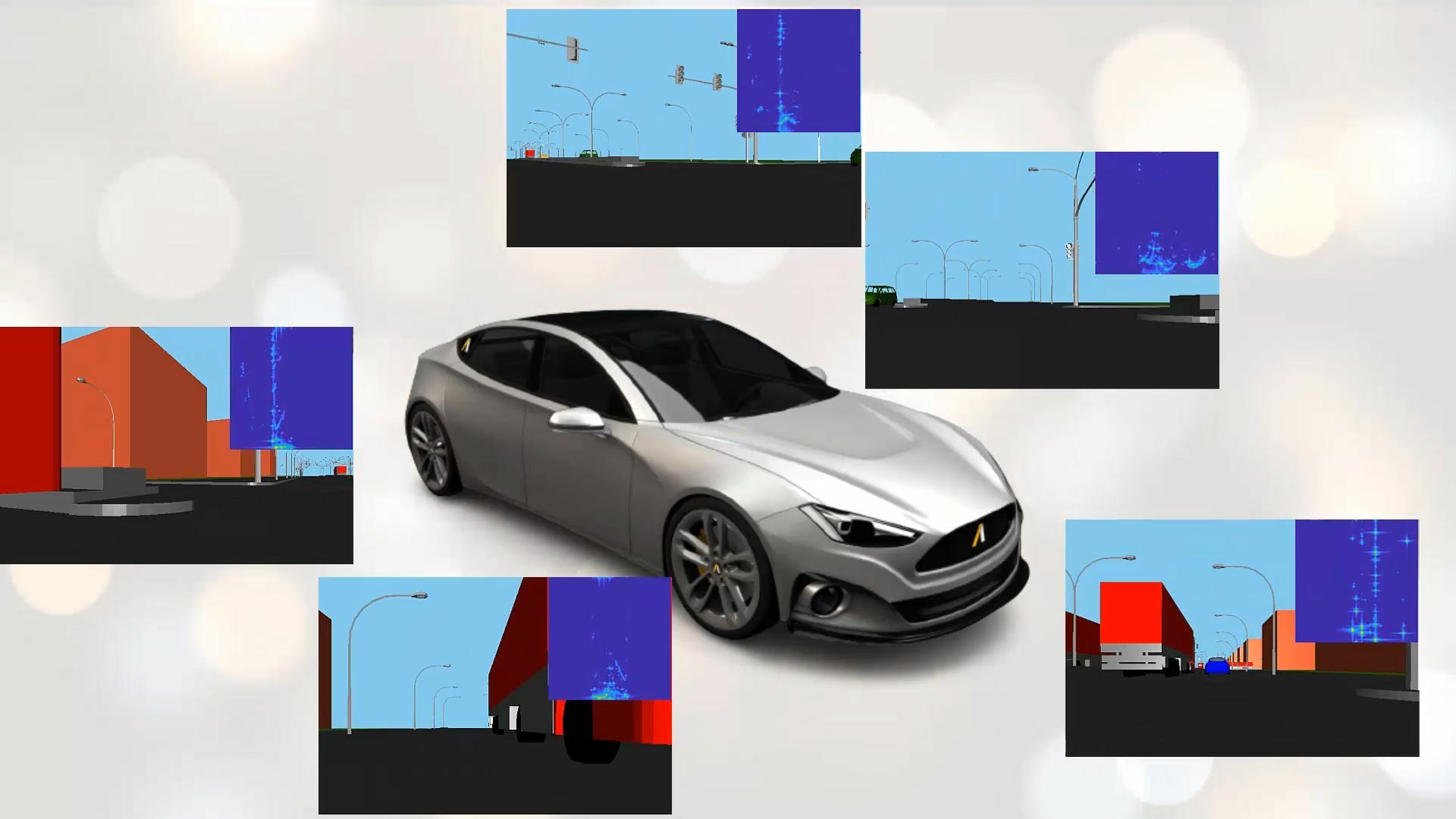

For example, Ansys RTR took just 11 seconds on an NVIDIA RTX A6000 GPU to simulate a 20-second scenario in which a car with five radars drives down a busy 1-kilometer (0.6-mile) street, at a combined rate of more than 250 fps across the five radars. Because safe urban driving means contending with all kinds of hazards and distractions, we packed our scenario with 70 vehicles, 14 buildings, over 300 streetlights and a nightmarish 42 traffic signals.

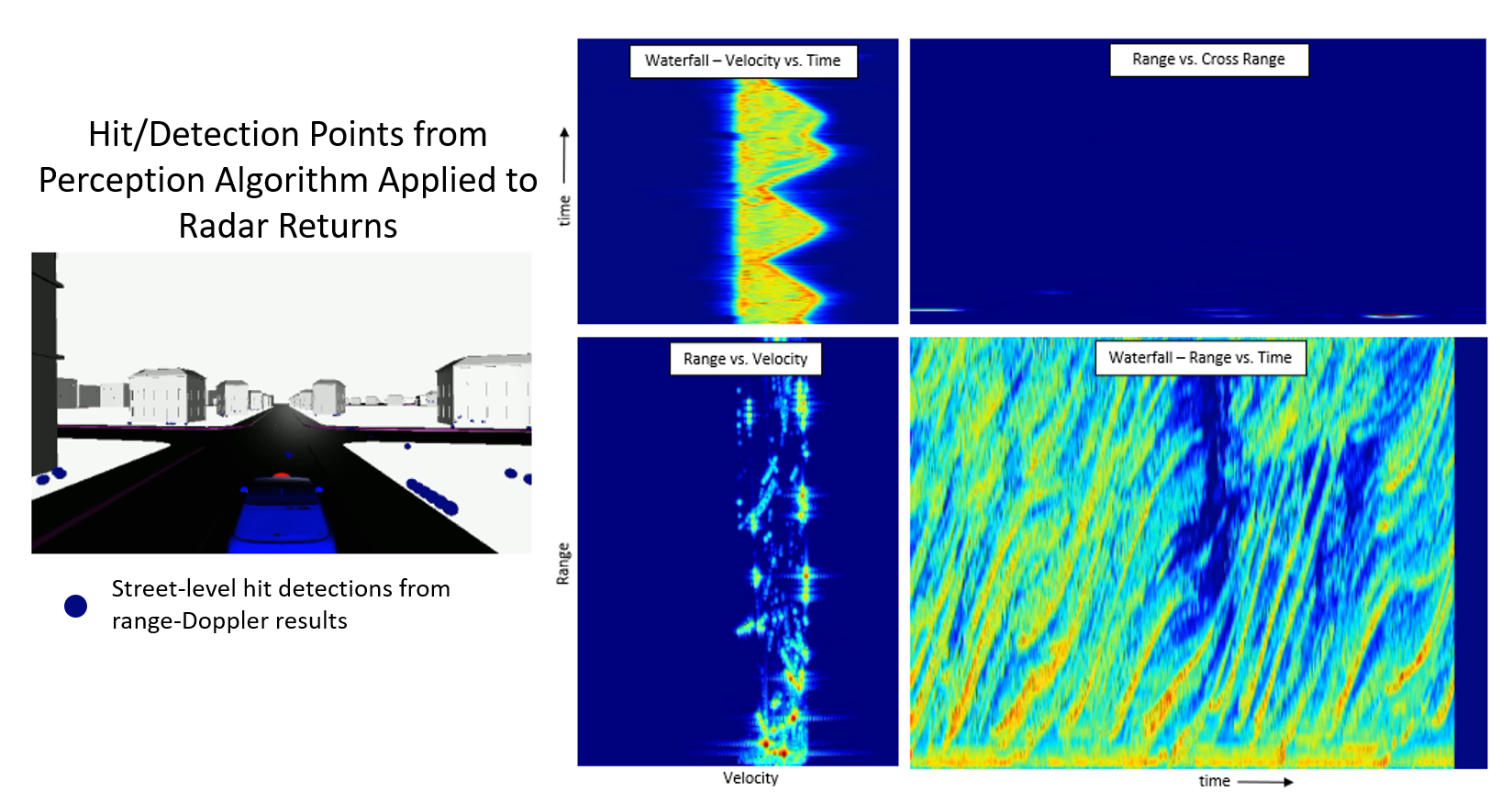

Ansys RTR simulates a vehicle with five radars at over 250 fps in a busy urban environment.

Before RTR, that same simulation would have taken more than 25 hours. Even that was a vast improvement: before Ansys developed new algorithms for Doppler processing, the simulation would have taken more than four years. RTR cuts simulation time to 11 seconds and sustains 57 fps per radar across all five radars, far faster than the 30 fps real-time benchmark. That is an 8000x speedup over 25 hours and a speedup of more than 11 million times over four years.
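The ratios for this run follow directly from the quoted times. The Python sketch below recomputes the 25-hour comparison and the real-time margins using only figures stated in the article (a 20-second scenario, more than 25 hours before RTR, 11 seconds with RTR, 57 fps against a 30 fps real-time rate); it says nothing about how RTR achieves them.

```python
SCENARIO_S = 20.0               # length of the simulated drive
BASELINE_WALL_S = 25 * 3600.0   # "more than 25 hours" before RTR, so this is a lower bound
RTR_WALL_S = 11.0               # RTR wall-clock time for the same scenario
RTR_FPS = 57.0                  # frame rate reported for the five-radar run
REAL_TIME_FPS = 30.0            # real-time radar frame rate

speedup = BASELINE_WALL_S / RTR_WALL_S        # how many times faster RTR finishes
real_time_factor = SCENARIO_S / RTR_WALL_S    # > 1 means faster than the scenario plays out
fps_margin = RTR_FPS / REAL_TIME_FPS          # headroom over the real-time frame rate

print(f"speedup vs. 25 h: {speedup:,.0f}x")         # speedup vs. 25 h: 8,182x
print(f"real-time factor: {real_time_factor:.2f}")  # real-time factor: 1.82
print(f"frame-rate margin: {fps_margin:.2f}x")      # frame-rate margin: 1.90x
```

Because the 25-hour baseline is a lower bound, the true speedup is at least the ~8,000x figure quoted above.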

“With real-time radar, high-fidelity simulation is no longer a barrier in the development of ADAS data pipelines. Radar sensor data can be generated at a rate never thought possible for physics-based simulations.”

- Arien Sligar, senior principal application engineer, Ansys

RTR’s dramatic performance improvement has already paid off as an enabling technology for downstream analysis. Labeling images – identifying the locations of objects such as people, cars, and buses in a radar image – is time-consuming when done by hand. RTR users produced more than 160,000 labeled images overnight, compared with 9,000 images in five days using slower simulation, or a cost of several dollars per image when labeling by hand.
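Simulation makes labeling cheap because the simulator already knows where every object is. The article does not describe RTR's labeling pipeline, so the following Python is a hypothetical sketch: it converts an object's ground-truth range and radial velocity into range and Doppler bin indices using the standard FMCW resolution formulas (range resolution c/2B, velocity resolution λ/(2·N·T_c) for N chirps of period T_c). The waveform parameters, class names, and velocity sign convention are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

C = 299_792_458.0  # speed of light, m/s

@dataclass
class Waveform:
    fc_hz: float           # carrier frequency
    bandwidth_hz: float    # swept bandwidth per chirp
    chirp_period_s: float  # time between chirp starts
    n_chirps: int          # number of Doppler bins in the image
    n_range_bins: int

    @property
    def range_res_m(self) -> float:
        return C / (2 * self.bandwidth_hz)

    @property
    def velocity_res_mps(self) -> float:
        wavelength = C / self.fc_hz
        return wavelength / (2 * self.n_chirps * self.chirp_period_s)

@dataclass
class GroundTruth:
    label: str
    range_m: float
    radial_velocity_mps: float  # assumed convention: positive maps above the zero-Doppler bin

def label_bins(obj: GroundTruth, wf: Waveform) -> Optional[Tuple[str, int, int]]:
    """Map a ground-truth object to (label, range_bin, doppler_bin), or None if off-image."""
    range_bin = round(obj.range_m / wf.range_res_m)
    # Zero velocity sits in the center Doppler bin of an fftshift-ed image.
    doppler_bin = round(obj.radial_velocity_mps / wf.velocity_res_mps) + wf.n_chirps // 2
    if 0 <= range_bin < wf.n_range_bins and 0 <= doppler_bin < wf.n_chirps:
        return obj.label, range_bin, doppler_bin
    return None

# Hypothetical 77 GHz waveform and scene.
wf = Waveform(fc_hz=77e9, bandwidth_hz=300e6, chirp_period_s=50e-6, n_chirps=128, n_range_bins=256)
scene = [GroundTruth("car", 20.0, -5.0), GroundTruth("pedestrian", 8.0, 1.2), GroundTruth("bus", 200.0, 0.0)]
labels = [label_bins(obj, wf) for obj in scene]
print(labels)  # [('car', 40, 48), ('pedestrian', 16, 68), None]
```

The bus returns None because 200 m falls beyond the 256 range bins of this hypothetical waveform; a real labeler would also need to handle extended objects that span many bins.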

Ansys engineers also connected RTR to a machine learning algorithm to teach a car to drive through reinforcement learning. Ansys principal application engineer Dr. Kmeid Saad conducted a week-long webinar, Reinforcement Learning with Physics-based Real Time Radar for Longitudinal Vehicle Control, that trained a throttle-control algorithm using GPUs on Microsoft’s Azure cloud. RTR simulated radar returns at a faster-than-real-time 50-60 fps on one GPU while three other GPUs ran the driving simulator and machine learning.
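The webinar's learning algorithm is not detailed here, so the sketch below swaps in plain tabular Q-learning to show the general shape of such a loop: a radar-style observation (range and range rate to the lead car) becomes a state, the agent picks a throttle command, and the reward favors holding a target gap. The kinematics, discretization, reward, and every parameter value are hypothetical. In a workflow like the one described above, range and range rate would be extracted from simulated radar returns rather than read directly from a toy model.

```python
import random
from collections import defaultdict

ACTIONS = (-1.0, 0.0, 1.0)  # hypothetical throttle commands: brake, coast, accelerate
DESIRED_GAP_M = 20.0

def discretize(range_m, range_rate_mps):
    """Bucket a radar-style (range, range rate) observation into a coarse state."""
    gap_bucket = min(int(range_m // 5), 10)                  # 5 m buckets, capped at 50 m+
    rate_bucket = max(-3, min(3, int(range_rate_mps // 2)))  # 2 m/s buckets, clamped
    return gap_bucket, rate_bucket

def reward(range_m):
    if range_m <= 0.0:
        return -100.0                                         # collision
    return -abs(range_m - DESIRED_GAP_M) / DESIRED_GAP_M      # penalize deviation from target gap

def choose_action(q, state, epsilon, rng):
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)                            # explore
    return max(ACTIONS, key=lambda a: q[(state, a)])          # exploit

def q_update(q, state, action, r, next_state, done=False, alpha=0.1, gamma=0.95):
    """One tabular Q-learning step: Q += alpha * (r + gamma * max Q(s', .) - Q)."""
    best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
    q[(state, action)] += alpha * (r + gamma * best_next - q[(state, action)])
    return q[(state, action)]

def run_episode(q, rng, epsilon=0.1, dt=0.1, steps=200):
    lead_v, ego_v, gap = 20.0, 25.0, 40.0       # toy follower starts faster than the lead car
    state = discretize(gap, lead_v - ego_v)
    total = 0.0
    for _ in range(steps):
        action = choose_action(q, state, epsilon, rng)
        ego_v = max(0.0, ego_v + 3.0 * action * dt)  # throttle maps to up to +/-3 m/s^2
        gap += (lead_v - ego_v) * dt                 # range rate = lead speed - ego speed
        next_state = discretize(gap, lead_v - ego_v)
        r = reward(gap)
        done = gap <= 0.0
        q_update(q, state, action, r, next_state, done=done)
        total += r
        state = next_state
        if done:
            break
    return total

q_table = defaultdict(float)
rng = random.Random(0)  # fixed seed so runs are repeatable
returns = [run_episode(q_table, rng) for _ in range(50)]
```

Tabular Q-learning is only a stand-in here; practical longitudinal-control agents typically use function approximation instead of a lookup table.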

Physics-Based Simulation Built on Established Methodology

The RTR simulation engine is based on the well-established shooting-and-bouncing-rays (SBR) technique for large, high-frequency scenes. RTR generates range-Doppler images that display the distance and relative velocity of objects in driving scenarios under various traffic conditions. SBR models radar reflection off objects, multi-bounce propagation through the scene, material properties, transmission through windows, and radar antenna patterns. RTR incorporates all of these real-world interactions to produce physics-based simulation results.
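At its core, shooting and bouncing rays relies on geometric ray tracing: launch a ray, find the nearest surface it hits, reflect it specularly (d' = d - 2(d·n)n), and repeat. The Python sketch below covers only that geometric step, for infinite planes. A full SBR solver also tracks field amplitude and polarization along each ray and evaluates a physical-optics integral to obtain the scattered field, none of which is modeled here. The scene (a ground plane and one wall) is hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def reflect(d, n):
    """Specular reflection of direction d about unit surface normal n."""
    return sub(d, scale(n, 2.0 * dot(d, n)))

def trace(origin, direction, planes, max_bounces=3, eps=1e-9):
    """Follow one ray through up to max_bounces specular reflections.

    planes is a list of (point_on_plane, unit_normal). Returns (hit_points, path_length).
    """
    direction = normalize(direction)
    hits, length = [], 0.0
    for _ in range(max_bounces):
        best = None
        for point, normal in planes:
            denom = dot(direction, normal)
            if abs(denom) < eps:
                continue                      # ray runs parallel to this plane
            t = dot(sub(point, origin), normal) / denom
            if t > eps and (best is None or t < best[0]):
                best = (t, normal)            # keep the nearest hit in front of the ray
        if best is None:
            break                             # ray leaves the scene
        t, normal = best
        origin = add(origin, scale(direction, t))
        hits.append(origin)
        length += t
        direction = reflect(direction, normal)
    return hits, length

# Hypothetical scene: ground plane z = 0 and a wall at x = 10 m facing the radar.
scene = [((0.0, 0.0, 0.0), (0.0, 0.0, 1.0)), ((10.0, 0.0, 0.0), (-1.0, 0.0, 0.0))]
hits, length = trace((0.0, 0.0, 1.0), (1.0, 0.0, -0.2), scene)
# Ground bounce at about (5, 0, 0), then the wall at about (10, 0, 1).
```

Each of the millions of rays an SBR engine launches follows this kind of loop independently, which is why the method maps naturally onto GPU parallelism.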

RTR simulates both range-Doppler images and “raw” radar chirp-versus-frequency data. Raw data is used for post-processing such as angle-of-arrival (AoA) estimation, inverse synthetic aperture radar (ISAR) imaging, object detection, perception, and object classification.
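Raw chirp data becomes a range-Doppler image through two Fourier transforms. One transform runs across the samples within each chirp (fast time) and resolves range; the other runs across chirps (slow time) and resolves radial velocity. The following dependency-free Python sketch synthesizes idealized de-chirped FMCW samples for point targets and forms the image that way. It is not RTR's processing chain. The waveform parameters are hypothetical, a naive DFT stands in for an FFT library such as numpy.fft, and noise, windowing, antenna patterns, and range-Doppler coupling are ignored.

```python
import cmath
import math

C = 299_792_458.0  # speed of light, m/s

def dft(x):
    """Naive O(n^2) discrete Fourier transform; adequate for the tiny sizes used here."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]

def beat_cube(targets, fc=77e9, slope=30e12, fs=10e6, n_samples=32, chirp_period=50e-6, n_chirps=16):
    """Idealized de-chirped FMCW samples, indexed [chirp][sample], for point targets.

    Each target is (range_m, radial_velocity_mps, amplitude).
    """
    wavelength = C / fc
    cube = [[0j] * n_samples for _ in range(n_chirps)]
    for r, v, amp in targets:
        f_beat = 2 * slope * r / C       # fast-time frequency set by range
        f_doppler = 2 * v / wavelength   # slow-time frequency set by radial velocity
        for m in range(n_chirps):
            for n in range(n_samples):
                phase = 2 * math.pi * (f_beat * n / fs + f_doppler * m * chirp_period)
                cube[m][n] += amp * cmath.exp(1j * phase)
    return cube

def range_doppler_image(cube):
    """Range DFT per chirp, then Doppler DFT per range bin, with zero Doppler centered."""
    n_chirps, n_samples = len(cube), len(cube[0])
    range_profiles = [dft(chirp) for chirp in cube]
    image = [[0.0] * n_samples for _ in range(n_chirps)]
    for k in range(n_samples):
        doppler = dft([range_profiles[m][k] for m in range(n_chirps)])
        for l in range(n_chirps):
            shifted = (l + n_chirps // 2) % n_chirps  # equivalent of an fftshift on this axis
            image[shifted][k] = abs(doppler[l])
    return image

def peak(image):
    """Return (doppler_bin, range_bin) of the strongest cell."""
    cells = ((d, r) for d in range(len(image)) for r in range(len(image[0])))
    return max(cells, key=lambda dr: image[dr[0]][dr[1]])

# One hypothetical target at 14 m with a radial velocity of -5 m/s.
img = range_doppler_image(beat_cube([(14.0, -5.0, 1.0)]))
print(peak(img))  # (6, 9)
```

Here each range bin spans about 1.56 m and each Doppler bin about 2.43 m/s, so the target lands in range bin 9 and two bins below the zero-Doppler row (bin 8).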

RTR models radar waveforms, which influence the radar outputs. The frequency-modulated continuous-wave (FMCW) waveform is common in automotive radars. RTR users enter waveform details from the radar’s specification, and RTR outputs capture physics specific to the waveform, such as range-velocity coupling.
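Range-velocity coupling arises because, within a single chirp, the Doppler shift adds to the beat frequency that encodes range. With chirp slope S, carrier frequency f_c, and the beat frequency written as f_b = 2SR/c + 2v/λ, converting f_b back to range gives an apparent range of R + v·f_c/S. The Python sketch below evaluates that offset for two hypothetical chirp slopes. Whether the Doppler term adds or subtracts depends on chirp direction and sign convention, so the direction of the shift is an assumption here.

```python
C = 299_792_458.0  # speed of light, m/s

def apparent_range(range_m, radial_velocity_mps, fc_hz, slope_hz_per_s):
    """Range inferred from one chirp's beat frequency when the Doppler term adds to it."""
    wavelength = C / fc_hz
    f_beat = 2 * slope_hz_per_s * range_m / C + 2 * radial_velocity_mps / wavelength
    return C * f_beat / (2 * slope_hz_per_s)  # invert the range-only relation R = c*f_b/(2S)

# Hypothetical 77 GHz radar, target at 50 m with a 30 m/s radial velocity.
for slope in (30e12, 5e12):  # 30 MHz/us and 5 MHz/us chirps
    shift = apparent_range(50.0, 30.0, 77e9, slope) - 50.0
    print(f"slope {slope / 1e12:.0f} MHz/us -> range offset {shift:.3f} m")
# slope 30 MHz/us -> range offset 0.077 m
# slope 5 MHz/us -> range offset 0.462 m
```

The offset shrinks as the chirp gets steeper, which is one reason the exact waveform parameters matter when simulating a specific radar.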

Being able to measure the target range and its velocity has considerable practical applications, not the least of which is keeping the AV from bumping into the car in front of it as it slows or if it suddenly stops. Given that studies indicate AVs are involved in rear-end collisions more than any other type of accident, training forward sensors to detect when there’s danger ahead is critically important.
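Range and range rate together give a simple measure of that danger: time to collision, the range divided by the closing speed. The Python sketch below shows the calculation. The 2.5-second warning threshold is a hypothetical value for illustration, not a figure from the article or from any standard.

```python
import math

def time_to_collision(range_m: float, range_rate_mps: float) -> float:
    """Seconds until contact if both vehicles hold their current speeds.

    range_rate_mps is d(range)/dt as a radar reports it: negative when the gap is closing.
    Returns math.inf when the gap is constant or opening.
    """
    if range_rate_mps >= 0.0:
        return math.inf
    return range_m / -range_rate_mps

WARNING_TTC_S = 2.5  # hypothetical warning threshold

for gap_m, rate in [(30.0, -10.0), (15.0, -10.0), (30.0, 2.0)]:
    ttc = time_to_collision(gap_m, rate)
    status = "WARN" if ttc < WARNING_TTC_S else "ok"
    print(f"range {gap_m:.1f} m, rate {rate:+.1f} m/s -> TTC {ttc:.1f} s {status}")
```

A car 15 m ahead closing at 10 m/s leaves 1.5 seconds, which is why accurate range and velocity from forward radar matter so much for rear-end scenarios.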

Trained for Any Situation

Human error contributes to 94 percent of severe traffic accidents according to the U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA). The ADAS/AV community is working to make ADAS/AV systems safer than humans by eliminating the preoccupied, tired, or careless person behind the wheel.

At the same time, ADAS/AV systems lack human intuition and experience. People can tell whether a hazard up ahead is harmless litter to ignore, a stick in the road to swerve around, or a more serious roadblock that requires jamming on the brakes. Most ADAS/AV systems cannot yet make that distinction.

But the day when they can might not be too far off. With ultra-fast tools like RTR, which can model radar bouncing off the walls and through the windows with Autobahn-like speed, automakers will be able to train driverless cars to handle almost any situation, including scenarios that were impossible to model before. And that will put consumer confidence and acceptance into high gear.

Ansys will discuss the new RTR solver and show results of automotive scenarios in busy, complex environments at the NVIDIA GTC conference, April 12-16.

For more presentations on autonomy, attend Ansys Simulation World, April 20-22.
