Plunify is a software company in the electronic design automation (EDA) market with a focus on FPGAs. Its cloud-based solutions and optimization software, powered by machine learning, help designers achieve better quality of results, higher productivity and greater efficiency. Founded in 2009 and headquartered in Singapore, Plunify is a privately funded company that applies machine learning algorithms to FPGA timing and space optimization problems. It has offices in Singapore, Malaysia, China, and Japan, and a sales representation network covering all major markets. Plunify is a member of the Xilinx Alliance partner and Intel EDA partner programs.

What Plunify solves

Complex FPGA designs require highly iterative flows, going from static timing analysis back to RTL code modification, to achieve timing closure or other desired results. FPGA designers have long relied on this traditional approach, which consumes a lot of expensive engineering time. FPGA vendor tools such as Intel Quartus II and Xilinx Vivado/ISE provide the standard tool flow, and the engineer’s time is then spent (re)writing RTL source code and constraints to reach the target results. Plunify saw an opportunity to reduce redundant effort and extract the maximum level of optimization from the existing workflow by fully utilizing the optimization directives built into the FPGA tools.

With the emergence of cloud computing and Plunify’s own machine learning algorithms, designers can achieve timing closure, or get close to it, in less time. Design cycles complete faster, which significantly accelerates the process of getting complex products to market.

Products and Services

InTime is machine learning software that optimizes FPGA timing and performance. FPGA tools such as Vivado, Quartus and ISE provide massive optimization benefits with the right settings and techniques. InTime uses machine learning and built-in intelligence to identify such optimized strategies for synthesis and place-and-route. It actively learns from results to improve over time, extracting more than a 50% increase in design performance from the FPGA tools.

Plunify Cloud is a platform that simplifies the technical and security management aspects of accessing a cloud infrastructure. It enables any FPGA designer to compile and optimize FPGA applications in the cloud without having to be an IT expert. This is achieved with a suite of cloud-enabled tools, such as the FPGA Expansion Pack and AI Lab, that provide easy access to the cloud without the complexity of infrastructure and software configuration and maintenance.

[table] border=”1″ cellspacing=”0″ cellpadding=”0″ align=”left”

|-

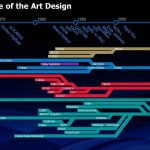

| style=”width: 115px; height: 9px” | Timeline

2009/ 2010

| style=”width: 480px; height: 9px” | • First lines of code were written

|-

| style=”width: 115px; height: 14px” | 2011

| style=”width: 480px; height: 14px” | • Plunify receives seed funding from Spring Singapore

|-

| style=”width: 115px; height: 9px” | 2012

| style=”width: 480px; height: 9px” | • Private Cloud Prototype – EDAxtend is released

|-

| style=”width: 115px; height: 9px” | 2013

| style=”width: 480px; height: 9px” | • InTime Development begins

|-

| style=”width: 115px; height: 20px” | 2014

| style=”width: 480px; height: 20px” | • InTime is released.

• The first major customer of InTime.

• Official Altera EDA partner

|-

| style=”width: 115px; height: 19px” | 2015

| style=”width: 480px; height: 19px” | • Official Xilinx Alliance partner.

• Adds Macnica and Avnet as reps in Asia.

|-

| style=”width: 115px; height: 9px” | 2016

| style=”width: 480px; height: 9px” | • Investment from KMP and Lanza TechVentures

|-

| style=”width: 115px; height: 19px” | 2017

| style=”width: 480px; height: 19px” | • Released Plunify Cloud platform.

• AWS selected partner in Asia for F1 instance

|-

| style=”width: 115px; height: 24px” | 2018

| style=”width: 480px; height: 24px” | • Partnership with Xilinx to enable FPGA development in the cloud.

• Released FPGA Expansion Pack and AI Lab

|-

Plunify Costs for Cloud Usage

Plunify Cloud is a platform that simplifies the technical and security management aspects of accessing a cloud infrastructure for FPGA designers. Free client tools are provided for FPGA designers to access the cloud. The platform uses a pre-paid credits system.

Pre-paid credits cost $0.084 each. Credits cover the cost of:

1. Cloud servers

2. Cloud data storage

3. Cloud bandwidth

4. Tool licenses, e.g. Vivado, InTime

5. Free use of client tools
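As a rough illustration of the pre-paid credits arithmetic, the sketch below converts between credits and US dollars at the $0.084 price quoted above. The function names are hypothetical, and how many credits a given cloud job actually consumes is not stated here, so any credit count passed in is an assumption of the caller.

```python
from decimal import Decimal

# Price per pre-paid credit in US dollars, as quoted in the text.
CREDIT_PRICE_USD = Decimal("0.084")


def credits_cost_usd(credits: int) -> Decimal:
    """Return the cost in US dollars of a number of credits, rounded to cents."""
    if credits < 0:
        raise ValueError("credits must be non-negative")
    return (CREDIT_PRICE_USD * credits).quantize(Decimal("0.01"))


def credits_for_budget(budget_usd: Decimal) -> int:
    """Return how many whole credits a US-dollar budget can buy."""
    if budget_usd < 0:
        raise ValueError("budget must be non-negative")
    return int(budget_usd // CREDIT_PRICE_USD)


# Example: 1,000 credits, and the credits a 100-dollar budget buys.
cost_of_1000 = credits_cost_usd(1000)
credits_for_100 = credits_for_budget(Decimal("100"))
```

Using `Decimal` rather than floating point keeps the currency arithmetic exact, which matters when a per-credit price has three decimal places.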

Cloud Enabled Tools

FPGA Expansion Pack

Expansion Pack Webpage

AI Lab

AI Lab provides greater access to Vivado and other Xilinx tools. There are no restrictions on the operating system: Vivado HLx can run on Macs and even Chromebooks. AI Lab also converts IT spending into an operating expenditure, eliminating capital expenditure and on-premise maintenance. This allows more accurate forecasts and scalability based on actual demand.

InTime is machine learning software that optimizes FPGA timing and performance. It does this by identifying optimized strategies for synthesis and place-and-route. It actively learns from multiple build results to improve over time, extracting up to a 50% increase in design performance from the FPGA tools.

There are usually three ways to optimize timing and performance:

- Optimizing RTL and constraints. This requires experience, is risky at a late stage, and can introduce bugs and re-verification delays.

- Optimizing synthesis and place-and-route settings.

- Using faster device types, which impacts cost and design.

It makes sense to use all three for improved overall performance.

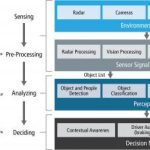

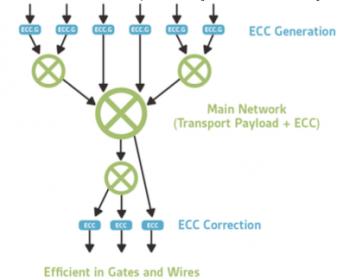

InTime works by:

- Generating strategies based on its database of previous results

- Implementing all strategies and running them in parallel

- Using machine learning to analyze results

- Updating the database with new knowledge

Strategies are combinations of synthesis and place-and-route settings, including placement constraints.
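The four steps above can be sketched as a small loop. This is a toy illustration only, not InTime's actual algorithm: the setting names are hypothetical, `simulated_build` returns a made-up slack value instead of running real FPGA tools, and a per-setting average stands in for the machine learning step.

```python
import itertools
import random
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

# Hypothetical setting names and values; real tool options differ.
SETTINGS = {
    "synth_effort": ["low", "medium", "high"],
    "place_effort": ["low", "medium", "high"],
    "retiming": [False, True],
}


def strategy_key(strategy):
    """Hashable form of a strategy, used to detect repeats."""
    return tuple(sorted(strategy.items()))


def simulated_build(strategy):
    """Stand-in for a synthesis + place-and-route run.

    Returns a made-up worst slack in ns (higher is better).
    """
    bonus = {"low": 0.0, "medium": 0.2, "high": 0.35}
    slack = -0.5 + bonus[strategy["synth_effort"]] + bonus[strategy["place_effort"]]
    if strategy["retiming"]:
        slack += 0.1
    return round(slack, 3)


def learn(database):
    """Average slack per (setting, value) pair; a simple stand-in for ML."""
    seen = defaultdict(list)
    for strategy, slack in database:
        for item in strategy.items():
            seen[item].append(slack)
    return {item: sum(v) / len(v) for item, v in seen.items()}


def generate_strategies(database, count, rng):
    """Pick untried strategies, preferring values that scored well so far."""
    candidates = [dict(zip(SETTINGS, values))
                  for values in itertools.product(*SETTINGS.values())]
    tried = {strategy_key(s) for s, _ in database}
    untried = [s for s in candidates if strategy_key(s) not in tried]
    scores = learn(database)
    # Values never tried get the overall mean, so they are neither favored nor penalized.
    neutral = sum(s for _, s in database) / len(database) if database else 0.0
    rng.shuffle(untried)  # random tie-breaking; the sort below is stable
    untried.sort(key=lambda s: sum(scores.get(i, neutral) for i in s.items()),
                 reverse=True)
    return untried[:count]


def optimize(rounds=3, per_round=4, seed=0):
    """Run the generate / build in parallel / learn / update loop."""
    rng = random.Random(seed)
    database = []  # list of (strategy, slack) results
    for _ in range(rounds):
        strategies = generate_strategies(database, per_round, rng)
        with ThreadPoolExecutor() as pool:  # run this round's builds in parallel
            slacks = list(pool.map(simulated_build, strategies))
        database.extend(zip(strategies, slacks))  # update the database
    return max(database, key=lambda entry: entry[1]), database


(best_strategy, best_slack), database = optimize()
```

Each round draws on everything recorded so far, which is why the database is passed back into `generate_strategies` rather than being consulted only once at the start.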

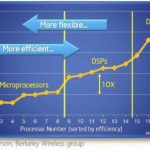

Default settings are rarely adequate, so InTime’s machine learning algorithms look for optimal groups of settings that provide predictable improvements.

Large deployments that analyze multiple designs have an advantage: the additional data in the database improves the inferences the machine learning can draw.

Summary Overview of the Tools

Resources: