In wearables and hearables, low power is king. Earbuds, for example, still manage only about half a day of active use before they need recharging. Half a day falls short of truly convenient for most of us; a full day would be much better, allowing for overnight recharge. Physics limits battery sizes, so system designers must look to SoC architectures to reduce power further. BLE is the obvious choice for communication with a nearby phone or other device, but that is only a minimum requirement; further extending battery life requires more ingenuity. Some will come from cleverness in software running on an MCU or DSP, managing sensing, audio, automatic noise reduction and gesture recognition. However, one significant opportunity is often overlooked: power reduction through application-specific SRAMs.

Why look to SRAMs for power reduction?

Embedded SRAM compilers provide some help in managing power, though typically at a fairly coarse grain. There are two reasons to look further. First, memory compiler vendors have consolidated significantly and, together with foundry IP services, must serve a broad range of market needs. Those providers can’t optimize for every possibility; instead, they tune for the most popular options. Any design with an application-specific requirement must make do with a close but probably less than ideal fit. Second, all competitors have access to the same compilers. Since embedded memory likely consumes a significant percentage of the power budget, using a mainstream memory is a missed opportunity to differentiate on power.

Is it really possible to do better than the standard compilers? Dynamic power control in a memory doesn’t work the same way as in logic. Standard compilers offer a variety of compile options which primarily control the periphery and array-wide power switching. One way to go deeper while still using standard bitcells is to augment standard memory architectures. Possible techniques include clever address coding, limiting voltage swings on long lines, and tighter control of bitline voltage swings. For leakage, splitting the memory into multiple banks allows each bank to be controlled independently, and bitline control can also reduce leakage. Together these options can contribute significant power savings, both dynamic and static.
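To make the banking argument concrete, here is a minimal sketch of how per-bank power states change total leakage. The three states and the relative leakage figures are hypothetical placeholders chosen for illustration; they are not sureCore or foundry data, and real values depend on process, temperature and bank size.

```python
from enum import Enum


class BankState(Enum):
    ACTIVE = "active"        # fully powered, readable and writable
    RETENTION = "retention"  # lowered supply, contents kept, no access
    OFF = "off"              # power-gated, contents lost


# Hypothetical leakage per bank, relative to an active bank (= 1.0).
# These numbers are assumptions for illustration only.
RELATIVE_LEAKAGE = {
    BankState.ACTIVE: 1.0,
    BankState.RETENTION: 0.3,
    BankState.OFF: 0.02,
}


def array_leakage(states, leakage=RELATIVE_LEAKAGE):
    """Total relative leakage of a banked memory, one state per bank."""
    return sum(leakage[state] for state in states)


def leakage_saving(states, leakage=RELATIVE_LEAKAGE):
    """Fractional leakage saving versus keeping every bank active."""
    if not states:
        raise ValueError("at least one bank is required")
    baseline = len(states) * leakage[BankState.ACTIVE]
    return 1.0 - array_leakage(states, leakage) / baseline


if __name__ == "__main__":
    # Four banks: one in use, one holding wake-up state, two switched off.
    banks = [BankState.ACTIVE, BankState.RETENTION, BankState.OFF, BankState.OFF]
    print(f"relative leakage: {array_leakage(banks):.2f} of 4.00")
    print(f"saving vs all-active: {leakage_saving(banks):.1%}")
```

The point of the model is only that leakage becomes a sum over independently controlled banks, so a finer-grained banking scheme gives the system more ways to switch off what it is not using.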

Going lower

Another familiar way to reduce power is to reduce voltage in DVFS operation. Here again, the constraints for memory are a little different than those for logic. The lower bound is the minimum voltage at which a memory cell can retain its value – Vret. However, the lowest voltage at which that memory content can be reliably accessed – Vmin – is normally close to the nominal operating voltage. A memory design which could drop Vmin closer to Vret could deliver significant power saving thanks to the V² factor in dynamic power.
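The size of that V² effect is easy to estimate. The sketch below assumes the usual first-order model in which dynamic power is proportional to V² at fixed switched capacitance, activity and frequency; the supply voltages are hypothetical and do not describe any particular process or product.

```python
def dynamic_power_ratio(v_low, v_nominal):
    """Dynamic power at v_low relative to v_nominal.

    First-order model: P_dyn is proportional to V^2, with capacitance,
    activity and frequency held constant (an assumption of this sketch).
    """
    if v_low <= 0 or v_nominal <= 0:
        raise ValueError("voltages must be positive")
    return (v_low / v_nominal) ** 2


def dynamic_saving_percent(v_low, v_nominal):
    """Percentage dynamic power saved by running at v_low instead of v_nominal."""
    return 100.0 * (1.0 - dynamic_power_ratio(v_low, v_nominal))


if __name__ == "__main__":
    V_NOMINAL = 0.9  # hypothetical nominal supply, in volts
    for v in (0.9, 0.8, 0.7, 0.6):  # hypothetical reduced supplies
        saving = dynamic_saving_percent(v, V_NOMINAL)
        print(f"{v:.1f} V -> {saving:.1f}% dynamic power saved")
```

In practice frequency usually has to drop along with voltage, so the energy and power pictures differ, but the quadratic term is why even a modest reduction in Vmin is worth pursuing.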

sureCore memory solutions

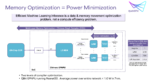

sureCore, based in the UK, has built application-specific compilers for low-power memories taking advantage of such ideas. One product, PowerMiser, offers single-port SRAM IP with programmable sleep modes. PowerMiser delivers up to 50% dynamic and 20% static power reduction over competing options and is available as a compiler or as single instances. EverOn is the ultra-low-power option, supporting operating voltages as low as Vret. In a banked memory, a single bank can be powered at this low level to support wake functionality while the other banks are powered off. EverOn is also available as a compiler or as single instances, and has shown a 70% reduction in dynamic power and 60% in leakage power.

Another product line, MiniMiser, builds register files tuned to application-specific needs. The single-rail design is not based on foundry bitcells and can be connected directly to system logic. MiniMiser memories commonly deliver power savings of more than 50% over competing options and are typically delivered as instances.

Not magic but careful design

How is sureCore able to offer these advantages? They build their own memory architectures, their own compilers and in some instances their own bitcells, guided by discussions with customers about their system application needs. They have also built their own automation for verification and robust characterization. Paul Wells, the CEO, tells me that in many instances sureCore see common themes around wearables, around AI, and around other applications. In some cases these drive standardized compilers, though they also advise clients on how to optimize configurations to system needs. Discussions might examine factors such as read versus write dominance in an application, for example.

In cases where a client needs the best possible power profile, sureCore offer a custom program called sureFIT. Here they work with a client using every trick in the book to build a one-of-a-kind solution. Techniques here include segmented arrays and bit-line voltage control, near-threshold operation, pipelined read circuitry and more.

sureCore memories already have deployments in several wearable, AI and RISC-V applications. You should check them out. As an IP provider whose offerings range from standard solutions to bespoke options, delivering best-in-class power profiles for many edge applications, sureCore is an intriguing proposition. It will be interesting to see how their business evolves!