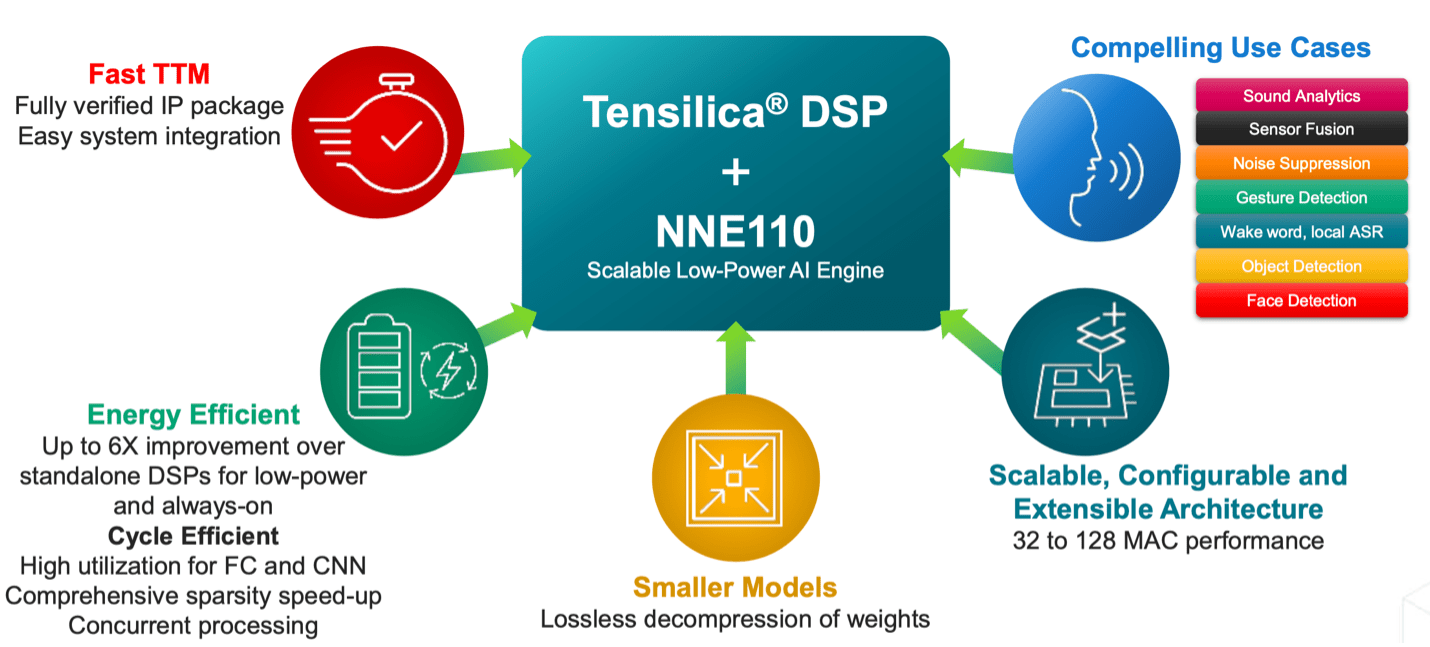

The Linley Spring Conference this year had a significant focus on AI at the edge, with all that implies. Low power and energy are key considerations, though rising performance demands in some applications are making them harder to meet. David Bell (Product Marketing at Tensilica, Cadence) presented the Tensilica NNE110 engine for boosting DSP-based AI, using a couple of smart speaker applications to illustrate its capabilities. Amid a firehose of imaging AI in the media, I for one am always happy to hear more about voice AI. The day when voice banishes keyboards can’t come soon enough for me 😎. Naturally, these Tensilica edge advances also support vision applications.

The Need

DSPs are strong platforms for ML processing since ML needs have much in common with signal processing. Support for parallelism and accelerated multiply-accumulate (MAC) operations has been essential in measuring, filtering and compressing analog signals for many decades, so the jump to ML applications is a natural one. As ML algorithms rapidly evolve, DSP architectures are evolving too, with more parallelism, more MACs and more emphasis on keeping big data sets (weights, images, etc.) on-chip for as long as possible to limit latency and power.
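To make the overlap concrete, here is a minimal sketch in plain Python (not Tensilica code) showing that a classic FIR filter and a fully connected neural-network layer both reduce to the same multiply-accumulate loop. The function names `mac`, `dot`, `fir_filter` and `dense_layer` are illustrative choices, not part of any Cadence API.

```python
def mac(acc, a, b):
    """One multiply-accumulate step, the primitive that DSP hardware accelerates."""
    return acc + a * b


def dot(xs, ws):
    """Dot product built purely from MAC steps."""
    acc = 0.0
    for x, w in zip(xs, ws):
        acc = mac(acc, x, w)
    return acc


def fir_filter(signal, taps):
    """FIR filter: y[i] = sum over k of taps[k] * signal[i - k]."""
    n = len(taps)
    # Zero-pad the start so the first outputs see "silence" before the signal.
    padded = [0.0] * (n - 1) + list(signal)
    return [dot(reversed(padded[i:i + n]), taps) for i in range(len(signal))]


def dense_layer(inputs, weights, biases):
    """Fully connected layer: one dot product per output neuron, plus a bias."""
    return [dot(inputs, row) + b for row, b in zip(weights, biases)]


# A two-tap moving average and a tiny 2x2 layer share the same inner loop.
smoothed = fir_filter([1.0, 2.0, 3.0], [0.5, 0.5])
activations = dense_layer([1.0, 2.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 1.0])
```

Both routines spend all their arithmetic in `dot`, which is why hardware tuned for one tends to serve the other well.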

Another area of evolution is in dedicated accelerators that augment the DSP for specific functions with even lower latency and power. In voice-based applications, two very important examples are noise suppression and trigger word detection. In noise suppression, intelligent filtering can now do better than conventional active noise filtering. Trigger word detection must be always-on, allowing the rest of the system to remain off until needed, so the ML that recognizes trigger words has to run at ultra-low power.

Meeting these needs with NNE110

A now-popular method for de-noising is based on an LSTM network trained to separate speech from environmental noise, which allows it to adapt across a wide variety of environments. Profiling reveals that 77% of the operations in a pure DSP implementation are matrix and vector operations, and about half the remaining operations are in activation functions such as sigmoid or tanh. These are obvious candidates to run on the accelerator. Comparing pure DSP and DSP+NNE implementations, both latency and power improve by over 3X. For a different de-noising algorithm, latency and power reduce even more dramatically, by 12X and 15X respectively. This second algorithm is a CNN based on U-NET, here adapted from a different domain.
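As a rough sanity check on the LSTM numbers, Amdahl's law relates the offloadable fraction of work to the overall speedup. The sketch below is my own back-of-envelope arithmetic, not something from the presentation. It assumes that the quoted operation shares approximate shares of run time (an assumption the profile does not guarantee) and that "about half the remaining" means exactly half. The function names are illustrative.

```python
def amdahl_speedup(offload_fraction, accel_factor):
    """Overall speedup when a fraction of the work runs accel_factor times faster."""
    return 1.0 / ((1.0 - offload_fraction) + offload_fraction / accel_factor)


def required_accel_factor(offload_fraction, target_speedup):
    """Speedup the accelerator must deliver on the offloaded part to hit a target."""
    remaining = 1.0 / target_speedup - (1.0 - offload_fraction)
    if remaining <= 0:
        raise ValueError("target exceeds the Amdahl ceiling for this fraction")
    return offload_fraction / remaining


# Figures quoted for the LSTM de-noiser on a pure DSP implementation.
matrix_vector = 0.77                         # matrix and vector operations
activations = 0.5 * (1.0 - matrix_vector)    # assumed: exactly half of the rest
offloadable = matrix_vector + activations    # about 0.885

# Best possible overall speedup if the offloaded work cost nothing at all.
ceiling = 1.0 / (1.0 - offloadable)          # roughly 8.7X

# Accelerator speedup on the offloaded part needed for a 3X overall gain.
needed = required_accel_factor(offloadable, 3.0)   # roughly 4X
```

Under these assumptions, the reported gain of over 3X sits comfortably below the ceiling of roughly 8.7X. The 12X figure for the U-NET case does not contradict this, since that network has a different operation profile which the write-up does not break down.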

Implementation

The NNE accelerator appears to slot cleanly into the standard Tensilica XAF flow. When mapping operations from TensorFlow Lite for Microcontrollers, the standard Tensilica HiFi options are reference ops and HiFi-optimized ops. NNE ops are just another option, connected through a driver to the accelerator. In development, supported operations simply map to the accelerator rather than to one of the other classes of ops.
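The mapping idea can be pictured as a priority lookup: use the accelerator where an op is supported, otherwise fall back to HiFi-optimized code, otherwise to the reference implementation. The sketch below is purely illustrative and is not the XAF or TensorFlow Lite for Microcontrollers API. The op names are standard TensorFlow Lite operator names, but which backend supports which op is invented here for the example. In a real toolchain this selection may well happen at build time rather than at run time.

```python
# Hypothetical support sets: the actual NNE110 and HiFi op coverage may differ.
NNE_OPS = {"CONV_2D", "FULLY_CONNECTED", "LOGISTIC", "TANH"}
HIFI_OPTIMIZED_OPS = {"CONV_2D", "FULLY_CONNECTED", "LOGISTIC", "TANH", "SOFTMAX"}
REFERENCE_OPS = HIFI_OPTIMIZED_OPS | {"RESHAPE"}

# Backends in priority order: accelerator first, portable reference code last.
BACKENDS = [
    ("nne", NNE_OPS),
    ("hifi_optimized", HIFI_OPTIMIZED_OPS),
    ("reference", REFERENCE_OPS),
]


def select_backend(op_name):
    """Return the highest-priority backend that supports the given op."""
    for backend, supported in BACKENDS:
        if op_name in supported:
            return backend
    raise KeyError(f"no backend implements op {op_name!r}")


def plan(model_ops):
    """Pair every op in a model with the backend chosen to run it."""
    return [(op, select_backend(op)) for op in model_ops]


# Example: a toy model whose ops land on three different backends.
mapping = plan(["FULLY_CONNECTED", "TANH", "SOFTMAX", "RESHAPE"])
```

The appeal of this arrangement is that the application model does not change: only the set of ops routed to the accelerator does.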

David pointed out that multiple applications, in the visual domain as well as in voice recognition, can benefit from this fast and very low-power always-on extension. Obvious candidates include trigger word recognition, visual wake words, gesture detection and more.

If you want to learn more, you probably needed to be registered for the Linley conference to get the slides; however, Cadence has a web page on NNE. You can also learn more about the LSTM algorithm HERE and the U-NET algorithm HERE.

Also read:

ML-Based Coverage Refinement. Innovation in Verification

Cadence and DesignCon – Workflows and SI/PI Analysis

Symbolic Trojan Detection. Innovation in Verification
