The various algorithms that comprise artificial intelligence (AI) are finding their way into the chip design flow. What is driving a lot of this work is the complexity explosion of new chip designs required to accelerate advanced AI algorithms. It turns out AI is both the problem and the solution in this case. AI can be used to cut the AI chip design problem down to size. Synopsys has been developing AI-assisted design capabilities for quite a while, beginning with the release of a design space optimization capability (DSO.ai) in 2020. Since then, several new capabilities have been announced, significantly expanding its AI-assisted footprint. You can get a good overview of what Synopsys is working on here. One of the capabilities in the Synopsys portfolio focuses on verification space optimization (VSO.ai). The real test of any new capability is its use by a real customer on a real design, and that is the topic of this post. Read on to see how AMD puts Synopsys AI verification tools to the test.

VSO.ai – What it Does

Test coverage of a design is the core issue in semiconductor verification. The battle cry is, “if you haven’t exercised it, you haven’t verified it.” Stimulus vectors are generated using a variety of techniques, with constrained random being a popular approach. Those vectors are then used in simulation runs on the design, looking for test results that don’t match expected results.

By exercising more of the circuit, the chance of finding functional design flaws is increased.

Verification teams choose structural code coverage metrics (line, expression, block, etc.) of interest and automatically add them to simulation runs. As each test iteration generates constrained-random stimulus conforming to the rules, the simulator collects metrics for all the forms of coverage included. The results are monitored, with the goal of tweaking the constraints to try to improve the coverage. At some point, the team decides that they have done the best that they can within the schedule and resource constraints of the project, and they tape out.

Code coverage does not reflect the intended functionality of the design, so user-defined coverage is important. This is typically a manual effort, spanning only a limited percentage of the design’s behavior. Closing coverage and achieving verification goals is quite difficult.

A typical chip project runs many thousands of constrained-random simulation tests with a great deal of repetitive activity in the design. As a result, the rate of new coverage slows, and the benefit of each new test diminishes over time.
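To make the diminishing-returns effect concrete, here is a minimal sketch. It assumes each coverage bin is hit independently by any one test with a fixed probability, which is a simplification, and the bin counts and probabilities are made up for illustration. Under that assumption, the expected coverage after n tests is the average of 1 - (1 - p)^n over all bins.

```python
def expected_coverage(hit_probs, num_tests):
    """Expected fraction of bins hit at least once after num_tests tests.

    Assumes every test hits bin i independently with probability hit_probs[i].
    """
    return sum(1 - (1 - p) ** num_tests for p in hit_probs) / len(hit_probs)


# Hypothetical design (illustrative numbers only):
# 80 easy bins, 15 moderately hard bins, 5 rare corner-case bins.
hit_probs = [0.2] * 80 + [0.01] * 15 + [0.0005] * 5

for n in (10, 100, 1000, 10000):
    print(f"{n:>6} tests -> {expected_coverage(hit_probs, n):.1%} expected coverage")
```

Each tenfold increase in test count buys less new coverage than the one before it, because the remaining bins are the ones that random stimulus rarely reaches.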

At some point, the curve flattens out, often before goals are met. The team must try to figure out what is going on and improve coverage as much as possible within time and resource constraints. This “last mile” of the process is quite challenging. The amount of data collected is overwhelming, and analyzing it to determine the root cause of a coverage hole is difficult and labor-intensive. For example, is an unhit bin illegal for this configuration, or is it a true coverage hole?

The design of complex chips contains many problems that look like this – the requirement to analyze vast amounts of data and identify the best path forward. The good news is that AI techniques can be applied to this class of problems quite successfully.

For coverage definition, Synopsys VSO.ai infers some types of coverage beyond traditional code coverage to complement user-specified coverage. Machine learning (ML) can learn from experience and intelligently reuse coverage when appropriate. Even during a single project, learnings from earlier coverage results can help to improve coverage models.

VSO.ai works at the coarse-grained test level and provides automated, adaptive test optimization that learns as the results change. Running the tests with highest ROI first while eliminating redundant tests accelerates coverage closure and saves compute resources.
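The idea of running the highest-value tests first and dropping redundant ones can be illustrated with a simple greedy ranking. This is only a sketch of the general technique, not a description of how VSO.ai works internally. The test names and bins are hypothetical, and it assumes each test's coverage is a fixed, known set, which real constrained-random tests do not guarantee from seed to seed.

```python
def rank_tests(test_coverage):
    """Greedy ordering: always pick the test that adds the most new bins.

    test_coverage maps a test name to the set of bins it covers.
    Returns (ordered, redundant): tests worth running, best first, and
    tests that add nothing once the ordered ones have run.
    """
    remaining = dict(test_coverage)
    covered = set()
    ordered = []
    while remaining:
        # Largest gain wins; ties are broken alphabetically for determinism.
        best = min(remaining, key=lambda t: (-len(remaining[t] - covered), t))
        gain = remaining.pop(best) - covered
        if not gain:
            break  # every test still left is redundant
        covered |= gain
        ordered.append(best)
    redundant = sorted(set(test_coverage) - set(ordered))
    return ordered, redundant


# Hypothetical regression: four tests and the bins each one hits.
tests = {
    "t_reset": {"a", "b"},
    "t_burst": {"b", "c", "d"},
    "t_idle": {"a"},
    "t_mixed": {"c", "d"},
}
ordered, redundant = rank_tests(tests)
print("run in this order:", ordered)
print("redundant:", redundant)
```

In this toy regression, two of the four tests reach every bin, so the other two could be dropped without losing coverage.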

The tool also works at the fine-grained level within the simulator to improve the test quality of results (QoR) by adapting the constrained-random stimulus to better target unexercised coverage points. This not only accelerates coverage closure, but also drives convergence to a higher percentage value.
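A toy version of adapting stimulus toward unexercised coverage points might look like the following. The post does not describe the tool's actual algorithm, so this is an assumption-laden sketch: the opcode names, the starting weights, and the boost factor are all invented, and the "constraint" is just a weighted random choice.

```python
import random


def run_batch(weights, num_samples, rng):
    """Draw stimulus values with the given weights and count hits per value."""
    values = list(weights)
    draws = rng.choices(values, weights=[weights[v] for v in values], k=num_samples)
    counts = {v: 0 for v in values}
    for v in draws:
        counts[v] += 1
    return counts


def rebalance(weights, hit_counts, boost=4.0):
    """Return new weights that favor values whose bins are still unhit."""
    return {
        v: w * boost if hit_counts.get(v, 0) == 0 else w
        for v, w in weights.items()
    }


rng = random.Random(2024)  # fixed seed so the run is repeatable
# Hypothetical opcode distribution: ABORT and FLUSH are rarely generated.
weights = {"READ": 50.0, "WRITE": 45.0, "FLUSH": 4.0, "ABORT": 1.0}
total_hits = {op: 0 for op in weights}

for batch in range(3):
    counts = run_batch(weights, 20, rng)
    for op, n in counts.items():
        total_hits[op] += n
    # Values that are still unhit get boosted again before the next batch.
    weights = rebalance(weights, total_hits)
    print(batch, total_hits, weights)
```

The feedback loop is the point: coverage results from one batch reshape the distribution used for the next, so rarely generated values become progressively more likely until they are hit.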

The last mile closure challenge is addressed by automated, AI-driven analysis of coverage results. VSO.ai performs root cause analysis (RCA) to determine why specific coverage points are not being reached. If the tool can resolve the situation itself, it will. Otherwise, it presents the team with actionable results, such as identifying conflicting constraints.
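As an illustration of what "identifying conflicting constraints" can mean, the sketch below brute-forces a tiny stimulus space to find the smallest group of constraints that makes a coverage bin unreachable. It is a hedged example of the general idea, not the tool's method. The field names, constraint names, and bins are hypothetical, and exhaustive enumeration only works for very small domains.

```python
from itertools import combinations, product


def reachable(domain, constraints, bin_pred):
    """True if some stimulus satisfies every constraint and lands in the bin."""
    names = list(domain)
    for values in product(*(domain[n] for n in names)):
        stim = dict(zip(names, values))
        if bin_pred(stim) and all(c(stim) for c in constraints.values()):
            return True
    return False


def blocking_constraints(domain, constraints, bin_pred):
    """Smallest set of constraint names that makes the bin unreachable.

    Returns None if the bin is reachable (a true coverage hole), or []
    if the bin cannot be hit even with no constraints at all.
    """
    if reachable(domain, constraints, bin_pred):
        return None
    for size in range(len(constraints) + 1):
        for subset in combinations(sorted(constraints), size):
            chosen = {name: constraints[name] for name in subset}
            if not reachable(domain, chosen, bin_pred):
                return list(subset)


# Hypothetical stimulus fields and constraints.
domain = {"burst_len": range(1, 9), "mode": ["single", "burst"]}
constraints = {
    "c_single_len": lambda s: s["mode"] != "single" or s["burst_len"] == 1,
    "c_max_len": lambda s: s["burst_len"] <= 4,
    "c_force_single": lambda s: s["mode"] == "single",
}

print(blocking_constraints(domain, constraints, lambda s: s["burst_len"] >= 2))
print(blocking_constraints(domain, constraints, lambda s: s["burst_len"] == 1))
```

Here neither `c_force_single` nor `c_single_len` blocks the first bin on its own, but together they do. That is the kind of actionable result a verification engineer can fix by relaxing a constraint or marking the bin illegal for this configuration.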

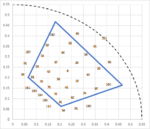

The figure below summarizes the benefits VSO.ai can deliver. A top-level benefit of these approaches is the achievement of superior results in less time with less designer effort. We will re-visit this statement in a moment.

What AMD Found

At the recent Synopsys Users Group (SNUG) held in Silicon Valley, AMD presented a paper entitled, “Drop the Blindfold: Coverage-Regression Optimization in Constrained-Random Simulations using VSO.ai (Verification Space Optimization).” The paper detailed AMD’s experiences using VSO.ai on several designs. AMD had substantial goals and expectations for this work:

Reach 100% coverage consistently with small RTL changes and design variants, but in an optimized, automated way.

AMD applied a well-documented methodology using VSO.ai across regression samples for four different designs. The figure below summarizes these four experiments.

AMD then presented a detailed overview of these designs, their challenges and the results achieved by using VSO.ai, compared to the original effort without VSO.ai. Recall one of the hallmark benefits of applying AI to the design process:

Achievement of superior results in less time with less designer effort

In its SNUG presentation, awarded one of the Top 10 Best Presentations at the event, AMD summarized the observed benefits as follows:

- 1.5 – 16X reduction in the number of tests being run across the four designs to achieve the same coverage

- Quick, on-demand regression qualifier

- Can be used to gauge how good the test distribution of a regression is if the user is uncertain about the number of iterations needed

- Potentially target more bins under same budget

- If default regression(s) do not achieve 100% coverage, VSO.ai can potentially exceed this (i.e., experiment #1)

- Testcase(s) removal in coverage regressions if not contributing

- More reliable test grading for constrained-random tests
  - URG (Unified Report Generator): seed-based
  - VSO.ai: probability-based

- Debug
  - Uncover coverage items that have a lower probability of being hit than expected
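One way to read the contrast between seed-based and probability-based grading is sketched below. This is an interpretation, not something the AMD summary spells out: grading a single seed records only which bins that one run happened to hit, while looking across several seeds gives an estimate of how likely a test is to hit each bin. The run data is invented for illustration.

```python
def hit_probabilities(runs):
    """Estimate, per bin, the fraction of seeds in which a test hit it.

    runs is a list of sets, one per seed, holding the bins hit in that run.
    """
    bins = set().union(*runs)
    return {b: sum(b in r for r in runs) / len(runs) for b in sorted(bins)}


# Hypothetical results for one constrained-random test run with four seeds.
runs = [{"a", "b"}, {"a"}, {"a", "c"}, {"a"}]

seed_based = runs[0]                 # what grading a single seed would record
probability_based = hit_probabilities(runs)

print("single seed saw:", sorted(seed_based))
print("estimated hit probability:", probability_based)
```

Grading only the first seed would credit this test with bin `b` and miss bin `c` entirely. The per-bin estimate shows that both are hit in roughly one run out of four, which is also the kind of signal that flags coverage items with a lower hit probability than expected.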

This presentation put VSO.ai to the test and the positive impact of the tool was documented. As mentioned, this kind of user application to real designs is the real test of a new technology. And that’s how AMD puts Synopsys AI verification tools to the test.
