Atrenta ran an online survey of its users. Atrenta’s users are not necessarily representative of the whole marketplace, so it is unclear how well the results generalize to the bigger picture; your mileage may vary. About half the respondents were design engineers and a quarter were CAD engineers, with the rest split between test engineers, verification engineers and other roles.

Some questions focus on the use of Atrenta’s tools, which I don’t think is of such wide interest, so I’ll focus on the things that caught my eye.

Firstly, the method to design your RTL. Do you create it from scratch or modify existing RTL? It is now a 40:60 split with 40% of designers writing their own RTL and 60% modifying existing RTL.

When it comes to the top-level RTL, there is a split between doing it manually (57%), with scripts (57%) and using a 3rd party EDA tool (12%). Yes, those numbers total more than 100%: some people obviously use more than one technique.
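To see what a total above 100% implies, here is a minimal Python sketch of the arithmetic. It assumes each percentage is a share of all respondents, which the survey summary does not state explicitly, and the variable names are mine rather than anything from the survey.

```python
# Shares (percent of respondents) reported for how top-level RTL is created.
# Assumption: each share is measured against all respondents, and a
# respondent may select more than one method.
shares = {"manual": 57, "scripts": 57, "third_party_eda_tool": 12}

# Sum of the shares; anything above 100 comes from multiple selections.
total = sum(shares.values())

# Average number of methods selected per respondent.
avg_methods = total / 100

# Inclusion-exclusion lower bound: at least this share of respondents
# must use both manual editing and scripts.
min_manual_and_scripts = max(0, shares["manual"] + shares["scripts"] - 100)

print(f"total: {total}%")
print(f"average methods per respondent: {avg_methods:.2f}")
print(f"at least {min_manual_and_scripts}% use both manual and scripts")
```

Under that assumption, the respondents picked about 1.26 methods each on average, and at least 14% of them must combine manual editing with scripts.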

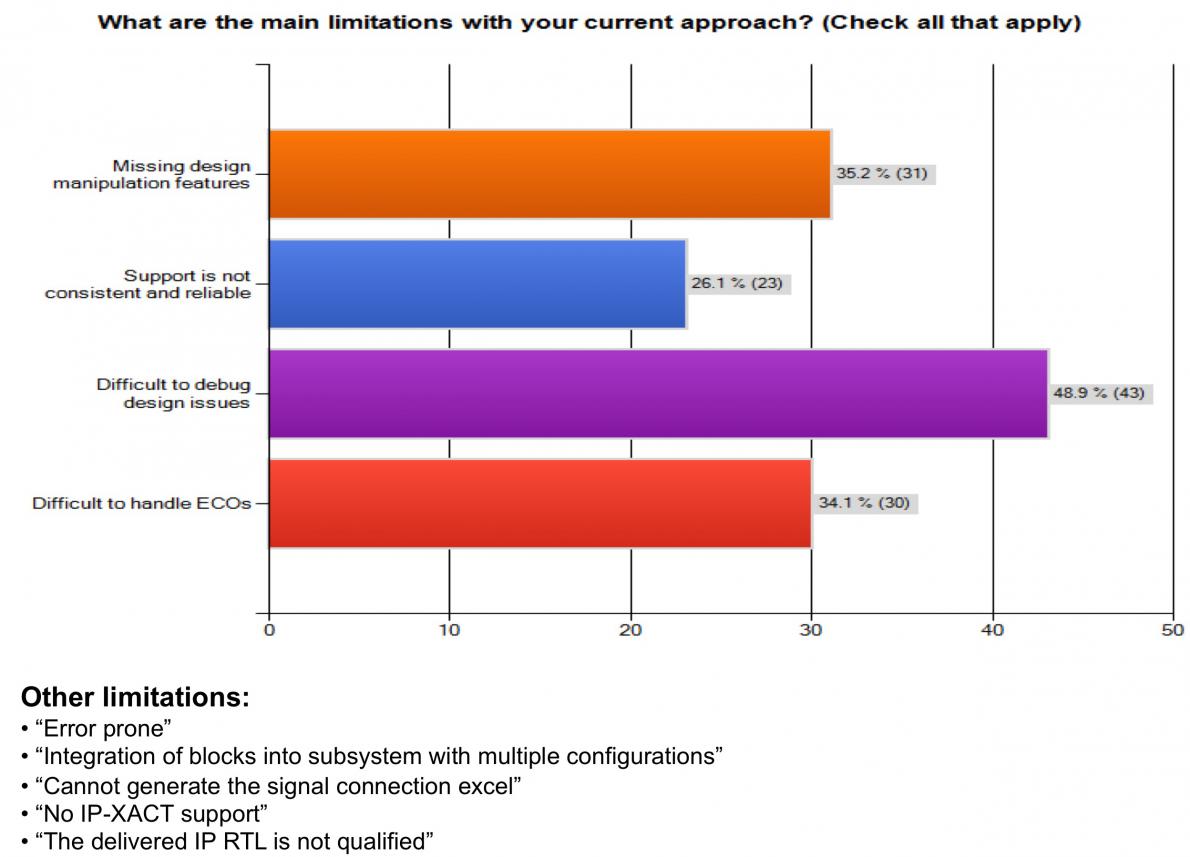

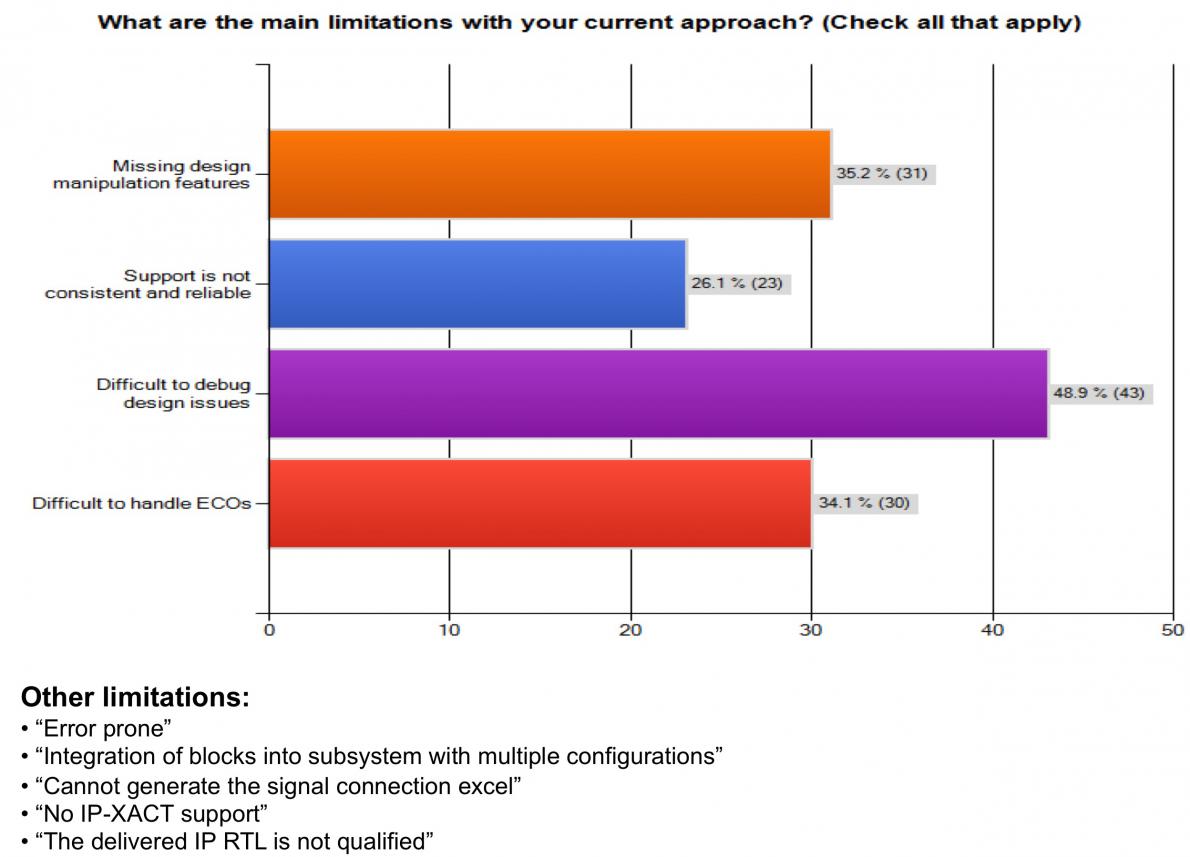

On the main limitations of their current approach, designers had a litany of woes: missing design manipulation features (35%), ECOs that are hard to handle (34%) and support that is not consistent and reliable (26%). But clearly the #1 problem is the difficulty of debugging design issues, at 49%. Many other things were listed, from missing IP-XACT files and unqualified IP to just plain “error prone”.

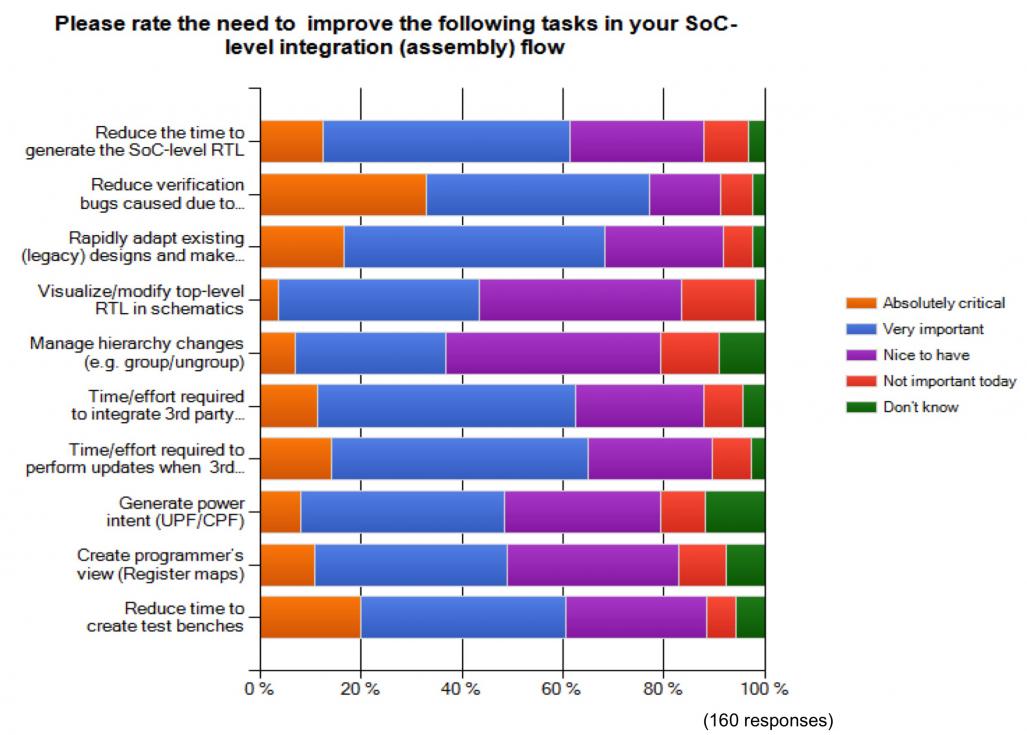

The final question was about what aspects of the design flow were most critical to improve. The choices for each feature were critical, very important, nice to have, not important and don’t know. So let’s take the critical and very important groups and see what the top concerns were.

First was reducing verification bugs due to connectivity problems. The next three are all facets of a similar problem: rapidly adapting legacy designs, the effort to integrate 3rd party IP and the effort to make updates when 3rd party IP is in use. Slightly behind that is reducing the time and effort to create test benches.
