Many of us are now somewhat fluent in IoT-speak, though at times I wonder if I’m really up on the latest terminology. Between edge and extreme edge, fog and cloud, not to mention emerging hierarchies in radio access networks, how this all plays out is going to be an interesting game to watch. Ron Lowman, DesignWare IP Product Marketing Manager at Synopsys, recently released a technical bulletin that provides some quantified insight into the motivation for moving compute and AI closer to the edge, and into how these changes affect IP selection and system architectures.

Hierarchy in radio access networks

The basics are well understood by now, I think. Shipping zettabytes of data from billions or trillions of edge devices to the cloud was never going to happen; it is too expensive in power and bandwidth. So we start moving more of the compute closer to the edge, handling more of the data locally over short hops. Ron cites a Rutgers/Inria study that used a Microsoft HoloLens in an augmented reality (AR) application, tasked with QR code recognition, scene segmentation, and localization and mapping. In each case the HoloLens first connects to an edge server. In one experiment, the AI functions are shipped off to a cloud server; in a second, they are performed on the edge server itself. Total round-trip latency in the first case was 80-100 ms or more. In the second case, it was only 2-10 ms.

Not surprising, but the implications are important. The cloud latency is easily long enough to induce motion sickness in that AR user, and in other applications it could be a safety problem. The edge-compute round-trip latency is much less of a problem. Ron adds that 5G offers use cases that could drop latency below 1 ms, which would make the case for edge-based compute no contest. Going to the cloud is fine for latency-insensitive applications (as long as you don’t mind the cost of all that transmission, or the privacy concerns, but I digress). For any real-time application, compute and AI have to sit close to the application.
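To make the latency argument concrete, here is a minimal Python sketch that picks the most remote tier whose worst-case round trip still fits a latency budget. The tier latencies are the upper ends of the ranges reported in the HoloLens study (100 ms for the cloud, which the study says could be more, and 10 ms for the edge server). The `Tier` and `place` names and the budget values are hypothetical, chosen only for illustration; no real motion-sickness or safety threshold is implied.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Tier:
    """A compute location and its worst-case round-trip latency."""
    name: str
    worst_case_rtt_ms: float


# Upper ends of the round-trip ranges from the Rutgers/Inria HoloLens study.
# The cloud figure is optimistic: the study reports "80-100 ms or more".
# Ordered from most remote to closest.
TIERS = (
    Tier("cloud", 100.0),
    Tier("edge server", 10.0),
)


def place(budget_ms: float, tiers: Sequence[Tier] = TIERS) -> Optional[str]:
    """Return the most remote tier that meets the budget, or None if none does.

    Preferring the most remote qualifying tier is a hypothetical policy,
    standing in for "use the cloud when latency allows it".
    """
    for tier in tiers:
        if tier.worst_case_rtt_ms <= budget_ms:
            return tier.name
    return None


if __name__ == "__main__":
    # Illustrative budgets only (hypothetical values, not from the bulletin).
    print(place(500.0))  # latency-insensitive job -> cloud
    print(place(20.0))   # interactive AR-style job -> edge server
    print(place(1.0))    # sub-millisecond target -> None
```

With the illustrative 20 ms budget only the edge server qualifies. A budget around 1 ms, like the 5G use cases Ron mentions, is beyond both measured tiers, which is the point of pushing compute closer still.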

Architectures from the cloud to the edge

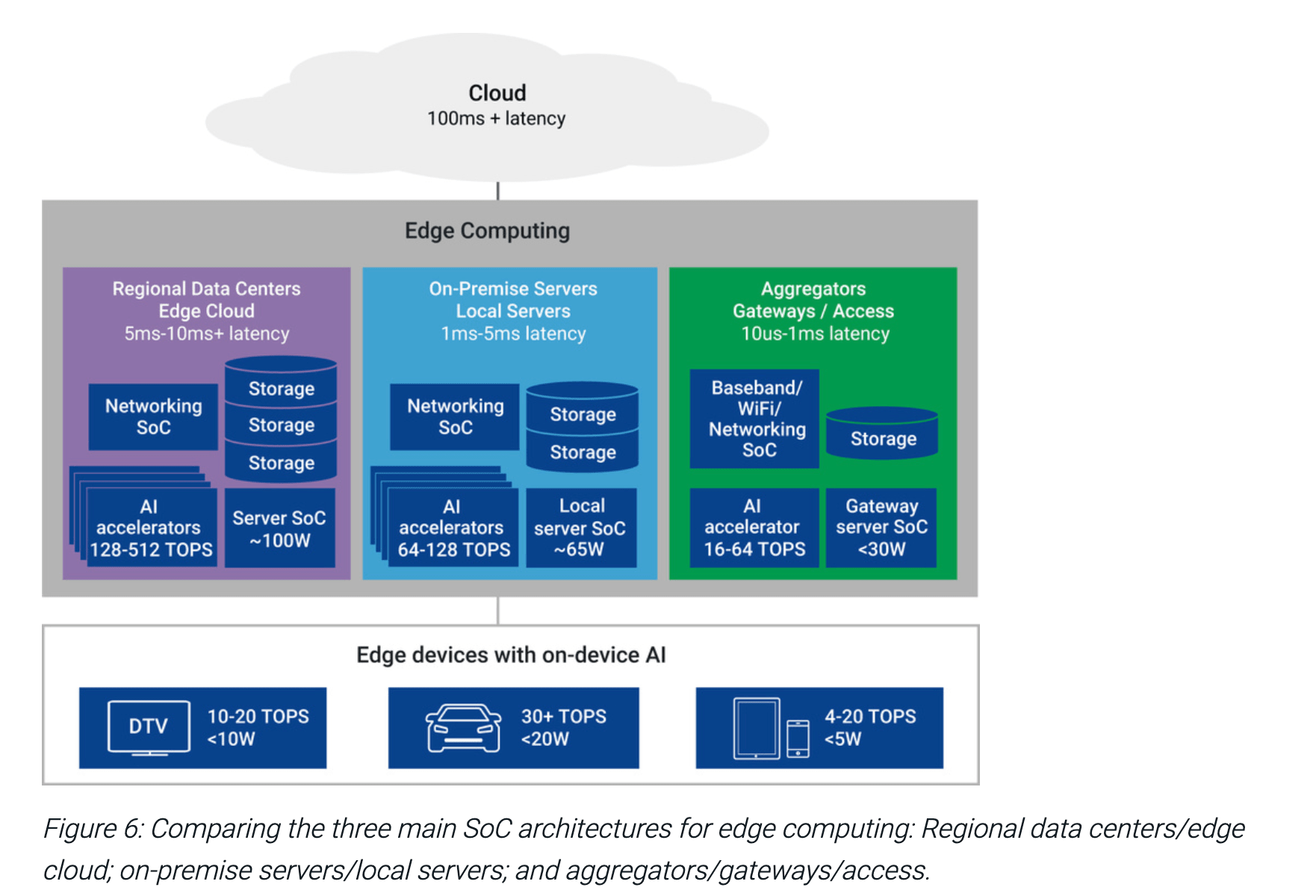

Ron then describes three different architectures for edge computing, in a way I found novel. He treats the edge as anything other than the cloud, drawing on use models and architectures from a number of sources. At the top end are regional data centers. More locally there are on-premise servers (perhaps in a factory or on a farm), and more locally still there are aggregators/gateways. Each tier has its own performance and power profile.

Regional data centers are scaled-down clouds, with the same capabilities but lower capacity and power demand. For on-premise servers, he cites the example of Chick-fil-A, which has these servers in its restaurants to gather and process data for optimizing local kitchen operations.

He sees the aggregators/gateways as performing quite limited functions. I get the higher-level steps in this architecture; however, I’ve seen this hierarchy extend further, right down into the edge device, even into battery-operated devices. Take a voice-activated TV remote: I know of remotes in which voice activation and trigger-word recognition happen inside the remote itself. Ron’s view still looks pretty good; it may just need one more level, and perhaps an allowance for the gateway doing a bit more of the heavy lifting (command recognition, for example).

He wraps up with a discussion of the impact on SoC architectures and on the IP that goes into server SoCs and AI accelerators. I agree with his point that the x86 vector neural network extensions probably aren’t going to make much of a dent. After all, Intel acquired Nervana (and now Habana) for a reason. More generally, AI accelerator architectures are exploding, very much in support of vertical applications from the extreme edge to 5G infrastructure to the cloud. AI is finding its place across this whole spectrum, in every form of edge and non-edge compute.

You can read the technical bulletin HERE.

