Wikipedia: “In chaos theory, the butterfly effect is the sensitive dependence on initial conditions in which a small change in one state of a non-linear system can result in large differences in a later state.” In other words, a butterfly flaps its wings in Argentina and the path of an immense tornado in Oklahoma is changed some time later.

In 1980, IBM undertook a very secret project. They had decided to develop a personal computer. Apple Computer was making a killing in the personal computer market. (See my upcoming weeks #13 and #14 dealing with Apple.) IBM owned the big computer market. They weren’t about to allow upstart Apple to horn in on their territory! Normal IBM policy was to design their products in a central design group in New York and to use primarily IBM-manufactured ICs. They recognized that sticking to this policy would slow things down. They didn’t want to go slowly. They wanted to announce the product in the summer of 1981. They formed a task force in Boca Raton, Florida, working under a lab manager named Don Estridge. The task: get a personal computer on the market and do it by August 1981. Use outside ICs. Use outside software. Do whatever it takes, but get it out on time!!! And keep it secret!!!

Meanwhile, Intel was in a tough place. The memory market was already extremely competitive. (See my week #6, “Intel let there be RAM”.) The microprocessor market was becoming so as well. Seemingly every company was offering its own version of a microprocessor. (At AMD we were a microprocessor partner of Zilog, which was offering a 16-bit microprocessor called the Z8000.) Over the past decade Intel, having introduced the first commercially successful DRAM and microprocessor, had gone from controlling the market to being just one of the pack. They didn’t like that! They created Operation Crush, a massive project aimed at regaining domination in the microprocessor space. Bill Davidow managed the effort. Andy Grove supported it strongly via a message to the field sales organization saying essentially, “If you value your jobs, you’ll produce 8086 design wins”.

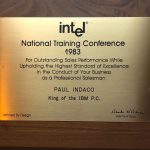

Paul Indaco (now the CEO of Amulet Technologies) was a young kid just out of school. He was working at Intel in the Applications Department. As part of a rotational program (common in those days), he was sent out into the field to learn the selling side of the business. As luck would have it, he ended up in the Intel sales office in Fort Lauderdale, Florida. The custom was (and I’d imagine still is) to give the new guy the account scraps that didn’t much matter while the experienced guy kept the important accounts. So Earl Whetstone, the existing salesman in the office, took the accounts to the south of Fort Lauderdale and Indaco got the less important ones to the north. One of the accounts that “didn’t matter” was IBM Boca Raton. How could IBM “not matter”? Because Boca Raton was not a design site. That is, it wasn’t where decisions regarding what parts to use were made. Those decisions always came down from Poughkeepsie. Or so everyone thought.

One day not long after Indaco had moved to Florida, he happened to be talking with a salesman from his distributor (Arrow). “Oh. By the way. An IBM guy asked me today for some info on the 8086. He works in some secretive new group. He didn’t say why he wanted to know.” With nothing better to do, Paul got the name and number and called the guy.

Yes. It turned out that IBM was up to something. They wouldn’t say what it was. That was top secret. But they said they were in a huge hurry trying to make a very short deadline. They said that they had more or less decided to go with a Motorola processor (probably the 68000), but they conceded that they might be willing to take a quick look at the Intel 8086 along the way. That wasn’t good for Intel. It was generally acknowledged that the Motorola 68000 was technically superior to the 8086. It looked like a longshot for Intel, and they weren’t even sure what they were shooting at.

Intel had a few advantages, though. The first was their development system: the 8086 in-circuit emulator. It was better than what Motorola had to offer. That would be helpful in speeding up the design and software debugging process. Given the tight deadline, that could be important! Paul loaned them one. Then came good news. The IBM engineer soon said something like, “Hey, I like this development system, would you loan me another one?” The Intel policy was one loaner per customer. The issue was clear, though: “Any work they do on an Intel development system applies to Intel only, so let’s help them do a lot!” So Paul talked with Arrow, which happily agreed to loan three more. IBM often needed help on site from the Intel FAE. The project was so secretive, though, that when the FAE went to help with the emulation work, the emulator was separated from the rest of the lab by curtains. All he could see was the door, the emulator, and the curtains. IBM would escort him in, he would solve the problem, and then IBM would escort him out.

Intel had three other advantages: Bill Davidow, Paul Otellini, and Andy Grove. Those were good advantages to have!! Bill ran Intel’s microprocessor division, Paul ran Intel’s strategic accounts, and Andy ran Intel. They wanted this win! Operation Crush was in full force! Any number of issues had to be solved. Among them was the ever-present issue of needing to beat Motorola. And of course, there was the issue of pricing. IBM wanted a price in the neighborhood of one half the current 8086 ASP. Then the Intel team had an epiphany! Why not switch from the 8086 to the 8088? (The 8088 was a version of the 8086 with an 8-bit external bus.) Pricing would be less of an issue with the 8088, and IBM might like it because it would speed up the design cycle. Why? Because Intel had a complete family of 8-bit peripherals, which would eliminate the time required to design the functions that the peripherals handled. The available peripherals would not only speed up the project, they’d also reduce the number of components required to do the job. Neither the 68000 nor the 8086 had a complete family of peripheral chips at that time. In the end the Indaco/Whetstone/Otellini/Davidow/Grove team pulled out a victory. Even after they won, though, they didn’t know what they had won until the day IBM announced. The design win report that Indaco filed listed a win in a new IBM “Super intelligent terminal”.

It ended up being the most important design win in semiconductor history.

What does this have to do with butterflies and chaos theory? Intel is the biggest semiconductor company in the world. To a great extent that is due to the IBM design win. I wonder what company would be biggest if Indaco hadn’t happened to be talking with the Arrow salesman that day? What if the Arrow guy had happened to talk with a Zilog salesperson or an AMD salesperson instead? Or one from National or Motorola or Fairchild?!!! The world might be very, very different!

Grove went on to be Time Magazine’s Man of the Year in 1997. Otellini went on to be CEO of Intel for eight years, from 2005 to 2013. Davidow went on to become a very successful venture capitalist with the distinction of leading one of Actel’s financing rounds. They all ended up well.

But Indaco has them topped. He went on to become Actel’s Vice President of Sales!!

Next week: Steve Jobs

Picture #1. The plaque awarded to Paul Indaco for winning the IBM PC design.

Picture #2. Paul Indaco holding his plaque earlier this year.

See the entire John East series HERE.