Time to Change our Thinking:

It is time to work the other side of the communications equation: send less data. By applying new synthetic data science and machine learning, we can get more and richer information in a way that requires the transfer of radically fewer actual bits (perhaps 1 or 2% of the bits) while delivering higher model fidelity. The time for AI-based Data Twinning has arrived.

The Conundrum:

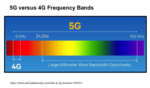

If you make cars smart enough to support comms-free autonomy, then you’ve more than doubled, and perhaps tripled, the price of basic transportation. If you rely on comms for off-board intelligence, then autonomous mobility is geo-fenced to areas that have brilliant LTE broadband and 5G coverage without “LTE-Brownouts,” “Not-Spots,” or “LTE deserts.”

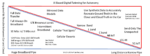

The graph above shows how LTE broadband throughput varies at a stationary position over the course of a day. Meanwhile, Low Earth Orbit (LEO) mobile satellite broadband, offered as an overlay solution for OEM vehicles, is struggling to get to market. Not a happy situation.

The Data Requirement:

The data communications requirement for a fleet of 100 million autonomous vehicles, as estimated by the Automotive Edge Computing Consortium (AECC)[1], will be measured in thousands of exabytes per month, with latency requirements that vary by application from weeks to minutes to seconds. The data demand of the millions of additional AVs added each year will swamp any communication system. Even if you gave away the autonomous vehicle application and all the associated hardware, the cost of the data would make autonomy affordable only for the wealthy.

The Big Idea: Shrink the pipe but increase fidelity

Sending radically fewer actual bits, perhaps 1 or 2% of the bits, while getting more and richer information opens up non-broadband communications technologies designed to cover wide areas and long distances, such as NB-IoT and LTE-M. These systems are less intrusive on vehicle design than today’s Ku-band satellite antennas, and their terrestrial towers are already in place. For hard-to-reach places, LEO L-band antennas are already integrated into shark-fin antennas. Profoundly fewer bits transmitted means profoundly smaller data bills, and happier OEMs and consumers.
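To make the scale concrete, here is a back-of-envelope sketch (our illustration, not a calculation from the AECC paper) that converts a fleet-wide monthly volume into a per-vehicle volume and an average bit rate, then applies the 1% and 2% reductions. It assumes 1,000 exabytes per month (the low end of “thousands”), decimal SI units, and a 30-day month; `per_vehicle_budget` is a hypothetical helper name.

```python
# Back-of-envelope sketch: per-vehicle data volume and average bit rate,
# before and after transferring only a small fraction of the bits.
# Assumptions (not from the AECC paper): 1,000 EB/month fleet-wide (the low
# end of "thousands of exabytes"), decimal SI units, and a 30-day month.

EXABYTE = 10**18                      # bytes, SI (decimal) definition
SECONDS_PER_MONTH = 30 * 24 * 3600    # 30-day month


def per_vehicle_budget(fleet_bytes_per_month: float, vehicles: int,
                       fraction: float = 1.0) -> tuple[float, float]:
    """Return (bytes per vehicle per month, average bits per second)
    when only `fraction` of the original bits are transferred."""
    bytes_per_vehicle = fleet_bytes_per_month / vehicles * fraction
    avg_bps = bytes_per_vehicle * 8 / SECONDS_PER_MONTH
    return bytes_per_vehicle, avg_bps


if __name__ == "__main__":
    fleet = 1_000 * EXABYTE
    fleet_size = 100_000_000
    for frac in (1.0, 0.02, 0.01):
        vol, bps = per_vehicle_budget(fleet, fleet_size, frac)
        print(f"{frac:>5.0%} of bits: {vol / 1e9:,.0f} GB/month, "
              f"{bps / 1e3:,.1f} kbit/s average")
```

Under these assumptions the full requirement works out to about 10 TB per vehicle per month, roughly 31 Mbit/s around the clock; at 1% it drops to about 100 GB per month and roughly 309 kbit/s on average. Peak rates will be higher than these averages.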

Data Twinning: shrink the pipe, increase the fidelity

The solution to this conundrum is to remove the requirement for always-on full broadband and for full transfer of data from the vehicle to the cloud and back to the vehicle. Instead, set data transfer at the lowest possible level and then focus on mirroring the highest-quality, physically accurate simulation data in both the cloud and the car. Send enough data to confirm the expected, and send complex data for the unexpected. The rest of reality is recreated synthetically on both ends using visual and non-visual (LiDAR, RADAR) modeling. The more data is twinned, the smaller the pipe needs to be. Eventually, it is very small.

The graph above illustrates that the more data is twinned, the smaller the pipe needed to keep the twin fresh.
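The rule “confirm the expected, send the unexpected” resembles dead reckoning in networked simulation: both ends run an identical model, and the sender transmits full state only when reality departs from the shared prediction by more than a tolerance. The sketch below assumes a hypothetical constant-velocity twin of a single tracked object, a 1-byte confirmation, and a 16-byte full update; the names `Twin`, `car_step`, and `cloud_step` are illustrative, and this is not a description of any vendor’s implementation.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

State = Tuple[float, float, float, float]   # x, y, vx, vy

CONFIRM_BYTES = 1    # hypothetical size of an "as expected" message
FULL_BYTES = 16      # hypothetical size of a full update (four float32 values)


@dataclass
class Twin:
    """Shared model kept identically in the car and in the cloud
    (hypothetical constant-velocity model of one tracked object)."""
    x: float
    y: float
    vx: float
    vy: float

    def predict(self, dt: float) -> Tuple[float, float]:
        return self.x + self.vx * dt, self.y + self.vy * dt

    def advance(self, dt: float) -> None:
        self.x, self.y = self.predict(dt)

    def set_state(self, s: State) -> None:
        self.x, self.y, self.vx, self.vy = s


def car_step(twin: Twin, obs: State, dt: float, tol: float) -> Optional[State]:
    """Car side: return None to confirm the prediction, else the full state."""
    px, py = twin.predict(dt)
    if abs(obs[0] - px) <= tol and abs(obs[1] - py) <= tol:
        twin.advance(dt)        # expected: both ends extrapolate
        return None
    twin.set_state(obs)         # unexpected: both ends resynchronise
    return obs


def cloud_step(twin: Twin, msg: Optional[State], dt: float) -> None:
    """Cloud side: apply exactly the same update the car applied."""
    if msg is None:
        twin.advance(dt)
    else:
        twin.set_state(msg)


def simulate(steps: int, dt: float = 0.1, tol: float = 0.05) -> Tuple[int, int, bool]:
    """Return (bytes sent, bytes if every step were a full update, twins in sync)."""
    x, y, vx, vy = 0.0, 0.0, 10.0, 0.0
    car, cloud = Twin(x, y, vx, vy), Twin(x, y, vx, vy)
    sent = 0
    for k in range(steps):
        if k == steps // 2:          # one unexpected manoeuvre halfway through
            vx, vy = 0.0, 10.0
        x, y = x + vx * dt, y + vy * dt
        msg = car_step(car, (x, y, vx, vy), dt, tol)
        cloud_step(cloud, msg, dt)
        sent += CONFIRM_BYTES if msg is None else FULL_BYTES
    return sent, steps * FULL_BYTES, car == cloud


if __name__ == "__main__":
    print(simulate(100))
```

In this run the object turns once, so the car sends one full update and 99 confirmations: 115 bytes instead of 1,600, and both twins end in the same state. Real sensor data is noisy, so the tolerance trades bandwidth against how far the twin may drift before it is corrected.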

Data Twinning requires more than visual images

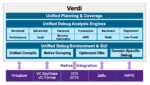

Companies like Rendered.ai have combined physics-based synthetic data and AI. This means that the AI is not trained on objects from a game-engine model of the world, but on physics-based synthetic data. What matters here is that these physics-based objects have accurate light-transfer characteristics and fully ray-traced caustics, which improve realism and deliver ground truth. As a result, the synthetic data can be used for machine learning, for test data sets, and for twinning.

This approach also allows physically accurate simulation of non-visual data (LiDAR, RADAR including active scanning and SAR, IR, and ultrasound). Full-wave electromagnetic simulations of RADAR and of other common and novel sensors give a richer picture of the ground truth than visual images alone. Vehicles are sensor-rich environments, and data from other vehicle sensors can be repurposed for twinning applications.
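As a much simpler illustration of physics-based, non-visual synthetic data (nowhere near the full-wave RADAR simulation described above), the sketch below generates a synthetic LiDAR scan of flat ground by ray casting: range follows from geometry, and return intensity uses the common extended-target approximation, proportional to reflectivity times the cosine of the incidence angle divided by range squared. The sensor height, beam angles, and reflectivity of 0.3 are assumed values, and `ground_plane_scan` is a hypothetical name.

```python
import math
from typing import List, Optional, Tuple


def ground_plane_scan(sensor_height: float,
                      depression_deg: List[float],
                      reflectivity: float = 0.3) -> List[Optional[Tuple[float, float]]]:
    """Synthetic LiDAR returns from a flat, Lambertian ground plane.

    Each beam is given by its depression angle below horizontal (degrees).
    Returns (range_m, relative_intensity) per beam, or None if it never hits.
    """
    scan: List[Optional[Tuple[float, float]]] = []
    for angle in depression_deg:
        theta = math.radians(angle)
        if theta <= 0.0:
            scan.append(None)      # horizontal or upward beam: no ground return
            continue
        rng = sensor_height / math.sin(theta)    # ray/plane intersection distance
        cos_incidence = math.sin(theta)          # beam vs. ground-normal angle
        intensity = reflectivity * cos_incidence / rng ** 2   # extended-target falloff
        scan.append((rng, intensity))
    return scan


if __name__ == "__main__":
    angles = [0.0, 5.0, 15.0, 30.0]
    for angle, ret in zip(angles, ground_plane_scan(2.0, angles)):
        print(angle, ret)
```

For this geometry intensity scales with the cube of the sine of the depression angle, so shallow beams land farther away and return far weaker signals; a physics-based generator reproduces such effects consistently across every sensor it models.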

The Point:

Synthetic visual and non-visual data, combined with vehicle data, can accurately recreate the ground truth in the cloud and the network truth in the vehicle at the same time, providing safe autonomy in both high- and low-connectivity environments.

So, who is pioneering this radical approach to data transfer?

Companies like Rendered.ai (www.rendered.ai) work in concert with existing AI simulation tools and data repositories to add novel data types. These additions accelerate AI efforts by improving data labeling and fortify AI against edge conditions, concept drift, and model decay. The package integrates new data types from vehicle and highway-based sensors and measures their efficacy. Additionally, using a data-science-friendly, cloud-native workflow, Rendered.ai integrates visual and non-visual data and gives data scientists better control of simulations, for safer autonomy and more robust data insights.

Image courtesy of Rendered.ai

[1] Automotive Edge Computing Consortium (AECC), “General Principle and Vision,” white paper, January 2020. https://aecc.org/

Tom Freeman

I have spent the better part of the last 10 years working on satellite broadband to the vehicle and all its associated challenges. Changes in the ground- and space-segment landscape over the last quarter fed my growing anxiety that even if we committed every bit of deployed, planned, and dreamt-of LEO capacity to vehicle communication, it would not be enough. Enough for infotainment perhaps, but not for the core cloud-to-vehicle-to-cloud throughput that AVs consume for networking, cruise assist, HD mapping, and everything else.