-ASML weakness is evidence of deeper chip down cycle

-When ASML sneezes other chip equip makers catch a cold

-Will backlog last long enough? Will EUV demand hold up?

-“Unthinkable” event, litho cancelations, could shock industry

ASML has in-line quarter but alarm bells ring on wavering outlook

ASML reported €6.7B in revenue and EPS of €4.96, both more or less in line with expectations. Reports out of Asia of order slowdowns and cancelations at TSMC, and potentially others, had sent the stock down ahead of today’s earnings.

Those rumors seem at least partially true, if not fully true, as ASML talked about order book rearrangements and a softening outlook.

ASML’s backlog, which has been viewed as solid as a rock, extends out to mid-2024 but now appears to be weakening in the second half of 2024. Right now the order book is not full for the second half of 2024, but management expects (hopes) it will fill.

A defensive conference call

The tone of the conference call was not the normal bullish bravado of a market-dominating monopoly. Instead, management sounded much more defensive, both about weakening prospects and in defending its outlook, and that tone was perhaps more concerning than the comments themselves.

Thinking the “unthinkable”: litho cancelations & pushouts

It has long been thought in the industry that no chip maker in their right mind would cancel or delay a litho tool, for fear of having to get back on the end of a very long line later and ending up in a much worse position.

That fear seems to have gone away, as there is clearly movement in ASML’s order book, with some tools pushed out and other customers pulled in to fill the otherwise empty slots.

We would have also expected ASML to have already been sold out for 2024 by now but they are only booked halfway through the year with some uncertainty about the second half.

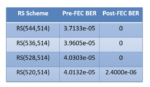

As with the overall chip industry itself, trailing edge technology appears to be holding up better, as DUV demand seems good (maybe better in some ways than EUV). One of the issues is that much of the DUV demand is coming out of China, which puts even that demand at risk due to embargo issues.

In short the order book has softened from a virtual rock to quivering Jello. ASML will be fine but other equipment makers will fare far worse. When ASML sneezes other equipment companies catch a cold.

ASML will likely skate through the down cycle with enough of a backlog to make it to the other side without seeing their earnings and financials take a hit. Their backlog and monopolistic position will help protect the company as we doubt there will be much actual downside impact before the down cycle turns. So they remain the strongest, safest ship in the current semiconductor storm.

Other equipment makers not so much

If you don’t buy litho tools, you need fewer of the other tools: less deposition, less etch, less yield management, etc. Litho tools are the locomotive that pulls the semiconductor equipment train along, with the caboose being assembly and test.

After ASML, the company that typically has almost as strong a backlog is KLAC, whose yield management tools are needed to support all those new litho tools driving to smaller feature sizes. KLAC’s backlog in some tools is multi-year in nature, and its business model and “steady Eddie” performance are based on working from a backlog position. We would expect similar softness in KLAC’s backlog, likely worse than ASML’s, as you don’t need the KLAC yield management tools if you don’t have, or have delayed, the ASML litho tools.

The same obviously goes for both AMAT and LRCX, which typically run with even less backlog than KLAC and are usually more of a “turns” business during “normal” times.

Semiconductor makers are voting with their feet (capex budgets)

It’s clear that chip makers such as TSMC, Intel, Samsung and others must feel that demand is not getting better any time soon if they are willing to delay critical litho tools. This suggests a deeper, longer semiconductor downturn than currently or previously thought. If you think the industry will “bounce back”, you are not going to delay a new litho tool. This has very ominous repercussions across the industry, as it belies the view of a quick recovery.

Is this a “second leg down” in the semiconductor down cycle?

Our long-held approach to the semiconductor industry is to look at things through two distinct components: supply on one side and demand on the other.

In our view, it is very clear that the “first” leg down in the current down cycle was primarily supply-side driven, as the industry built capacity like crazy after it was caught short through the Covid and supply chain crisis. The industry built and built, with obviously reckless abandon, until we “overshot” the needed supply and found ourselves in the oversupply condition that started the current down cycle.

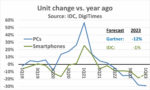

Meanwhile, the demand side has softened, and the downward pressure on demand has perhaps accelerated somewhat along with global macroeconomic concerns.

It feels to us that there is a high likelihood of a “second leg” to the current chip cycle, driven more by weakening demand than the first leg, which was driven by oversupply.

This could potentially be worse, as supply issues tend to be easier to fix than demand issues. If you are a chip maker you can control supply, as we have seen in the memory market with Micron and Samsung taking capacity and product offline to support pricing.

The problem is that there isn’t that much that the industry can do to stimulate demand for chips. Lowering pricing on chips used in cars doesn’t stimulate demand.

The stocks

Chip stocks have been on a roll since the beginning of the year and, in our view, have become prematurely “overheated”. Many investors have wrongly thought that we were at a bottom in the industry and that it was time to buy back in, as it could only get better from here.

That thought process was not unreasonable: in prior down cycles we saw a bottom after a few quarters, it was a signal to buy, and it turned out well. That may not be the case this time if it was more of a “false bottom”, created by supply cutbacks, that then fell apart when demand weakened, creating a further drop.

If the current down cycle were just a supply-side issue, chip makers would not delay or cancel litho plans. This is clear evidence that chip makers are bracing for a longer, deeper downturn, with demand-side concerns on top of the prior supply-side concerns.

2023 is clearly not a recovery year. We think that the typical, unsubstantiated hopes for a second half recovery in 2023 will likely fade as chip companies report and analysts figure it out. The bigger question now becomes when, or if, in 2024 we will start to see a recovery.

Fab projects and equipment will be pushed out much further, especially in the very oversupplied memory space. The main point of light in the industry remains non-leading-edge semis, which have been saving many in the industry, including DUV for ASML.

ASML is certainly not a good beginning to earnings season for chips and will likely send a much-needed chill through the chip stock market.

About Semiconductor Advisors LLC

Semiconductor Advisors is an RIA (a Registered Investment Advisor), specializing in technology companies with particular emphasis on semiconductor and semiconductor equipment companies. We have been covering the space longer and been involved with more transactions than any other financial professional in the space. We provide research, consulting and advisory services on strategic and financial matters to both industry participants as well as investors. We offer expert, intelligent, balanced research and advice. Our opinions are very direct and honest and offer an unbiased view as compared to other sources.
