SLAM – simultaneous localization and mapping – is critical for mobile robotics and VR/AR headsets among other applications, all of which typically operate indoors where GPS or inertial measurement units are either ineffective or insufficiently accurate. SLAM is a chicken and egg problem in which the system needs to map its environment to determine its place and pose in that environment, the pose in turn affects the mapping, and so on. Today there are multiple ways to do the sensing for SLAM, among which you’ll often hear LIDAR as a prime sensing input.

But LIDAR is expensive, even for newly emerging solid-state implementations and it’s still not practical for VR/AR. Also often overlooked for industrial robots, say for warehouse automation applications, is that the proofs of concept look great but current costs are way out of line. I recently heard that Walmart ordered a couple of these robots at $250k each – nice for exploring the idea, maybe even building warehouse maps, but not to scale up. Practical systems obviously have to be much cheaper in purchase, maintenance and down-time costs.

Part of reducing that cost requires switching to low-cost, low-power sensing and fast, low-power SLAM computation. Sensing might be a simple monocular or RGBD camera, so this becomes visually-based SLAM, which appears to be the the most popular approach today. For the SLAM algorithm itself, the best-known reference is ORBSLAM, designed unsurprisingly for CPU platforms. The software is computationally very expensive – just imagine all the math and iteration you have to do in detecting features in evolving scenes while refining the 3D map. That leads to pretty high power consumption and low effective frame-rates. In other words, not very long useful life between charges and not very responsive mapping, unless the robot (or headset) doesn’t move very quickly.

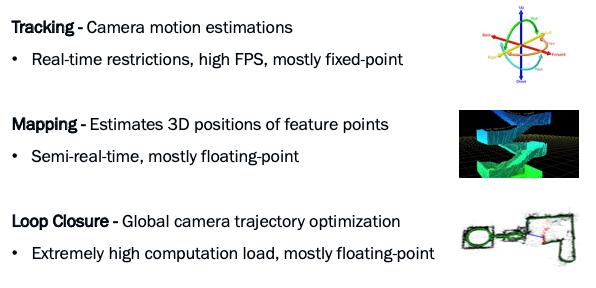

There are three main components to SLAM: tracking, mapping and loop closure. Tracking estimates the camera location and orientation. This has to be real-time responsive to support high frame rates (30FPS or 60FPS for example). This component depends heavily on fixed point operations for image processing and feature detection, description and matching (comparing features in a new frame versus a reference frame). Features could be table edges, pillars, doors or similar objects or even unique markers painted on the floor or ceiling for just this purpose.

Mapping then estimates the 3D positions of those feature points; this may add points to the map if the robot (or you) moved or the scene changed, and will also refine existing points. Computation here has to be not too far off real-time, though not necessarily every frame, and is mostly a lot of transformations (linear algebra) using floating-point data types.

In the third step, loop closure, you return to a point you have been before, and the mapping that you built up should in principle show you at that same point. It probably won’t, exactly, so there is opportunity for error correction. This is a lot more computationally expensive than it may sound. Earlier steps are probabilistic so there can be ambiguities and mis-detections. Loop closure is an opportunity to reduce errors and refine the map. This stage also depends heavily on floating-point computations.

So you want fast image processing and fixed-point math delivering 30 or 60FPS, a lot of floating-point linear algebra, and yet more intensive floating-point math. All at the low power you need for VR/AR or low-cost warehouse robots. You can’t do that in a CPU or even a cluster of CPUs. Maybe a GPU but that wouldn’t be very power-friendly. The natural architecture fit to this problem is a DSP. Vector DSPs are ideally suited to this sort of image processing, but a general-purpose solution can be challenging to use.

Why? Because a lot of what you are doing works with small patches around features in the captured (2D) images. These patches are very unlikely to nicely localize themselves into consecutive locations in memory, making them vector-unfriendly. For similar reasons, operations on these features (eg a rotation) become sparse matrix operations, also vector-unfriendly. This means that conventional cache-based approaches to optimization won’t help. You need an application-specific approach using local memory to ensure near-zero latency in accesses, which requires carefully-crafted DMA pre-fetching based on an understanding of the application need.

This works but then you have to be careful about fragmenting the image thanks to the inevitably limited size of that local memory which can, among other things, lead to a need to process overlaps between tiles, actually increasing DDR traffic. Finally, coordination with the CPU adds challenges, in sharing memory with the CPU virtual memory, in managing data transfers and in control, sync and monitoring between the CPU and DSP.

CEVA has added a SLAM SDK to their Application Developer Kit (ADK) for imaging and computer vision, designed to run with their CEVA-XM and NeuPro processors. The SLAM SDK enables an interface from the CPU allowing you to offload heavy-duty SLAM building-blocks to the DSP. Supplied blocks include feature detection, feature descriptors and feature matching, linear algebra (matrix manipulation and equation solving), fast sparse equation solving for bundle adjustment and more.

The ADK also includes a standard library of OpenCV-based functions, CEVA-VX which manages system resource requirements, including those tricky data transfers and DMA optimizations to optimize memory bandwidth and power, and an RTOS and scheduler for the DSP which abstracts and simplifies the CPU-DSP interface.

All good stuff, but what does this deliver? Talking to Danny Gal (Dir of computer vision at CEVA), I learned that the CEVA-SLAM SDK, running a full SLAM tracking module with a frame size of 1280×720 on the CEVA-XM6 DSP at 60 frames per second consumes only 86mW in a TSMC 16nm process. So yeah, it seems like this approach to SLAM is a big step in the right direction. You can learn more about this HERE.

Share this post via:

Comments

There are no comments yet.

You must register or log in to view/post comments.