At DVCon I sat in on a series of talks on using higher-level abstraction for design, then met Adam Sherer to get his perspective on progress in bringing SystemC to the masses (Adam runs simulation-based verification products at Cadence and organized the earlier session). I have to admit I have been a SystemC skeptic (pace Gary Smith) but I came away believing they may have a path forward.


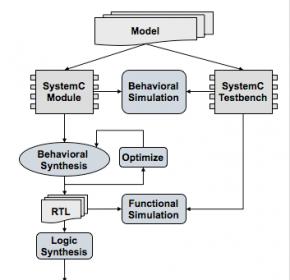

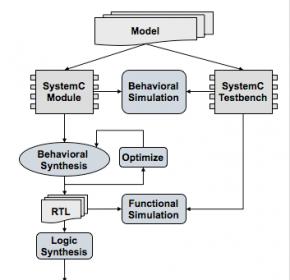

Adam has a nice characterization for what a level of abstraction needs to become successful, which he modestly calls “Adam’s law”. You need tools to develop an abstract model, you need tools to verify the model and you need a path to the next lower level of abstraction, not just for the design data but also for verification. We have this for transistors/gates to GDSII, we have it for RTL to gates, and we are getting closer for SystemC, where we already have modeling and synthesis and we are starting to see a path for verification in the emerging portable stimulus standard and interoperability of SystemC, UVM and ‘e’.

One of the problems I have always had with SystemC was the apparent magical promise of converting software algorithms into hardware. In the earlier session Frederic Doucet of Qualcomm did a great job of dispelling the magic and showing that HLS was doing 3 very understandable (to an RTL-head) things:

- Managing latency by letting you parallelize blocks of an algorithm (run two additions in parallel rather than one after another)

- Letting you trade off resource sharing versus parallelism (sharing one multiplier block versus parallelizing multiple blocks)

- Making it simpler to experiment through tool options rather than explicit structure, by removing sequencing choices from the abstract model
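To make those three points concrete, here is a toy model of the kind of decision an HLS scheduler makes. It is a minimal sketch, not any vendor's HLS algorithm: a plain resource-constrained list scheduler, assuming every operation takes exactly one cycle, run over a hypothetical six-operation dataflow graph for `y = a*b + c*d` and `z = (e+f) + (g+h)`. The names `Op`, `listSchedule` and `makeExample` are illustrative only. The point is that the same untimed description yields different latencies depending only on how many adders and multipliers the scheduler may use.

```cpp
#include <cassert>
#include <vector>

enum class OpKind { Add, Mul };

// One operation in an untimed dataflow graph (hypothetical model).
struct Op {
    const char* name;       // human-readable label, for documentation only
    OpKind kind;            // which functional unit the op needs
    std::vector<int> deps;  // indices of ops whose results this op consumes
};

// Resource-constrained list scheduling, assuming every op takes one cycle.
// At most `adders` additions and `multipliers` multiplications may execute
// in the same cycle. Returns the total number of cycles (the latency).
int listSchedule(const std::vector<Op>& ops, int adders, int multipliers) {
    assert(adders >= 1 && multipliers >= 1 && "need at least one unit of each kind");
    const int n = static_cast<int>(ops.size());
    std::vector<int> cycleOf(n, -1);  // cycle an op was scheduled in; -1 = not yet
    int scheduled = 0;
    int cycle = 0;
    while (scheduled < n) {
        int freeAdd = adders;
        int freeMul = multipliers;
        for (int i = 0; i < n; ++i) {
            if (cycleOf[i] != -1) continue;  // already scheduled
            // Ready only if every producer finished in an earlier cycle.
            bool ready = true;
            for (int d : ops[i].deps) {
                if (cycleOf[d] == -1 || cycleOf[d] >= cycle) { ready = false; break; }
            }
            if (!ready) continue;
            // Claim a free unit of the right kind, or wait for a later cycle.
            int& freeUnits = (ops[i].kind == OpKind::Add) ? freeAdd : freeMul;
            if (freeUnits == 0) continue;
            --freeUnits;
            cycleOf[i] = cycle;
            ++scheduled;
        }
        ++cycle;
    }
    return cycle;
}

// Hypothetical example graph: y = a*b + c*d and z = (e+f) + (g+h).
std::vector<Op> makeExample() {
    return {
        {"t0 = a*b",  OpKind::Mul, {}},
        {"t1 = c*d",  OpKind::Mul, {}},
        {"y = t0+t1", OpKind::Add, {0, 1}},
        {"t3 = e+f",  OpKind::Add, {}},
        {"t4 = g+h",  OpKind::Add, {}},
        {"z = t3+t4", OpKind::Add, {3, 4}},
    };
}
```

With two adders and two multipliers, `listSchedule(makeExample(), 2, 2)` finishes in 2 cycles because the independent additions and multiplications run side by side. Sharing a single adder and a single multiplier, `listSchedule(makeExample(), 1, 1)`, stretches the same description to 4 cycles, and one multiplier with two adders lands at 3. The source graph never changes; only the resource constraint does, which is the kind of knob an HLS flow exposes as a tool option rather than as hand-written structure.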

The Portable Stimulus Working Group (PSWG) is developing a standard which aims to address the verification need. I have talked more about this in other blogs so I won’t repeat that material here. Essentially the goal is to support stimulus which can interoperate or be easily moved between virtual modeling, HLS models, RTL simulation, emulation and hardware prototyping – to the greatest extent feasible. This should ease adoption of the verification part of Adam’s law. PSWG development is still underway and expected to produce a release in early 2017.

There was a good talk from Intel on the realities of using SystemC in production design. Bob Condon made several interesting points:

- Many teams in Intel are using SystemC HLS flows as a part of production design, both for algorithm-dominated and control-dominated designs

- Compelling reasons to switch from RTL to SystemC include time-pressure, a significant amount of new code, a line of sight to a derivative and an existing starting point with some kind of C/C++ model.

- Groups happiest using the approach don’t try to fool with the generated RTL and see a big compression in design and verification time. Groups most unhappy are those that do mess with the RTL. On a related note, satisfied groups felt absolute best QoR was less important (within reasonable bounds) than schedule, which was why they didn’t feel the need to tweak.

- Overall big pluses are faster time to validated RTL and ease of modifying the code. Less prominent are retargeting technologies and sharing code between VP and verification teams.

Dirk Seynhaeve of Intel/Altera gave an interesting talk. Their objective is to expand FPGA appeal to software developers who need to accelerate algorithms, obviously aiming to expand the accessible market. Software developers don’t want to learn hardware design but they do understand how to parallelize threads on multi-processor systems. So Altera is supporting software development in C or C++ with OpenCL for parallelization. Their view is that SystemC is just too big a step for software developers.

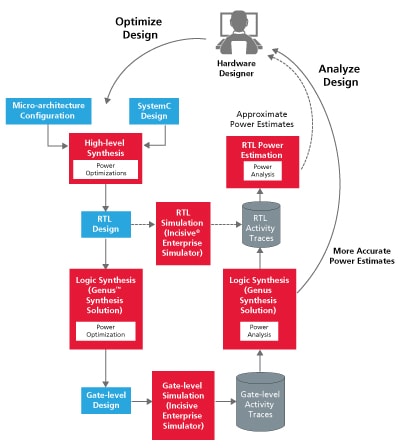

I wrapped up with Adam on directions and drivers for HLS flows. He said we’re still early in SystemC adoption: maybe 10% of the audience in the first session raised their hands when asked if they were users. Outside the Bay Area uptake has been stronger, but Valley engineers have built a lot of expertise in RTL and it will take them longer to change. Quite likely a driver will be power optimization with acceptable performance. Power is most impacted by software but the hooks for optimization have to be in hardware; connecting the two through high-level design is a logical step.

Design sizes and compressed schedules will help. And as Moore’s law slows down, focus on improving algorithm performance is increasing. That will force more experimentation with parallelism, which will be easier in SystemC than in RTL. And he does believe that verification complexity will force more (verification) sharing across levels, again encouraging top-down approaches. Sounds like we should expect a continued gradual transition.

