I attended the Jasper User Group this week, at least the keynotes, the first by Kathryn Kranen the CEO of Jasper and the second by Bob Bentley of Intel.

I attended the Jasper User Group this week, at least the keynotes, the first by Kathryn Kranen the CEO of Jasper and the second by Bob Bentley of Intel.

Kathryn went over some history, going back to when the company was started (under the name Tempus Fugit) back in August 2002 with a single product for protocol verification. Now, since Q3 2010 Jasper has had 10 quarters of profitability and a growth rate of 35% since 2008. The company is private so doesn’t publish real numbers for revenue etc but Kathryn did say that the company just passed the 100 employee mark so you can make your own guesses.

Kathryn went on to talk about the multi-app approach where she feels they have cracked the code. This makes it easier to work with lead customers on specific apps with joint customer/AE/R&D initiatives and then do what she calls massification, making it widely deployable. A new white paper on JasperGold Apps is here.

Bob Bentley told the story of formal verification within Intel. His basic philosophy is that proving correctness is much better than testing for correctness. As Djikstra said in the context of software development, “testing shows the presence of bugs not their absence.” Bob started off giving Intel’s policy of not endorsing vendors and thus saying nothing should be taken that way. In fact Intel use a mixture of internal tools and commercial tools.

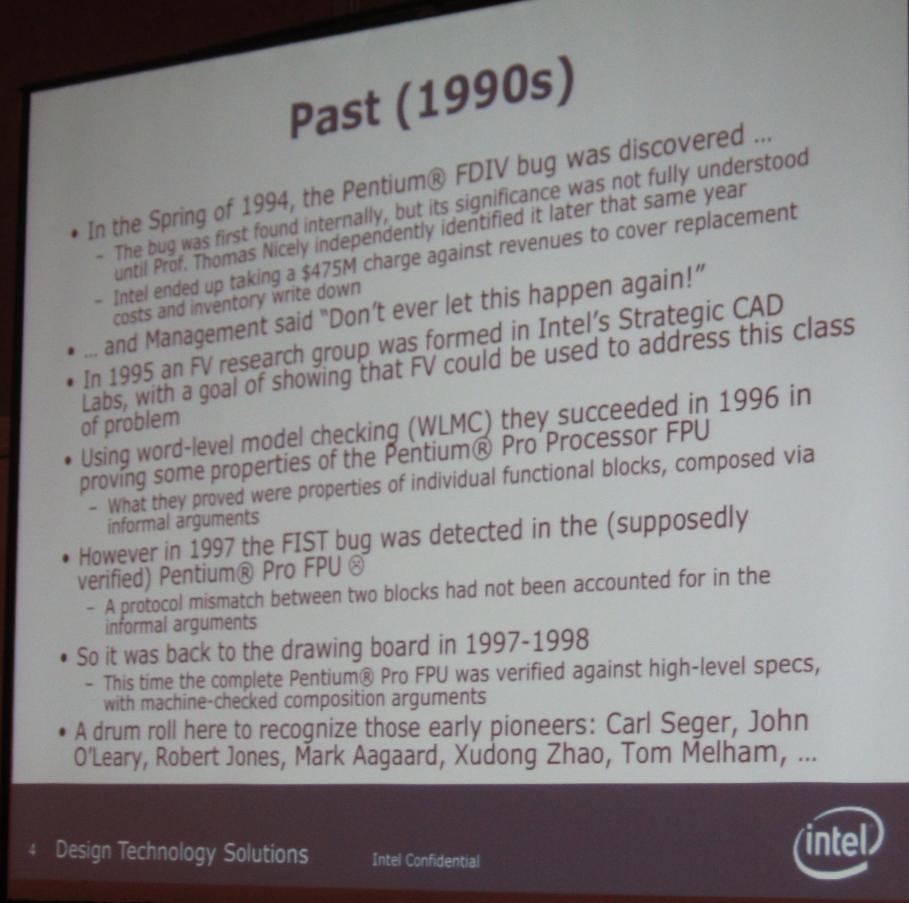

Formal approaches suddenly gained a lot of traction after the 1994 Pentium floating-point divide bug. This caused Intel to take a $475M charge against earnings and management “don’t ever let this happen again”. In 1996 they started proving properties of the Pentium processor FPU.

Then in 1997 a bug was discovered in the FIST instruction (that converts floating point numbers to integers) in the formally verified correct Pentium Pro FPU. It was a protocol mismatch between two blocks not accounted for in the informal arguments. Another escape.

So they went back to square one and during 1997-98 the verified the entire FPU against high-level specs so that mismatches like the FIST bug could no longer escape. During 1997-99 the Pentium 4 processor was verified and there were no escapes.

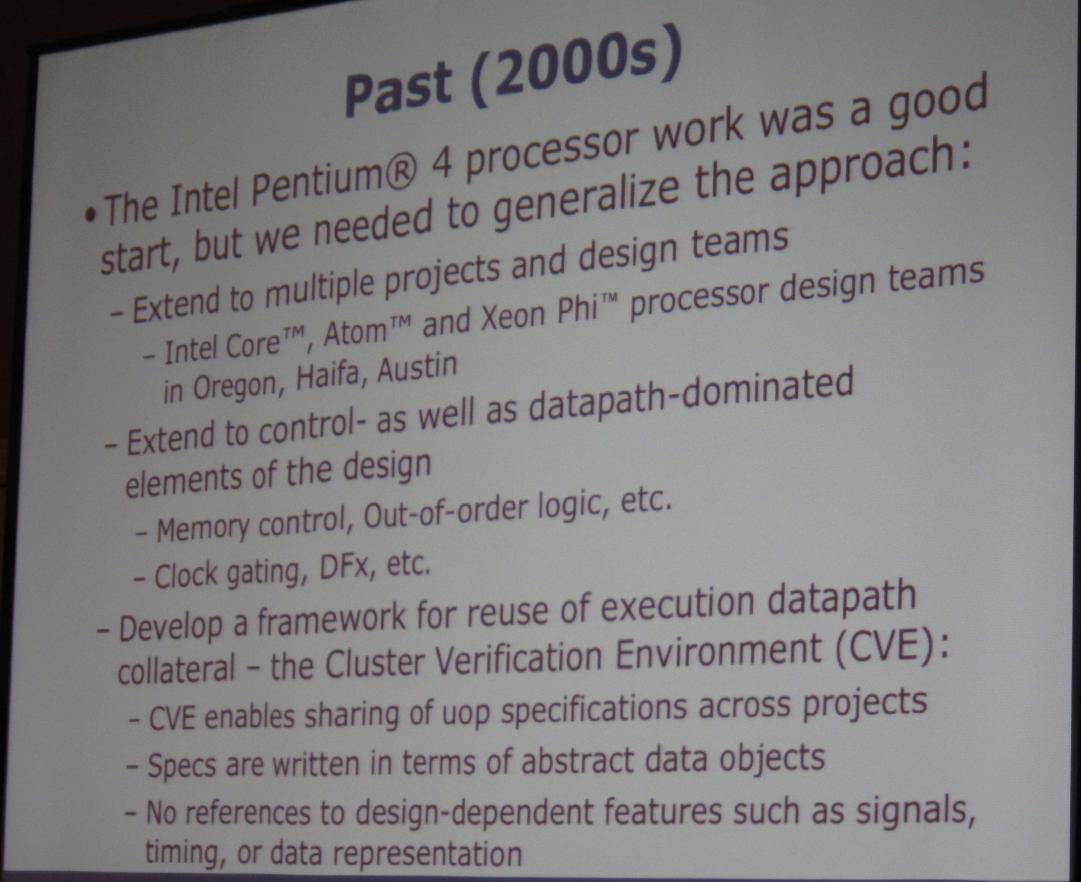

That formed the basis of work done at Intel in the 2000s as they generalized the approach and also scaled it out to other design teams and simplified the approach so that it was usable “by mere mortals” rather than formal verification gods.

They also extended the work to less datapath-dominated parts of designs such as out-of-order instruction logic or clock gating for power reduction.

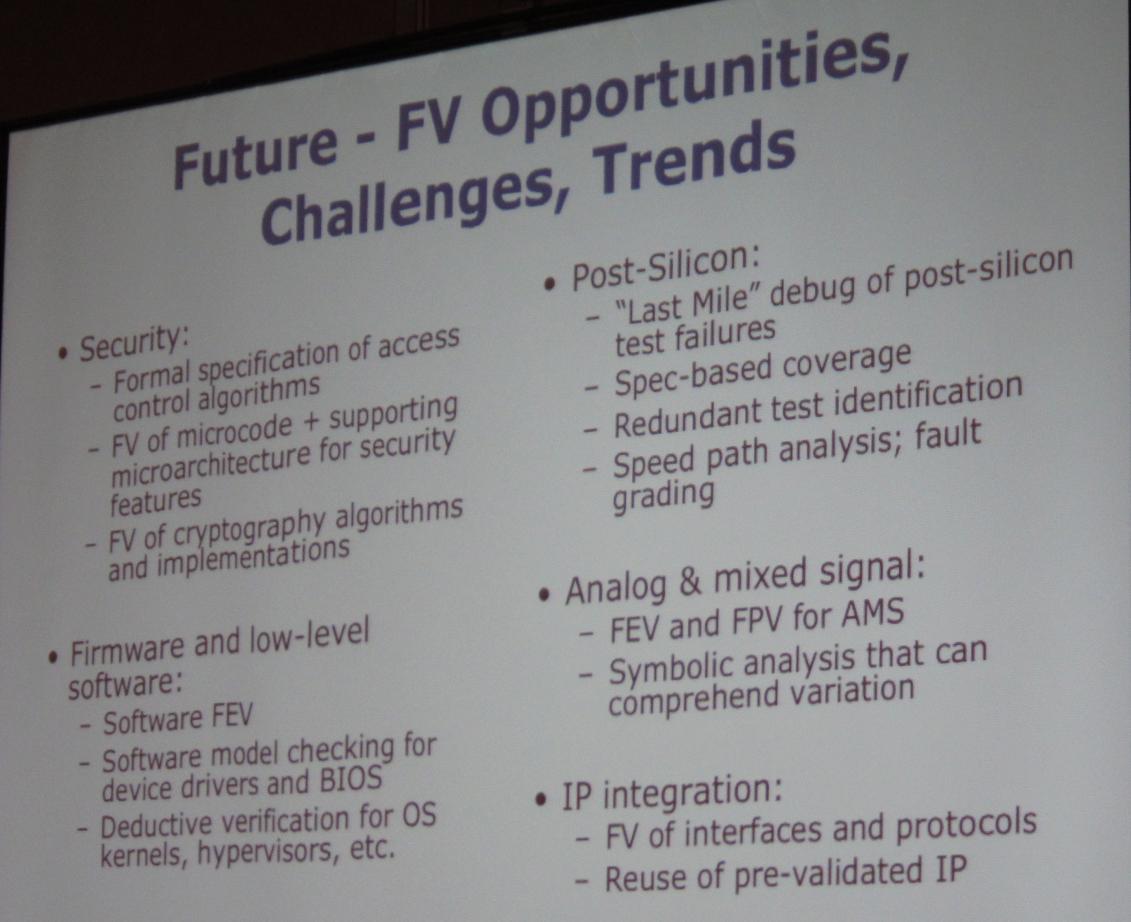

Going forward they want to replace 50% of unit level simulation with formal approaches by 2015. This is a big challenge, of course. This will spread the word and democratize formal as an established part of the verification landscape and systematize it.

Going forward they want to extend the work to formally verifying security features, firmware, improve test coverage while reducing vector counts, do symbolic analysis of analog (including formally handling variation), and pre-validating IP.

CES 2025: All In on AI and Proposed Tariffs