After two years of wrestling with and at least partially resolving fraud charges over its “defeat device” to manipulate emissions testing results, Volkswagen emerged in 2018 as the flag-bearer for electrification in the U.S. The company also concluded 2018 as the largest producer of passenger cars in the world.

In spite of or perhaps because of the jailing of some senior executives, Volkswagen regrouped and announced the most aggressive investment effort in the industry with the intent of ultimately dominating both the electric and autonomous vehicle markets. The company was careful, though, to place its milestones far enough out on the horizon – 2020 – to allow some time to actually achieve them.

Whether or not Volkswagen is able to meet its autonomous and electric vehicle-related objectives by 2020, the company is committed to building a nationwide network of charging stations in the U.S. as is called for by the $14.7B settlement of diesel-related fraud charges. The settlement in the U.S. calls for Volkswagen to create an operation, now called Electrify America, to build a network of charging stations using $2B in settlement funds.

https://vwclearinghouse.org/about-the-settlement/

Electrify America enters the fast charge station market with plans to install chargers at more than 650 community-based sites and approximately 300 highway sites in the U.S. The company is recruiting other auto makers to use its non-proprietary chargers (using CCS, CHAdeMO and J1772 standards).

This effort, only now getting underway, will transform the public’s perception of electric vehicles in a variety of ways.

Electrify America’s strategy includes the concept of charging as a service. The concept is not new, but EA is recruiting automakers to participate in and support the program. Thus far, Audi of America and Lucid Motors have agreed to participate, with others expected to join.

Electrify America will join the growing rush to bring fast charging to neighborhood locations like supermarkets, convenience stores and coffee shops. Slower charging systems are more often found in company or airport parking lots or apartment complexes. Many fast charging stations today are found in remote locations – most notably Tesla’s.

Electrify America is bringing liquid-cooled cables to the fast charging effort in order to deliver the fastest, highest-capacity charge in the market. Bringing such new technology to bear has implications for software compatibility and the requirements of the supporting electrical grid – but EA is presumably resolving these issues.

Electrify America will change the nature and importance of reserving parking spaces. EV and PHEV drivers will want to know if charging station-equipped parking spaces are available, functioning and compatible. Given the range of current charging station programs, payment schemes will need to be sorted out including pay-as-you-go vs. subscription-based charging services.

If successful, EA will be the first car-company-owned network in the U.S. to provide non-proprietary fast charging as a service for competing car companies.

Electrify America is not alone in building out a nationwide charging network. EVgo already has more than 1,100 fast charging stations to EA’s 40 and already offers broad coverage. Together, the two companies will give a substantial impetus to the process of upgrading the existing network of chargers in the U.S. and sprinkling charging locations across the landscape.

With more than 100,000 traditional gas stations in the U.S., it is clear that these are early days for building out fast-charging infrastructure, which currently numbers in the low thousands of locations, including Tesla’s. But the ability to make nearly any parking space a charging location marks a fundamental shift in deployment strategy and also alters the EV ownership equation for consumers.

Just as EV drivers may sometimes have privileged access to HOV lanes on highways, they are also seeing preferential parking privileges. All of these value propositions begin to add up for consumers – along with the peppier performance of electric vehicles themselves.

In the end, Volkswagen may have found its green mojo in the eye of the diesel-gate storm. Most notable of all is that the opportunity arrives in the one market in the world – the U.S. – where it has consistently underperformed for several decades. This expectation of a U.S. turnaround may be behind LMC Automotive’s forecast that VW will continue to dominate global sales for the foreseeable future, growing at a 3% CAGR.

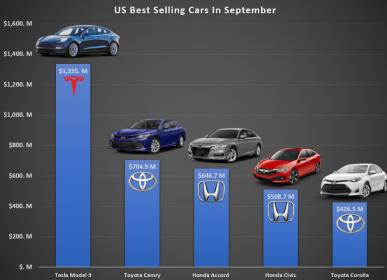

VW’s U.S. renaissance arrives, meanwhile, in the shadow of Tesla Motors’ brilliant 2018 sales performance. Multiple sources peg Tesla’s Model 3 EV as the top revenue-producing car in the U.S. in September and the fifth best-selling car overall in Q3. The bar is set high for Volkswagen and Electrify America – 2019 promises to be an interesting year for EVs.

SOURCE: CleanTechnica, GoodCarBadCar, InsideEVs, TroyTeslike, Kelley Blue Book