In 2015 Intel acquired Altera for $16.7B, changing one of the most heated rivalries (Xilinx vs. Altera) the fabless semiconductor ecosystem has ever seen. Prior to the acquisition, the FPGA market was fairly evenly split between Xilinx and Altera, with Lattice and Actel serving niche markets in the shadows. There were also two FPGA startups, Achronix and Tabula, waiting in the wings.

The trouble for Altera started when Xilinx moved to TSMC for manufacturing at 28nm. Prior to that, Xilinx was closely partnered with UMC and Altera with TSMC. UMC stumbled at 40nm, which gave Altera a significant lead over Xilinx. Whoever made the decision at Xilinx to move to TSMC should be crowned FPGA king. UMC stumbled again at 28nm and has yet to produce a production-quality FinFET process, so the move really was a lifesaver for Xilinx.

In the FPGA business, whoever is first to a new process node has a great advantage: the first design wins and the market share gains that follow. At 28nm Xilinx beat Altera by a small margin, which was significant since it was the first TSMC process node Xilinx designed to. At 20nm Xilinx beat Altera by a significant margin, which resulted in Altera moving to Intel for 14nm. Altera was delayed again, so Xilinx took a strong market lead with TSMC 16nm. When the Intel/Altera 14nm parts finally came out, they were very competitive on density, performance, and price, so it appeared the big FPGA rivalry would continue. Unfortunately, Intel stumbled at 10nm, allowing Xilinx to jump from TSMC 16nm to TSMC 7nm, skipping 10nm. To be fair, Intel 10nm is closer in density to TSMC 7nm than to TSMC 10nm. We will know for sure when the competing chips are field tested across multiple applications.

A couple of interesting FPGA notes: after the Altera acquisition, two of the other FPGA players started gaining fame and fortune. In 2010 Microsemi purchased Actel for $430M. The initial integration was a little bumpy, but Actel is now Microsemi's leading programmable product line. In 2017 Canyon Bridge (a Chinese-backed private equity firm) planned a $1.3B ($8.30 per share) acquisition of Lattice Semiconductor, which was blocked after US defense officials raised concerns. Lattice continues to thrive independently, trading at a high of more than $12 per share in 2019. Given the importance of programmable chips, China will be forced to develop its own FPGA technology if it is not allowed to acquire it.

Xilinx, of course, has continued to dominate the FPGA market since the Altera acquisition, with the exception of the cloud, where Intel/Altera is focused. Xilinx stock was relatively stagnant before Intel acquired Altera but is now trading at 3-4X the pre-acquisition price.

Of the two FPGA startups, both of which had Intel investments and manufacturing agreements, Achronix emerged the winner with more than $100M in revenue in 2018. Achronix originally started at Intel 22nm but has since moved to TSMC 16nm and 7nm, which will better position it against industry leader Xilinx. Tabula unfortunately did not fare so well. After raising $215M in venture capital starting in 2003, Tabula officially shut down in 2015. It also targeted Intel 22nm, and according to LinkedIn several key Tabula employees now work for Intel.

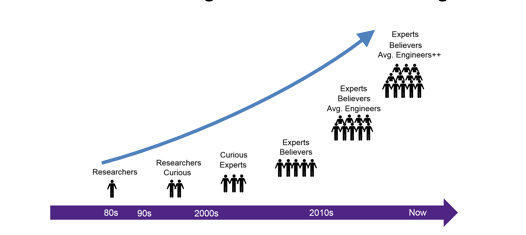

According to industry analysts, the FPGA market cap broke $60B in 2017 and is expected to approach $120B by 2026, growing at a healthy 7% CAGR. The growth of this market is mainly driven by rising demand for AI in the cloud and by growth in the Internet of Things (IoT), mobile devices, automotive and Advanced Driver Assistance Systems (ADAS), and wireless networks (5G). FPGAs still face the challenge of competing directly with ASICs, but at 7nm FPGAs will offer higher speed and density plus lower power consumption, so that may change, especially in the SoC prototyping and emulation markets, which are split between ASICs and FPGAs.
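As a quick sanity check on the analyst figures quoted above ($60B in 2017, approaching $120B by 2026, 7% CAGR), the sketch below compounds the 2017 figure at 7% over the nine years from 2017 to 2026, and also computes the rate a full doubling over that window would require. It is a minimal sketch that assumes simple annual compounding; `project` and `implied_cagr` are hypothetical helper names used only for illustration. Compounding $60B at 7% for nine years gives roughly $110B, while doubling to $120B in the same period implies a CAGR of about 8%.

```python
def project(start_value: float, cagr: float, years: int) -> float:
    """Compound start_value at a constant annual growth rate for `years` years."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that takes start_value to end_value in `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the text, in billions of US dollars.
START_2017 = 60.0
TARGET_2026 = 120.0
YEARS = 2026 - 2017  # 9 annual compounding periods (assumption: annual compounding)

projected = project(START_2017, 0.07, YEARS)
needed = implied_cagr(START_2017, TARGET_2026, YEARS)

print(f"$60B at 7% for {YEARS} years -> ${projected:.1f}B")
print(f"CAGR needed to reach $120B: {needed:.2%}")
```

The gap between the two results is small, so "approach $120B" is a reasonable rounding of a 7% growth rate rather than an exact projection.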