A few times a year, I check in with AMIQ EDA co-founder Cristian Amitroaie to see what’s new with their company and the integrated development environment (IDE) market for hardware design and verification. Usually he suggests a topic for us to discuss, but this time I specifically wanted to learn more about the version of their Design and Verification Tools (DVT) IDE built on the Visual Studio Code (VS Code) platform. They announced this product earlier this year, and my colleague Kalar wrote about it in some detail.

Microsoft developed VS Code, a language-aware source code editor with a streamlined graphical user interface (GUI), and released the source code on GitHub in 2015. VS Code can be customized for language support, editor theme, keyboard shortcuts, user preferences, and more. Thanks to this flexibility and its free availability, a broad ecosystem of extensions and themes has grown up around it. It is not surprising that hardware design and verification engineers would want support for their languages in an IDE based on VS Code.

I asked Cristian why they felt the need to develop DVT IDE for VS Code when they’ve had the very successful DVT Eclipse IDE since 2008. AMIQ EDA pretty much invented the whole concept of hardware IDEs, and they have lots of happy users, several of whom I have interviewed in past blog posts. DVT Eclipse IDE is based on the Eclipse Platform, which is also very popular, widely adopted, and supported by a wide range of plugins. I wondered whether some deficiency in Eclipse had prompted them to add support for VS Code.

Cristian explained that it all comes down to user preference. There is a generation of engineers who have used other IDEs based on VS Code and are familiar with its look and feel. There are many other engineers who know Eclipse better and have no interest in switching. Since AMIQ EDA is the industry’s leader in hardware IDEs, Cristian and his team felt that it made perfect sense to support both underlying platforms. Users are free to mix and match however they want, at no extra cost.

In my long experience in the industry, I have seen that both hardware and software engineers are reluctant to switch GUIs or editors. I am old enough to remember when we did most of our work using plain-text editors in full-screen mode on ASCII terminals. There were two primary choices: vi and Emacs. Users were surprisingly passionate about which they preferred, to such an extent that observers often referred to the “religion” of Emacs or vi. Perhaps the strong feelings about Eclipse and VS Code are just a new manifestation of an old feud.

Cristian said they are seeing lots of interest in DVT IDE for VS Code, as expected, but that they are not observing any significant migration of DVT Eclipse IDE users. Engineers sometimes try the other version out of curiosity, especially since it’s easy to do so using the same project settings and scripts. For the most part, however, engineers have a clear preference for either VS Code or Eclipse, and they choose their hardware IDE accordingly. AMIQ EDA is happy to support both.

I wondered whether there were any technical reasons to choose one version of DVT IDE over the other. Cristian said that they have worked very hard to make the two implementations as equivalent in functionality as possible. They have a common engine behind both interfaces to ensure consistency in code compilation and analysis. However, there are some differences in the user experience due to the different technologies used by the underlying platforms.

I found this intriguing, and asked Cristian for some examples. He said that Eclipse has better support for dedicated views of the code, such as hierarchy browsers and schematics. Eclipse also provides a way to show “breadcrumbs” as the user traverses the design and verification hierarchy. This makes it easy to know the location in the hierarchy of the file being edited and to navigate to another location. These actions are possible in VS Code, but in a less intuitive way.

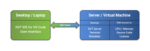

Cristian then took a deep dive into a capability that works much better in VS Code than in Eclipse: the ability to open a secure shell (SSH) connection to a remote machine from within the IDE. Using Remote SSH, users can connect from a laptop or desktop, for example a Windows PC or a Mac, to a workstation at the office. They can browse remote folders, edit files, and save results as if the files were local. This provides better performance than running an interactive GUI over a virtual network computing (VNC) connection.

The VS Code Remote SSH capability also supports the use of remote machines for compiling and analyzing the source code. Users can spawn a process on a dedicated machine, on a server farm, or in the cloud. This allows them to work from inexpensive desktops that lack the computational power and memory needed for high performance on large designs. Users can also open a terminal on a remote machine to run simulations and other tasks. Again, the performance is better than with VNC, and users feel as if they are doing everything locally.

Cristian closed our conversation by reminding me that AMIQ EDA is all about user choice. They support an incredible range of language and format standards, including SystemVerilog, Verilog, Verilog-AMS, VHDL, e, Property Specification Language (PSL), Portable Stimulus Standard (PSS), Unified Power Format (UPF), and the Universal Verification Methodology (UVM). Offering DVT IDE on either Eclipse or VS Code is the next logical step in that openness.

Also Read:

Using an IDE to Accelerate Hardware Language Learning

AMIQ EDA Adds Support for Visual Studio Code to DVT IDE Family