Initial thoughts

At IEDM, held in December 2024, TSMC presented “2nm Platform Technology featuring Energy-efficient Nanosheet Transistors and Interconnects co-optimized with 3DIC for AI, HPC and Mobile SoC Applications.” The authors are:

Geoffrey Yeap, S.S. Lin, H.L. Shang, H.C. Lin, Y.C. Peng, M. Wang, PW Wang, CP Lin, KF Yu, WY Lee, HK Chen, DW Lin, BR Yang, CC Yeh, CT Chan, JM Kuo, C-M Liu, TH Chiu, MC Wen, T.L. Lee, CY Chang, R. Chen, P-H Huang, C.S. Hou, YK Lin, FK Yang, J. Wang, S. Fung, Ryan Chen, C.H. Lee, TL Lee, W. Chang, DY Lee, CY Ting, T. Chang, HC Huang, HJ Lin, C. Tseng, CW Chang, KB Huang, YC Lu, C-H Chen, C.O. Chui, KW Chen, MH Tsai, CC Chen, N. Wu, HT Chiang, XM Chen, SH Sun, JT Tzeng, K. Wang, YC Peng, HJ Liao, T. Chen, YK Cheng, J. Chang, K. Hsieh, A. Cheng, G. Liu, A. Chen, HT Lin, KC Chiang, CW Tsai, H. Wang, W. Sheu, J. Yeh, YM Chen, CK Lin, J. Wu, M. Cao, LS Juang, F. Lai, Y. Ku, S.M. Jang, L.C. Lu, with Geoffrey Yeap presenting the work.

This paper continued TSMC’s trend over the last several years of presenting marketing papers at IEDM instead of technical papers. In fact, this paper took the trend even further: there are no pitches in the paper, no SRAM cell size, and the graphs are all relative performance graphs without real units. Although the paper doesn’t present the kind of technical details that would typically be included in an IEDM paper, it does paint a picture of a process ready for 2025 production, and the session was packed.

In this review we will take the few substantive details that are in the paper, combine them with our own analysis, and present how the process compares to competing 2nm class processes.

In terms of the overarching Power, Performance, and Area (PPA), the paper states that the process delivers a 30% power improvement or a 15% performance gain, and >1.15x density, versus the previous 3nm node. Note: the 3nm paper reference suggests this is in comparison to N3E, not N3.

Power

At the 14nm (Samsung)/16nm (TSMC) node, Samsung and TSMC both produced the Apple A9 processor. Measurements by Tom’s Hardware found the Samsung version had slightly better power performance than the TSMC version. We believe the A9 was designed for Samsung first, so that may simply reflect a design that was optimized for Samsung and then ported to TSMC. Nevertheless, the power was very close between the two. Going forward from 14nm/16nm to 10nm, 7nm, 5nm, 3nm, and now 2nm, Samsung and TSMC have both provided relative power improvements for each node versus the previous node.

We have been able to compare Samsung and TSMC at 3 different nodes since the 14/16nm comparison, and our extrapolations have been consistent with those comparisons.

At 10nm TSMC provided a larger power reduction than Samsung and maintained that lead until 3nm, where Samsung’s Gate All Around (GAA) process provided a large enough improvement to mostly close the gap in power to TSMC’s 3nm FinFET process (GAA is expected to provide a greater power improvement than FinFET).

TSMC’s announced 2nm power improvement of 30% versus 3nm is greater than Samsung’s 25% improvement, so TSMC once again maintains a lead.

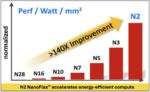

During the presentation of the paper, graphs were shown of power efficiency and performance per watt versus node. The power efficiency graph was in one version of the paper, although it is not in the “final” version of the paper published in the proceedings. Thankfully we captured the power efficiency graph because it is very interesting to analyze, see figure 1.

We took the graph image, pulled it into Excel, and created an Excel graph overlaying it, with the 28nm bar normalized to 1, and then entered values for the other bars until they matched the graph. If we then build a set of bars starting at 28nm = 1 and scaled up based on the TSMC announced node to node power improvements, we get a total improvement of less than 9x. Nodes from N28 to N10 match well, but from N7 on the bars on the graph show more improvement per node than TSMC has announced. The N3 to N2 bars on the graph alone show a 55% improvement versus the announced 30% improvement.
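The bar-building step described above can be sketched in a few lines of Python. This is only an illustration of the compounding arithmetic, not the Excel workflow itself: the function name `efficiency_bars` and the example ratios are hypothetical placeholders, not TSMC’s announced values, and the sketch assumes each node to node improvement has already been expressed as a multiplicative ratio (how a quoted percentage maps to such a ratio is itself an assumption the analyst has to make).

```python
from itertools import accumulate
from operator import mul


def efficiency_bars(step_ratios, baseline=1.0):
    """Return cumulative bars: the baseline, then the running product
    of each successive node-to-node ratio."""
    return list(accumulate(step_ratios, mul, initial=baseline))


# Hypothetical node-to-node ratios for illustration only,
# NOT TSMC's announced values.
example_ratios = [1.5, 1.4, 1.3]

bars = efficiency_bars(example_ratios)
for index, bar in enumerate(bars):
    print(f"step {index}: {bar:.2f}x versus baseline")
```

The last bar is the total improvement versus the baseline node, which is the number that can be compared against the tallest bar read off the graph.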

It isn’t clear what may be driving this difference, but it is a big disconnect. This may be why the graph was removed from the final paper.

Performance

Similar to the power analysis above, at Samsung 14nm/TSMC 16nm the Apple A9 processor had identical performance on the 2 processes. Normalizing both processes to 1 and applying the announced node to node performance improvements from both companies, it is possible to compare performance per node. It has also been possible to use a comparison of Intel 10SF processors versus AMD processors on TSMC’s 7nm process to add Intel to the analysis and forward calculate based on Intel’s performance by node announcements.

We have been able to check our extrapolations at 3 nodes for Samsung and TSMC since the 16/14nm nodes as well as Intel at 2 nodes and those checks have confirmed our extrapolations are tracking correctly.

Based on this analysis it is our belief that Intel 18A has the highest performance for a 2nm class process with TSMC in second place and Samsung in third place.

Our performance index values are in the full article available with free registration on the TechInsights platform here.

Area

The third part of PPA is area. We analyze two “area” related factors: one is high density logic cell transistor density and the second is SRAM cell size. TechInsights has done detailed reverse engineering work on TSMC’s N3E process, and we have all the pitches necessary to calculate our standard high density logic cell transistor density. Similarly, we have analyzed Samsung SF3E and SF3. Both TSMC in this paper and Samsung in public statements have provided density improvement values for 2nm. In the case of Intel we have used our own estimated pitches to do a density comparison. For high density logic cells, TSMC is well ahead of Samsung and Intel on density; Intel is second and Samsung is third.

The high density logic cell transistor density is in the full article available with free registration on the TechInsights platform here.

As previously mentioned, the TSMC paper does not include SRAM cell sizes, however there is a graph of SRAM density versus node, see figure 3.

The problem with this is that an SRAM array includes not only the SRAM cells but also overhead. For example, 7nm has 25.0 Mb/mm2, and the SRAM cell size at 7nm was 0.0270 um2. If you multiply 25.0 Mb by the SRAM cell size, you get 0.675 mm2. The difference between 1.000 mm2 and 0.675 mm2 is the overhead, and it isn’t constant from node to node, see table 1.
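The 7nm arithmetic above can be reproduced with a short Python sketch. The function name `sram_array_overhead` is ours for illustration, and the sketch assumes 1 Mb = 10^6 bits, which is the convention that reproduces the 0.675 mm2 figure in the text.

```python
def sram_array_overhead(density_mb_per_mm2, cell_area_um2):
    """Split 1 mm2 of SRAM array into cell area and overhead area (both in mm2).

    Assumes 1 Mb = 1e6 bits and uses 1 mm2 = 1e6 um2.
    """
    bits_per_mm2 = density_mb_per_mm2 * 1e6
    cell_area_mm2 = bits_per_mm2 * cell_area_um2 / 1e6
    overhead_mm2 = 1.0 - cell_area_mm2
    return cell_area_mm2, overhead_mm2


# 7nm values quoted in the text: 25.0 Mb/mm2 and a 0.0270 um2 cell.
cells, overhead = sram_array_overhead(25.0, 0.0270)
print(f"cells: {cells:.3f} mm2, overhead: {overhead:.3f} mm2")
```

For the 7nm values this gives about 0.675 mm2 of cells and 0.325 mm2 of overhead per mm2 of array, so an array density number cannot be converted back to a cell size without knowing the overhead at that node.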

Yield

Yield is a hot topic these days, with a lot of reports about Samsung struggling with yield at 3nm and losing customers due to low yield. There have also been some recent reports that Intel’s 18A yield is 10%.

In the paper TSMC reports that a 256Mb SRAM array has >80% average yield and >90% peak yield. These yields at this point in development indicate excellent defect densities. There are other yield components besides those tested in an SRAM array, but these are impressive results.

With respect to the report of Intel’s 10% yield, two separate credible sources have told us that it simply isn’t true and that yields are much better than that. Any report of 10% yield also raises the questions of how big the die is, what the die is, and at what point in development that yield was seen, if it is even true. Our belief, based on our sources, is that the 10% reported yield is either wrong or old data.

Wafer price

Another number that has been widely circulated is that TSMC is going to charge $30,000 per wafer for 2nm.

TechInsights produces the world’s leading cost and price models for semiconductors. Prior to 3nm entering production we were projecting <$20,000 per wafer and a few customers contacted us insisting 3nm prices would be $20,000 to $25,000 per wafer. Once 3nm entered production we were able to run our proprietary forensics on TSMC’s financials and determine we were correct, and the volume price was <$20,000/wafer by thousands of dollars.

To go from a price of <$20,000/wafer for 3nm wafers to $30,000/wafer for 2nm wafers is a >1.5x price increase for a 1.15x density improvement. That is a dramatic increase in transistor cost, and it raises the question of who would pay that. Our price estimates are <$30,000/wafer. There have also been reports that Apple, who is typically TSMC’s lead customer for each node, may be forgoing initial 2nm use due to price, although we have also heard pushback on that.
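The transistor cost argument above reduces to a single ratio, sketched here in Python. The function name `relative_transistor_cost` is ours for illustration, and the sketch uses the boundary values from the text ($20,000 and $30,000 per wafer, 1.15x density). It also assumes, as a simplification, that yield and usable wafer area are the same on both nodes.

```python
def relative_transistor_cost(price_ratio, density_ratio):
    """Cost per transistor on the new node relative to the old node,
    assuming equal yield and equal usable wafer area (a simplification)."""
    return price_ratio / density_ratio


# Boundary values from the text; the actual 3nm price is stated as below $20,000.
price_ratio = 30_000 / 20_000
ratio = relative_transistor_cost(price_ratio, 1.15)
print(f"relative cost per transistor: {ratio:.2f}x")
```

With these inputs the cost per transistor comes out at about 1.30x the 3nm value. A 3nm price below $20,000 pushes the ratio higher, while a density gain above 1.15x pushes it lower.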

Another element of this discussion is what volumes the pricing is for. TSMC’s high volume wafer price is a lot lower than their low volume wafer price, so volume needs to be considered in any discussion. In general, we believe $30,000 is higher than the average to high volume pricing will be.

If TSMC prices 2nm wafers at $30,000/wafer they will create a lot of pressure for customers to switch to Intel and Samsung for 2nm class wafer supplies.

Backside Power Delivery

The TSMC paper does not address backside power delivery but competing 2nm processes will be implementing backside power delivery.

Intel 18A will have backside power delivery, and with a 2025 ramp Intel will be the first to implement this technology. In 2026 Samsung’s SF2P process is also due to implement backside power delivery. Finally, TSMC is not expected to implement backside power delivery on their 2nm process variants at all and will wait until 2027 (recent reports are that this is being pulled in to 2026) to implement it on their A16 process. The A16 backside power delivery is expected to be a direct backside connection that can provide smaller track heights than Intel’s and likely Samsung’s implementation.

Since Intel is the most performance focused of the three companies, it makes sense that they are implementing backside power delivery first.

Another interesting thing we are hearing about backside power delivery is that foundry HPC customers want it but mobile customers don’t due to cost.

Over multiple generations we may see nodes with and without backside power delivery, and given the effect it has on metal 0, the design rules would likely be different. In addition, for the highest performance we expect molybdenum to be introduced first for vias and later for critical interconnect. This could lead to nodes splitting between backside power delivery and molybdenum metallization for HPC and no backside power and copper metallization for mobile.

Other

One final interesting item in the paper is the comment about “flat passivation”. Many processes have a top aluminum metal layer, and the passivation follows the metal contours. If something like hybrid bonding is desired, the wafer surface must be flat. Flat passivation is presumably a planarized top layer to enable bonding.

Conclusion

TSMC has disclosed a 2nm process likely to be the densest available 2nm class process. It also appears to be the most power efficient, at least when compared to Samsung. In terms of performance, we believe Intel 18A is the leader. The early yield reports appear promising, but the reports of $30,000/wafer pricing do not, in our opinion, represent acceptable value for the process and may present an opportunity for Intel and Samsung to capture market share. TSMC 2nm should be in production in the second half of this year.
