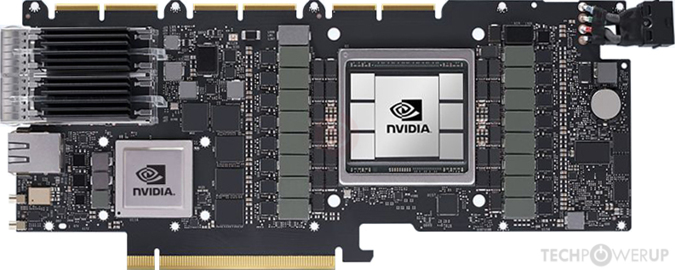

I said that gaming PCs (CPUs) are a relatively small and shrinking part of the total leading-edge silicon market; yes they're still growing -- at least, for the time being -- but the total market is predicted to grow faster, and the vast majority of this growth is predicted to be in AI, especially engines at the hyperscalers (about 50%/year CAGR) but also GPUs at the endpoints. By the time all this gets into its stride in 18A/N2, this market will be bigger than gaming PCs, even including the GPUs inside these, and much bigger than the CPUs where single-core speed is headlined (but increasingly less relevant).

Gaming PCs are not a "relatively small market". Nvidia sold just under $3B of GeForce GPUs in 2023, impressive considering that the majority of the gaming market is now integrated graphics. While revenue-wise that's only 25% of Nvidia's data center GPU sales, the entire PC gaming hardware market is estimated at over $50B with a 12.9% CAGR. AI GPUs are Nvidia's future, but Intel isn't on that track (yet, I hope for their sake). Like I said, I've never thought Intel's client-first strategy was a good thing for data center silicon, but it still looks like Intel's strategy.

Gaming PC Market Size, Share And Trends Report, 2030

The global gaming PC market size was valued at USD 50.23 billion in 2022 and is expected to grow at a CAGR of 12.9% from 2023 to 2030. www.grandviewresearch.com

That's what TSMC say, they're building their business in the next few years based on this, so I tend to believe them ;-)
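To put rough numbers on the growth-rate argument, here is a minimal Python sketch of the compounding arithmetic. The USD 50.23 billion figure and the 12.9% and 50% CAGRs are the ones quoted in this thread; the AI starting size (`ai_start`) is a purely hypothetical placeholder, since no such figure is given here, and treating 2022 to 2030 as eight compounding years is my own simplifying assumption. The helper names are illustrative only.

```python
import math


def project(value, cagr, years):
    """Compound `value` forward by `years` at an annual growth rate `cagr`."""
    return value * (1.0 + cagr) ** years


def years_to_overtake(small, large, cagr_small, cagr_large):
    """Years until a smaller, faster-growing market matches a larger one.

    Returns 0.0 if it is already at least as large, and None if it is
    not growing faster and so never catches up.
    """
    if small >= large:
        return 0.0
    if cagr_small <= cagr_large:
        return None
    growth_ratio = (1.0 + cagr_small) / (1.0 + cagr_large)
    return math.log(large / small) / math.log(growth_ratio)


# Figures quoted in the thread (USD billion, fractional CAGR).
gaming_2022 = 50.23
gaming_cagr = 0.129
ai_cagr = 0.50

# ASSUMPTION: 8 compounding years from the 2022 base to 2030.
gaming_2030 = project(gaming_2022, gaming_cagr, 8)

# HYPOTHETICAL placeholder, not a sourced number: substitute a real estimate.
ai_start = 20.0
crossover = years_to_overtake(ai_start, gaming_2022, ai_cagr, gaming_cagr)

print(f"Gaming PC market in 2030: about USD {gaming_2030:.1f} billion")
print(f"Years for the AI segment to catch up: {crossover:.1f}")
```

The point of the sketch is only that a large gap in growth rates closes a several-fold gap in market size within a few years; the actual crossover depends entirely on the starting size you plug in.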

My point is that maximum single-core (or even multi-core) clock rate (6GHz!!!) so beloved by gamers (and Intel...) is becoming less and less important, and density (cost) and power efficiency (heat) are becoming more and more important -- at least, as far as the biggest markets for the most advanced nodes are concerned.

So that's where the process should be targeted if you want to give (most big) customers what they're looking for -- and it's clearly what TSMC are doing, because these are already their customers.

In contrast, Intel is coming from an IDM background where the biggest customer was themselves (x86 CPUs) and their headline #1 target was clock rate, with power consumption/density/cost coming second -- and their processes (including 18A) still seem to be targeted at this market.
